Don't leak the function that is called on drop

It probably wasn't causing problems anyway, but still, a `// this leaks, please don't pass anything that owns memory` is not sustainable.

I could implement a version which does not require `Option`, but it would require `unsafe`, at which point it's probably not worth it.

Use `Option::is_some_and` and `Result::is_ok_and` in the compiler

`.is_some_and(..)`/`.is_ok_and(..)` replace `.map_or(false, ..)` and `.map(..).unwrap_or(false)`, making the code more readable.

This PR is a sibling of https://github.com/rust-lang/rust/pull/111873#issuecomment-1561316515

Preprocess and cache dominator tree

Preprocessing dominators has a very strong effect for https://github.com/rust-lang/rust/pull/111344.

That pass checks that assignments dominate their uses repeatedly. Using the unprocessed dominator tree caused a quadratic runtime (number of bbs x depth of the dominator tree).

This PR also caches the dominator tree and the pre-processed dominators in the MIR cfg cache.

Rebase of https://github.com/rust-lang/rust/pull/107157

cc `@tmiasko`

Process current bucket instead of parent's bucket when starting loop for dominators.

The linked paper by Georgiadis suggests in §2.2.3 to process `bucket[w]` when beginning the loop, instead of `bucket[parent[w]]` when finishing it.

In the test case, we correctly computed `idom[2] = 0` and `sdom[3] = 1`, but the algorithm returned `idom[3] = 1`, instead of the correct value 0, because of the path 0-7-2-3.

This provoked LLVM ICE in https://github.com/rust-lang/rust/pull/111061#issuecomment-1546912112. LLVM checks that SSA assignments dominate uses using its own implementation of Lengauer-Tarjan, and saw case where rustc was breaking the dominance property.

r? `@Mark-Simulacrum`

Change the immediate_dominator return type to Option, and use None to

indicate that node has no immediate dominator.

Also fix the issue where the start node would be returned as its own

immediate dominator.

Introduce `DynSend` and `DynSync` auto trait for parallel compiler

part of parallel-rustc #101566

This PR introduces `DynSend / DynSync` trait and `FromDyn / IntoDyn` structure in rustc_data_structure::marker. `FromDyn` can dynamically check data structures for thread safety when switching to parallel environments (such as calling `par_for_each_in`). This happens only when `-Z threads > 1` so it doesn't affect single-threaded mode's compile efficiency.

r? `@cjgillot`

bump windows crate 0.46 -> 0.48

This drops duped version of crate(0.46), reduces `rustc_driver.dll` ~800kb and reduces exported functions number from 26k to 22k.

Also while here, added `tidy-alphabetical` sorting to lists in tidy allowed lists.

Min specialization improvements

- Don't allow specialization impls with no items, such implementations are probably not correct and only occur as mistakes in the compiler and standard library

- Fix a missing normalization call

- Adds spans for lifetime errors from overly general specializations

Closes#79457Closes#109815

Such implementations are usually mistakes and are not used in the

compiler or standard library (after this commit) so forbid them with

`min_specialization`.

Move the WorkerLocal type from the rustc-rayon fork into rustc_data_structures

This PR moves the definition of the `WorkerLocal` type from `rustc-rayon` into `rustc_data_structures`. This is enabled by the introduction of the `Registry` type which allows you to group up threads to be used by `WorkerLocal` which is basically just an array with an per thread index. The `Registry` type mirrors the one in Rayon and each Rayon worker thread is also registered with the new `Registry`. Safety for `WorkerLocal` is ensured by having it keep a reference to the registry and checking on each access that we're still on the group of threads associated with the registry used to construct it.

Accessing a `WorkerLocal` is micro-optimized due to it being hot since it's used for most arena allocations.

Performance is slightly improved for the parallel compiler:

<table><tr><td rowspan="2">Benchmark</td><td colspan="1"><b>Before</b></th><td colspan="2"><b>After</b></th></tr><tr><td align="right">Time</td><td align="right">Time</td><td align="right">%</th></tr><tr><td>🟣 <b>clap</b>:check</td><td align="right">1.9992s</td><td align="right">1.9949s</td><td align="right"> -0.21%</td></tr><tr><td>🟣 <b>hyper</b>:check</td><td align="right">0.2977s</td><td align="right">0.2970s</td><td align="right"> -0.22%</td></tr><tr><td>🟣 <b>regex</b>:check</td><td align="right">1.1335s</td><td align="right">1.1315s</td><td align="right"> -0.18%</td></tr><tr><td>🟣 <b>syn</b>:check</td><td align="right">1.8235s</td><td align="right">1.8171s</td><td align="right"> -0.35%</td></tr><tr><td>🟣 <b>syntex_syntax</b>:check</td><td align="right">6.9047s</td><td align="right">6.8930s</td><td align="right"> -0.17%</td></tr><tr><td>Total</td><td align="right">12.1586s</td><td align="right">12.1336s</td><td align="right"> -0.21%</td></tr><tr><td>Summary</td><td align="right">1.0000s</td><td align="right">0.9977s</td><td align="right"> -0.23%</td></tr></table>

cc `@SparrowLii`

Sprinkle some `#[inline]` in `rustc_data_structures::tagged_ptr`

This is based on `nm --demangle (rustc +a --print sysroot)/lib/librustc_driver-*.so | rg CopyTaggedPtr` which shows many methods that should probably be inlined. May fix the regression in https://github.com/rust-lang/rust/pull/110795.

r? ```@Nilstrieb```

Add `impl_tag!` macro to implement `Tag` for tagged pointer easily

r? `@Nilstrieb`

This should also lifts the need to think about safety from the callers (`impl_tag!` is robust (ish, see the macro issue)) and removes the possibility of making a "weird" `Tag` impl.

Encode hashes as bytes, not varint

In a few places, we store hashes as `u64` or `u128` and then apply `derive(Decodable, Encodable)` to the enclosing struct/enum. It is more efficient to encode hashes directly than try to apply some varint encoding. This PR adds two new types `Hash64` and `Hash128` which are produced by `StableHasher` and replace every use of storing a `u64` or `u128` that represents a hash.

Distribution of the byte lengths of leb128 encodings, from `x build --stage 2` with `incremental = true`

Before:

```

( 1) 373418203 (53.7%, 53.7%): 1

( 2) 196240113 (28.2%, 81.9%): 3

( 3) 108157958 (15.6%, 97.5%): 2

( 4) 17213120 ( 2.5%, 99.9%): 4

( 5) 223614 ( 0.0%,100.0%): 9

( 6) 216262 ( 0.0%,100.0%): 10

( 7) 15447 ( 0.0%,100.0%): 5

( 8) 3633 ( 0.0%,100.0%): 19

( 9) 3030 ( 0.0%,100.0%): 8

( 10) 1167 ( 0.0%,100.0%): 18

( 11) 1032 ( 0.0%,100.0%): 7

( 12) 1003 ( 0.0%,100.0%): 6

( 13) 10 ( 0.0%,100.0%): 16

( 14) 10 ( 0.0%,100.0%): 17

( 15) 5 ( 0.0%,100.0%): 12

( 16) 4 ( 0.0%,100.0%): 14

```

After:

```

( 1) 372939136 (53.7%, 53.7%): 1

( 2) 196240140 (28.3%, 82.0%): 3

( 3) 108014969 (15.6%, 97.5%): 2

( 4) 17192375 ( 2.5%,100.0%): 4

( 5) 435 ( 0.0%,100.0%): 5

( 6) 83 ( 0.0%,100.0%): 18

( 7) 79 ( 0.0%,100.0%): 10

( 8) 50 ( 0.0%,100.0%): 9

( 9) 6 ( 0.0%,100.0%): 19

```

The remaining 9 or 10 and 18 or 19 are `u64` and `u128` respectively that have the high bits set. As far as I can tell these are coming primarily from `SwitchTargets`.

Spelling compiler

This is per https://github.com/rust-lang/rust/pull/110392#issuecomment-1510193656

I'm going to delay performing a squash because I really don't expect people to be perfectly happy w/ my changes, I really am a human and I really do make mistakes.

r? Nilstrieb

I'm going to be flying this evening, but I should be able to squash / respond to reviews w/in a day or two.

I tried to be careful about dropping changes to `tests`, afaict only two files had changes that were likely related to the changes for a given commit (this is where not having eagerly squashed should have given me an advantage), but, that said, picking things apart can be error prone.

Rollup of 7 pull requests

Successful merges:

- #109981 (Set commit information environment variables when building tools)

- #110348 (Add list of supported disambiguators and suffixes for intra-doc links in the rustdoc book)

- #110409 (Don't use `serde_json` to serialize a simple JSON object)

- #110442 (Avoid including dry run steps in the build metrics)

- #110450 (rustdoc: Fix invalid handling of nested items with `--document-private-items`)

- #110461 (Use `Item::expect_*` and `ImplItem::expect_*` more)

- #110465 (Assure everyone that `has_type_flags` is fast)

Failed merges:

r? `@ghost`

`@rustbot` modify labels: rollup

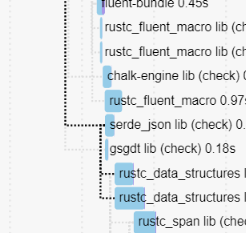

Don't use `serde_json` to serialize a simple JSON object

This avoids `rustc_data_structures` depending on `serde_json` which allows it to be compiled much earlier, unlocking most of rustc.

This used to not matter, but after #110407 we're not blocked on fluent anymore, which means that it's now a blocking edge.

This saves a few more seconds.

cc ````@Zoxc```` who added it recently

Implement StableHasher::write_u128 via write_u64

In https://github.com/rust-lang/rust/pull/110367#issuecomment-1510114777 the cachegrind diffs indicate that nearly all the regression is from this:

```

22,892,558 ???:<rustc_data_structures::sip128::SipHasher128>::slice_write_process_buffer

-9,502,262 ???:<rustc_data_structures::sip128::SipHasher128>::short_write_process_buffer::<8>

```

Which happens because the diff for that perf run swaps a `Hash::hash` of a `u64` to a `u128`. But `slice_write_process_buffer` is a `#[cold]` function, and is for handling hashes of arbitrary-length byte arrays.

Using the much more optimizer-friendly `u64` path twice to hash a `u128` provides a nice perf boost in some benchmarks.

Tagged pointers, now with strict provenance!

This is a big refactor of tagged pointers in rustc, with three main goals:

1. Porting the code to the strict provenance

2. Cleanup the code

3. Document the code (and safety invariants) better

This PR has grown quite a bit (almost a complete rewrite at this point...), so I'm not sure what's the best way to review this, but reviewing commit-by-commit should be fine.

r? `@Nilstrieb`

Remove some suspicious cast truncations

These truncations were added a long time ago, and as best I can tell without a perf justification. And with rust-lang/rust#110410 it has become perf-neutral to not truncate anymore. We worked hard for all these bits, let's use them.