Remove the -Zinsert-sideeffect

This removes all of the code we had in place to work-around LLVM's

handling of forward progress. Excluded from this removal is a workaround

where we'd insert a `sideeffect` into clearly infinite loops such as

`loop {}`. This code remains conditionally effective when the LLVM

version is earlier than 12.0, which fixed the forward progress related

miscompilations at their root.

Store HIR attributes in a side table

Same idea as #72015 but for attributes.

The objective is to reduce incr-comp invalidations due to modified attributes.

Notably, those due to modified doc comments.

Implementation:

- collect attributes during AST->HIR lowering, in `LocalDefId -> ItemLocalId -> &[Attributes]` nested tables;

- access the attributes through a `hir_owner_attrs` query;

- local refactorings to use this access;

- remove `attrs` from HIR data structures one-by-one.
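
The nested side table described above can be sketched roughly as follows. This is only an illustration of the idea, not the compiler's implementation: `OwnerId`, `LocalId`, `Attribute` and `AttrTable` are hypothetical stand-ins for `LocalDefId`, `ItemLocalId`, the real attribute type and the data behind the `hir_owner_attrs` query.

```rust
use std::collections::BTreeMap;

/// Hypothetical stand-in for the compiler's `LocalDefId` (a HIR owner).
#[derive(Clone, Copy, Debug, PartialEq, Eq, PartialOrd, Ord)]
pub struct OwnerId(pub u32);

/// Hypothetical stand-in for `ItemLocalId` (a node inside one owner).
#[derive(Clone, Copy, Debug, PartialEq, Eq, PartialOrd, Ord)]
pub struct LocalId(pub u32);

/// Simplified attribute: just its source text (an assumption for this sketch).
pub type Attribute = String;

/// Nested side table: owner -> node within that owner -> attributes.
#[derive(Default)]
pub struct AttrTable {
    owners: BTreeMap<OwnerId, BTreeMap<LocalId, Vec<Attribute>>>,
}

impl AttrTable {
    /// Record attributes during lowering instead of storing them in the node.
    pub fn insert(&mut self, owner: OwnerId, local: LocalId, attrs: Vec<Attribute>) {
        if attrs.is_empty() {
            return; // nodes without attributes take no space in the table
        }
        self.owners.entry(owner).or_default().insert(local, attrs);
    }

    /// Per-owner lookup, loosely analogous to a per-owner query.
    pub fn owner_attrs(&self, owner: OwnerId) -> Option<&BTreeMap<LocalId, Vec<Attribute>>> {
        self.owners.get(&owner)
    }

    /// Attributes of a single node; an empty slice when none were recorded.
    pub fn attrs(&self, owner: OwnerId, local: LocalId) -> &[Attribute] {
        self.owners
            .get(&owner)
            .and_then(|nodes| nodes.get(&local))
            .map(|attrs| attrs.as_slice())
            .unwrap_or(&[])
    }
}
```

Because the attributes live outside the node structures, editing a doc comment in this model only changes one owner's entry in the table and leaves the nodes themselves untouched.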

Change in behaviour:

- the HIR visitor traverses all attributes at once instead of parent-by-parent;

- attribute arrays are sometimes duplicated: for statements and variant constructors;

- as a consequence, attributes are marked as used after unused-attribute lint emission to avoid duplicate lints.

~~Current bug: the lint level is not correctly applied in `std::backtrace_rs`, triggering an unused attribute warning on `#![no_std]`. I welcome suggestions.~~

This updates all places where match branches check on StatementKind or UseContext.

This doesn't properly implement them, but adds TODOs where they are, and also adds some best

guesses to what they should be in some cases.

I'm still not totally sure if this is the right way to implement the memcpy, but that portion

compiles correctly now. Now to fix the compile errors everywhere else :).

Implement NOOP_METHOD_CALL lint

Implements the beginnings of https://github.com/rust-lang/lang-team/issues/67 - a lint for detecting noop method calls (e.g., calling `<&T as Clone>::clone()` when `T: !Clone`).

This PR does not fully realize the vision and has a few limitations that need to be addressed either before merging or in subsequent PRs:

* [ ] No UFCS support

* [ ] The warning message is pretty plain

* [ ] Doesn't work for `ToOwned`

The implementation uses [`Instance::resolve`](https://doc.rust-lang.org/nightly/nightly-rustc/rustc_middle/ty/instance/struct.Instance.html#method.resolve), which is normally used later in the compiler. It seems that there are some invariants that this function relies on that we try our best to respect. For instance, it expects substitutions to have happened, which haven't yet been performed, but we check first for `needs_subst` to ensure we're dealing with a monomorphic type.
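
For illustration, here is a small example of the kind of call the lint is aimed at. The names `NotClone` and `noop_clone` are made up for this sketch. Since `T` has no `Clone` bound, method resolution falls back to `<&T as Clone>::clone`, which only copies the reference.

```rust
/// A type that deliberately does not implement `Clone` (hypothetical example type).
pub struct NotClone(pub u32);

/// `T` carries no `Clone` bound, so `value.clone()` resolves to
/// `<&T as Clone>::clone` and hands back the very same reference.
pub fn noop_clone<T>(value: &T) -> &T {
    // This call does nothing useful: it copies the reference, not the value.
    value.clone()
}
```

Calling `noop_clone(&NotClone(5))` yields a reference to the original value rather than a new `NotClone`, which is usually not what the author of such a call intended.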

Thank you to `@davidtwco`, `@Aaron1011`, and `@wesleywiser` for helping me at various points throughout this PR ❤️.

Set codegen thread names

Set names on threads spawned during codegen. Various debugging and profiling tools can take advantage of this to show a more useful identifier for threads.
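
As a rough sketch of the mechanism (not the code from this PR), the standard library's `std::thread::Builder` lets a name be attached when a thread is spawned. The helper `run_named` and the name passed to it are hypothetical.

```rust
use std::io;
use std::thread;

/// Spawns a worker with the given name and returns the name the worker
/// observes for itself. `run_named` is a hypothetical helper for this sketch.
pub fn run_named(name: &str) -> io::Result<Option<String>> {
    let handle = thread::Builder::new()
        .name(name.to_string()) // the name is passed on to the OS where supported
        .spawn(|| thread::current().name().map(str::to_owned))?;
    Ok(handle.join().expect("worker thread panicked"))
}
```

Some platforms truncate long thread names, so short names that lead with the most useful information tend to work best in debuggers and profilers.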

For example, gdb will show thread names in `info threads`:

```

(gdb) info threads

Id Target Id Frame

1 Thread 0x7fffefa7ec40 (LWP 2905) "rustc" __pthread_clockjoin_ex (threadid=140737214134016, thread_return=0x0, clockid=<optimized out>, abstime=<optimized out>, block=<optimized out>)

at pthread_join_common.c:145

2 Thread 0x7fffefa7b700 (LWP 2957) "rustc" 0x00007ffff125eaa8 in llvm::X86_MC::initLLVMToSEHAndCVRegMapping(llvm::MCRegisterInfo*) ()

from /home/wesley/.rustup/toolchains/stage1/lib/librustc_driver-f866439e29074957.so

3 Thread 0x7fffeef0f700 (LWP 3116) "rustc" futex_wait_cancelable (private=0, expected=0, futex_word=0x7fffe8602ac8) at ../sysdeps/nptl/futex-internal.h:183

* 4 Thread 0x7fffeed0e700 (LWP 3123) "rustc" rustc_codegen_ssa::back::write::spawn_work (cgcx=..., work=...) at /home/wesley/code/rust/rust/compiler/rustc_codegen_ssa/src/back/write.rs:1573

6 Thread 0x7fffe113b700 (LWP 3150) "opt foof.7rcbfp" 0x00007ffff2940e62 in llvm::CallGraph::populateCallGraphNode(llvm::CallGraphNode*) ()

from /home/wesley/.rustup/toolchains/stage1/lib/librustc_driver-f866439e29074957.so

8 Thread 0x7fffe0d39700 (LWP 3158) "opt foof.7rcbfp" 0x00007fffefe8998e in malloc_consolidate (av=av@entry=0x7ffe2c000020) at malloc.c:4492

9 Thread 0x7fffe0f3a700 (LWP 3162) "opt foof.7rcbfp" 0x00007fffefef27c4 in __libc_open64 (file=0x7fffe0f38608 "foof.foof.7rcbfp3g-cgu.6.rcgu.o", oflag=524865) at ../sysdeps/unix/sysv/linux/open64.c:48

(gdb)

```

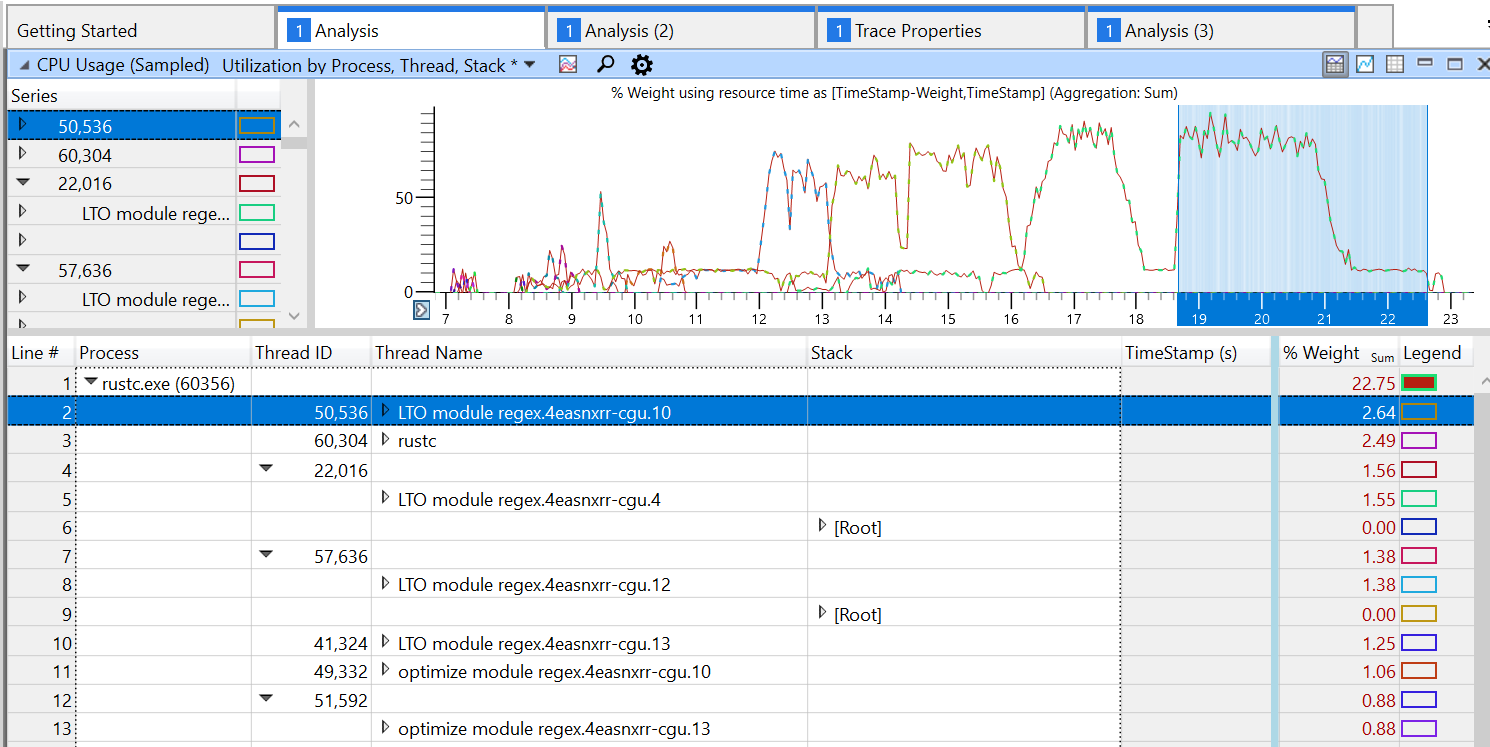

and Windows Performance Analyzer will also show this information when profiling:

rustc_codegen_ssa: tune codegen according to available concurrency

This change tunes ahead-of-time codegening according to the amount of

concurrency available, rather than according to the number of CPUs on

the system. This can lower memory usage by reducing the number of

compiled LLVM modules in memory at once, particularly across several

rustc instances.

Previously, each rustc instance would assume that it should codegen

ahead of time to meet the demand of number-of-CPUs workers. But often, a

rustc instance doesn't have nearly that much concurrency available to

it, because the concurrency availability is split, via the jobserver,

across all active rustc instances spawned by the driving cargo process,

and is further limited by the `-j` flag argument. Therefore, each rustc

might have had several times more LLVM modules in memory than

it really needed to meet demand. If the modules were large, the effect

on memory usage would be noticeable.

With this change, the required amount of ahead-of-time codegen scales up

with the actual number of workers running within a rustc instance. Note

that the number of workers running can be less than the actual

concurrency available to a rustc instance. However, if more concurrency

is actually available, workers are spun up quickly as job tokens are

acquired, and the ahead-of-time codegen scales up quickly as well.
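
One way to picture the idea is a check that decides whether enough modules are already queued for the workers currently running. The sketch below is an assumption-laden illustration: the function name and the one-module-per-four-workers ratio are made up here and are not taken from the change itself.

```rust
/// Hypothetical heuristic: keep roughly one pre-compiled module queued per
/// four running workers, and always at least one. The ratio is an assumption
/// chosen for this sketch.
pub fn queue_full_enough(items_in_queue: usize, workers_running: usize) -> bool {
    // Round up so that 1..=4 workers need one queued item, 5..=8 need two, etc.
    let target = std::cmp::max(1, (workers_running + 3) / 4);
    items_in_queue >= target
}
```

With a rule of this shape, an instance that only ever holds one or two job tokens keeps very few modules in memory, while an instance that acquires many tokens raises its target as its worker count grows.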

Set path of the compile unit to the source directory

As part of the effort to implement split dwarf debug info, we ended up

setting the compile unit location to the output directory rather than

the source directory. Furthermore, it seems like we failed to remap the

prefixes for this as well!

The desired behaviour is to instead set the `DW_AT_GNU_dwo_name` to a

path relative to the compiler's working directory. This still allows

debuggers to find the split dwarf files, while not changing the

behaviour of the code that is compiling with regular debug info, and not

changing the compiler's behaviour with regards to reproducibility.
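
To illustrate what "a path relative to the compiler's working directory" means in practice, here is a small sketch using only `std::path`. The helper `dwo_name_relative_to` is hypothetical and does not reflect how the compiler actually computes `DW_AT_GNU_dwo_name`.

```rust
use std::path::{Path, PathBuf};

/// Returns `dwo_path` relative to `working_dir` when it lies underneath it,
/// and unchanged otherwise. Hypothetical helper for illustration only.
pub fn dwo_name_relative_to(working_dir: &Path, dwo_path: &Path) -> PathBuf {
    dwo_path
        .strip_prefix(working_dir)
        .unwrap_or(dwo_path) // not under the working directory: keep as-is
        .to_path_buf()
}
```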

Fixes #82074

cc `@alexcrichton` `@davidtwco`

remove redundant option/result wrapping of return values

If a function always returns `Ok(something)`, we can return `something` directly and remove the corresponding error handling in the callers.

clippy::unnecessary_wraps
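
A before/after sketch of the pattern, using made-up function names rather than anything from the compiler:

```rust
/// Before: the `Result` wrapper is redundant because no path returns `Err`.
pub fn trimmed_len_wrapped(input: &str) -> Result<usize, String> {
    Ok(input.trim().len())
}

/// The caller has to handle an error that can never occur.
pub fn caller_before(input: &str) -> usize {
    match trimmed_len_wrapped(input) {
        Ok(len) => len,
        Err(_) => 0, // dead branch
    }
}

/// After: return the value directly.
pub fn trimmed_len(input: &str) -> usize {
    input.trim().len()
}

/// The caller shrinks to a plain call.
pub fn caller_after(input: &str) -> usize {
    trimmed_len(input)
}
```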

Add new `rustc` target for Arm64 machines that can target the iphonesimulator

This PR lands a new target (`aarch64-apple-ios-sim`) that targets the arm64 iPhone simulator, previously unreachable from Apple Silicon machines.

resolves #81632

r? `@shepmaster`

Fix debug information for function arguments of type &str or slice.

Issue details:

When lowering MIR to LLVM IR, the compiler decomposes every &str and slice argument into a data pointer and a usize. Then, the original argument is reconstructed from the pointer and the usize arguments in the body of the function that owns it. Since the original argument is declared in the body of a function, it should be marked as a LocalVariable instead of an ArgumentVariable. This confusion leaves MSVC debuggers unable to visualize &str and slice arguments correctly. (See https://github.com/rust-lang/rust/issues/81894 for more details).

Fix details:

Make sure that the debug variable for every &str and slice argument is marked as LocalVariable instead of ArgumentVariable in computing_per_local_var_debug_info. This change has been verified with the VS Code debugger, the VS debugger, WinDbg and LLDB.

Don't fail to remove files if they are missing

In the backend we may want to remove certain temporary files, but in

certain other situations these files might not be produced in the first

place. We don't exactly care about that, and the intent is really that

these files are gone after a certain point in the backend.

Here we unify the backend file removing calls to use `ensure_removed`

which will attempt to delete a file, but will not fail if it does not

exist (anymore).

The tradeoff to this approach is, of course, that we may miss instances

where we are attempting to remove files at wrong paths due to some bug:

compilation would silently succeed but the temporary files would remain

there somewhere.
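
The behaviour described above can be sketched with the standard library alone. Note that this is a simplified stand-in: the signature below is an assumption for this example and is not claimed to match the compiler's own `ensure_removed` helper.

```rust
use std::fs;
use std::io;
use std::path::Path;

/// Deletes `path`, treating "file does not exist" as success.
/// Simplified sketch; the signature is an assumption for this example.
pub fn ensure_removed(path: &Path) -> io::Result<()> {
    match fs::remove_file(path) {
        // The file is already gone, which is the state we wanted anyway.
        Err(e) if e.kind() == io::ErrorKind::NotFound => Ok(()),
        // Success, or any other error (permissions, path is a directory, ...).
        other => other,
    }
}
```

Other I/O errors are still reported, so only the missing-file case is silenced. That is also exactly where the tradeoff mentioned above comes from: a wrong path looks the same as a file that was never produced.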

Only store a LocalDefId in some HIR nodes

Some HIR nodes are guaranteed to be HIR owners: Item, TraitItem, ImplItem, ForeignItem and MacroDef.

As a consequence, we do not need to store the `HirId`'s `local_id`, and we can directly store a `LocalDefId`.

This lets us avoid a bit of the dance with the `tcx.hir().local_def_id` and `tcx.hir().local_def_id_to_hir_id` mappings.

For better throughput during parallel processing by LLVM, we used to sort

CGUs largest to smallest. This would lead to better thread utilization

by, for example, preventing a large CGU from being processed last and

having only one LLVM thread working while the rest remained idle.

However, this strategy would lead to high memory usage, as it meant the

LLVM-IR for all of the largest CGUs would be resident in memory at once.

Instead, we can compromise by ordering CGUs such that the largest and

smallest are first, second largest and smallest are next, etc. If there

are large size variations, this can reduce memory usage significantly.
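
The ordering described above can be sketched as follows. This works on plain size estimates rather than real CGUs, and `interleave_by_size` is a hypothetical name used only for this example.

```rust
/// Reorders size estimates so that the largest and smallest come first, then
/// the second largest and second smallest, and so on.
/// Hypothetical helper operating on plain numbers instead of real CGUs.
pub fn interleave_by_size(mut sizes: Vec<u64>) -> Vec<u64> {
    sizes.sort_unstable(); // ascending: smallest at the front, largest at the back
    let mut out = Vec::with_capacity(sizes.len());
    let (mut lo, mut hi) = (0, sizes.len());
    while lo < hi {
        hi -= 1;
        out.push(sizes[hi]); // largest remaining
        if lo < hi {
            out.push(sizes[lo]); // smallest remaining
            lo += 1;
        }
    }
    out
}
```

For the sizes `[5, 1, 9, 3, 7]` this yields `[9, 1, 7, 3, 5]`, so each large unit is paired with a small one instead of all large units being scheduled together.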

Indicate both start and end of pass RSS in time-passes output

Previously, only the end of pass RSS was indicated. This could easily

lead one to believe that the change in RSS from one pass to the next was

attributable to the second pass, when in fact it occurred between the

end of the first pass and the start of the second.

Also, improve alignment of columns.

Sample of output:

```

time: 0.739; rss: 607MB -> 637MB item_types_checking

time: 8.429; rss: 637MB -> 775MB item_bodies_checking

time: 11.063; rss: 470MB -> 775MB type_check_crate

time: 0.232; rss: 775MB -> 777MB match_checking

time: 0.139; rss: 777MB -> 779MB liveness_and_intrinsic_checking

time: 0.372; rss: 775MB -> 779MB misc_checking_2

time: 8.188; rss: 779MB -> 1019MB MIR_borrow_checking

time: 0.062; rss: 1019MB -> 1021MB MIR_effect_checking

```
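
A line in this style could be produced by a formatter along the following lines. The function name and the column widths are assumptions made for this sketch, not the values used by the compiler.

```rust
/// Formats one time-passes style line with start and end RSS.
/// Column widths are assumptions chosen for this sketch.
pub fn format_pass(seconds: f64, rss_start_mb: u64, rss_end_mb: u64, what: &str) -> String {
    format!(
        "time: {:>7.3}; rss: {:>4}MB -> {:>4}MB {}",
        seconds, rss_start_mb, rss_end_mb, what
    )
}
```

Right-aligning the numeric fields keeps the pass names in a single column as long as the values fit within the chosen widths.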

codegen: assume constants cannot fail to evaluate

https://github.com/rust-lang/rust/pull/80579 landed, so we can finally remove this old hack from codegen and instead assume that consts never fail to evaluate. :)

r? `@oli-obk`