- [x] Removed `?const` and changed uses of `?const`

- [x] Added `~const` to the AST. It is gated behind const_trait_impl.

- [x] Validate `~const` in ast_validation.

- [ ] Add enum `BoundConstness` to the HIR. (With variants `NotConst` and `ConstIfConst` allowing future extensions)

- [ ] Adjust trait selection and pre-existing code to use `BoundConstness`.

- [ ] Optional steps (*for this PR, obviously*)

- [ ] Fix #88155

- [ ] Do something with constness bounds in chalk

Use if-let guards in the codebase and various other pattern cleanups

Dogfooding if-let guards as experimentation for the feature.

Tracking issue #51114. Conflicts with #87937.

rfc3052 followup: Remove authors field from Cargo manifests

Since RFC 3052 soft deprecated the authors field, hiding it from

crates.io, docs.rs, and making Cargo not add it by default, and it is

not generally up to date/useful information for contributors, we may as well

remove it from crates in this repo.

Since RFC 3052 soft deprecated the authors field anyway, hiding it from

crates.io, docs.rs, and making Cargo not add it by default, and it is

not generally up to date/useful information, we should remove it from

crates in this repo.

The special case breaks several useful invariants (`ExpnId`s are

globally unique, and never change). This special case

was added back in 2016 in https://github.com/rust-lang/rust/pull/34355

Stabilize "RangeFrom" patterns in 1.55

Implements a partial stabilization of #67264 and #37854.

Reference PR: https://github.com/rust-lang/reference/pull/900

# Stabilization Report

This stabilizes the `X..` pattern, shown as such, offering an exhaustive match for unsigned integers:

```rust
match x as u32 {
    0 => println!("zero!"),
    1.. => println!("positive number!"),
}
```

Currently if a Rust author wants to write such a match on an integer, they must use `1..={integer}::MAX` . By allowing a "RangeFrom" style pattern, this simplifies the match to not require the MAX path and thus not require specifically repeating the type inside the match, allowing for easier refactoring. This is particularly useful for instances like the above case, where different behavior on "0" vs. "1 or any positive number" is desired, and the actual MAX is unimportant.
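As a rough illustration of the refactoring benefit described above, here is the same match written both ways. The function names `classify_with_max` and `classify_open` are made up for this sketch and do not come from the compiler or the tests in this PR.

```rust
/// Pre-stabilization spelling: the upper bound repeats the integer type.
fn classify_with_max(x: u32) -> &'static str {
    match x {
        0 => "zero",
        1..=u32::MAX => "positive",
    }
}

/// With a "RangeFrom" pattern the type name no longer appears in the match,
/// so changing the parameter type needs no edit inside the body.
fn classify_open(x: u32) -> &'static str {
    match x {
        0 => "zero",
        1.. => "positive",
    }
}
```

Both functions are exhaustive without a wildcard arm, so exhaustiveness checking still applies if an arm is later removed.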

Notably, this excepts slice patterns which include half-open ranges from stabilization, as the wisdom of those is still subject to some debate.

## Practical Applications

Instances of this specific usage have appeared in the compiler:

- 16143d1067/compiler/rustc_middle/src/ty/inhabitedness/mod.rs (L219)
- 673d0db5e3/compiler/rustc_ty_utils/src/ty.rs (L524)

And I have noticed there are also a handful of "in the wild" users who have deployed it to similar effect, especially in the case of rejecting any value of a certain number or greater. It simply makes it much more ergonomic to write an irrefutable match, as done in Katholieke Universiteit Leuven's [SCALE and MAMBA project](05e5db00d5/WebAssembly/scale_std/src/fixed_point.rs (L685-L695)).

## Tests

There were already many tests in [src/test/ui/half-open-range-patterns](90a2e5e3fe/src/test/ui/half-open-range-patterns), as well as [generic pattern tests that test the `exclusive_range_pattern` feature](673d0db5e3/src/test/ui/pattern/usefulness/integer-ranges/reachability.rs), many dating back to the feature's introduction and still standing today. However, this stabilization comes with some additional tests to explore the... sometimes interesting behavior of interactions with other patterns. For example, there is at least a mild diagnostic improvement in some edge cases: before now, the pattern `0..=(5+1)` would hit the `half_open_range_patterns` feature gate and could thus emit the request to enable the feature flag, while also emitting the "inclusive range with no end" diagnostic. There is no intent to allow an `X..=` pattern that I am aware of, so removing the flag request is a strict improvement. The arrival of the `J | K` "or" pattern also enables some odd formations.

Some of the behavior tested for here is derived from experiments in this [Playground](https://play.rust-lang.org/?version=nightly&mode=debug&edition=2018&gist=58777b3c715c85165ac4a70d93efeefc) example, linked at https://github.com/rust-lang/rust/issues/67264#issuecomment-812770692, which may be useful to reference to observe the current behavior more closely.

In addition, tests constituting an explanation of the "slicing range patterns" syntax issue are included in this PR.

## Desiderata

The exclusive range patterns and half-open range patterns are fairly strongly requested by many authors, as they make some patterns much more natural to write, but there is disagreement regarding the "closed" exclusive range pattern or the "RangeTo" pattern, especially where it creates "off by one" gaps in the presence of a "catch-all" wildcard case. Also, there are obviously no range analyses in place that will force diagnostics for e.g. highly overlapping matches. I believe these should be warned on, ideally, and I think it would be reasonable to consider such a blocker to stabilizing this feature, but there is no technical issue with the feature as-is from the purely syntactic perspective as such overlapping or missed matches can already be generated today with such a catch-all case. And part of the "point" of the feature, at least from my view, is to make it easier to omit wildcard matches: a pattern with such an "open" match produces an irrefutable match and does not need the wild card case, making it easier to benefit from exhaustiveness checking.

## History

- Implemented:

- Partially via exclusive ranges: https://github.com/rust-lang/rust/pull/35712

- Fully with half-open ranges: https://github.com/rust-lang/rust/pull/67258

- Unresolved Questions:

- The precedence concerns of https://github.com/rust-lang/rust/pull/48501 were considered as likely requiring adjustment but probably wanting a uniform consistent change across all pattern styles, given https://github.com/rust-lang/rust/issues/67264#issuecomment-720711656, but it is still unknown what changes might be desired

- How we want to handle slice patterns in ranges seems to be an open question still, as witnessed in the discussion of this PR!

I checked but couldn't actually find an RFC for this, and given "approved provisionally by lang team without an RFC", I believe this might require an RFC before it can land? I'm unsure of the procedure here, since this stabilizes only a subset of a syntax feature.

r? `@scottmcm`

Fix ICE when `main` is declared in an `extern` block

Changes in #84401 to implement `imported_main` changed how the crate entry point is found, and a declared `main` in an `extern` block was detected erroneously. This was causing the ICE described in #86110.

This PR adds a check for this case and emits an error instead. Previously a `main` declaration in an `extern` block was not detected as an entry point at all, so emitting an error shouldn't break anything that worked previously. Stable 1.52.1 demonstrates this: it reports a `` `main` function not found`` error.

Fixes #86110

parser: Ensure that all nonterminals have tokens after parsing

`parse_nonterminal` should always result in something with tokens.

This requirement wasn't satisfied in two cases:

- `stmt` nonterminal with expression statements (e.g. `0`, or `{}`, or `path + 1`) because `fn parse_stmt_without_recovery` forgot to propagate `force_collect` in some cases.

- `expr` nonterminal with expressions with built-in attributes (e.g. `#[allow(warnings)] 0`) due to an incorrect optimization in `fn parse_expr_force_collect`: it assumed that all expressions starting with `#` have their tokens collected during parsing, but that's not true if all the attributes on that expression are built-in and inert.

(Discovered when trying to implement eager `cfg` expansion for all attributes https://github.com/rust-lang/rust/pull/83824#issuecomment-817317170.)

r? `@Aaron1011`

further split up const_fn feature flag

This continues the work on splitting up `const_fn` into separate feature flags:

* `const_fn_trait_bound` for `const fn` with trait bounds

* `const_fn_unsize` for unsizing coercions in `const fn` (looks like only `dyn` unsizing is still guarded here)

I don't know if there are even any things left that `const_fn` guards... at least libcore and liballoc do not need it any more.

`@oli-obk` are you currently able to do reviews?

This PR modifies the macro expansion infrastructure to handle attributes

in a fully token-based manner. As a result:

* Derive macros no longer lose spans when their input is modified

by eager cfg-expansion. This is accomplished by performing eager

cfg-expansion on the token stream that we pass to the derive

proc-macro

* Inner attributes now preserve spans in all cases, including when we

have multiple inner attributes in a row.

This is accomplished through the following changes:

* New structs `AttrAnnotatedTokenStream` and `AttrAnnotatedTokenTree` are introduced.

These are very similar to a normal `TokenTree`, but they also track

the position of attributes and attribute targets within the stream.

They are built when we collect tokens during parsing.

An `AttrAnnotatedTokenStream` is converted to a regular `TokenStream` when

we invoke a macro.

* Token capturing and `LazyTokenStream` are modified to work with

`AttrAnnotatedTokenStream`. A new `ReplaceRange` type is introduced, which

is created during the parsing of a nested AST node to make the 'outer'

AST node aware of the attributes and attribute target stored deeper in the token stream.

* When we need to perform eager cfg-expansion (either due to `#[derive]` or `#[cfg_eval]`),

we tokenize and reparse our target, capturing additional information about the locations of

`#[cfg]` and `#[cfg_attr]` attributes at any depth within the target.

This is a performance optimization, allowing us to perform less work

in the typical case where captured tokens never have eager cfg-expansion run.

Extract attribute name once and match it against symbols that are being

validated, instead of using `Session::check_name` for each symbol

individually.

Assume that all validated attributes are used, instead of marking them

as such, since the attribute check should be exhaustive.
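A minimal sketch of the "extract the name once, then match" shape described above. This is not the compiler's code: `Attr`, `Check`, and `check_attr` are hypothetical, and plain strings stand in for interned symbols.

```rust
/// Hypothetical stand-in for a parsed attribute; rustc uses interned symbols.
struct Attr<'a> {
    name: &'a str,
}

/// Hypothetical outcome of validating one attribute.
#[derive(Debug, PartialEq)]
enum Check {
    Inline,
    Cold,
    Other,
}

fn check_attr(attr: &Attr<'_>) -> Check {
    // Read the name once and dispatch with a single `match`,
    // instead of running one separate comparison per known attribute.
    match attr.name {
        "inline" => Check::Inline,
        "cold" => Check::Cold,
        _ => Check::Other,
    }
}
```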

Use AnonConst for asm! constants

This replaces the old system which used explicit promotion. See #83169 for more background.

The syntax for `const` operands is still the same as before: `const <expr>`.

Fixes #83169

Because the implementation is heavily based on inline consts, we suffer from the same issues:

- We lose the ability to use expressions derived from generics. See the deleted tests in `src/test/ui/asm/const.rs`.

- We are hitting the same ICEs as inline consts, for example #78174. It is unlikely that we will be able to stabilize this before inline consts are stabilized.

Found with https://github.com/est31/warnalyzer.

Dubious changes:

- Is anyone else using rustc_apfloat? I feel weird completely deleting

x87 support.

- Maybe some of the dead code in rustc_data_structures, in case someone

wants to use it in the future?

- Don't change rustc_serialize

I plan to scrap most of the json module in the near future (see

https://github.com/rust-lang/compiler-team/issues/418) and fixing the

tests needed more work than I expected.

TODO: check if any of the comments on the deleted code should be kept.

Allow registering tool lints with `register_tool`

Previously, there was no way to add a custom tool prefix, even if the tool

itself had registered a lint:

```rust
#![feature(register_tool)]
#![register_tool(xyz)]
#![warn(xyz::my_lint)]
```

```
$ rustc unknown-lint.rs --crate-type lib
error[E0710]: an unknown tool name found in scoped lint: `xyz::my_lint`
 --> unknown-lint.rs:3:9
  |
3 | #![warn(xyz::my_lint)]
  |         ^^^
```

This allows opting-in to lints from other tools using `register_tool`.

cc https://github.com/rust-lang/rust/issues/66079#issuecomment-788589193, `@chorman0773`

r? `@petrochenkov`

Extend `proc_macro_back_compat` lint to `procedural-masquerade`

We now lint on *any* use of `procedural-masquerade` crate. While this

crate still exists, its main reverse dependency (`cssparser`) no longer

depends on it. Any crates still depending on it should stop doing so, as

it only exists to support very old Rust versions.

If a crate actually needs to support old versions of rustc via

`procedural-masquerade`, then they'll just need to accept the warning

until we remove it entirely (at the same time as the back-compat hack).

The latest version of `procedural-masquerade` does not work with the

latest rustc, but trying to check for the version seems like more

trouble than it's worth.

While working on this, I realized that the `proc-macro-hack` check was

never actually doing anything. The corresponding enum variant in

`proc-macro-hack` is named `Value` or `Nested` - it has never been

called `Input`. Due to a strange Crater issue, the Crater run that

tested adding this did *not* end up testing it - some of the crates that

would have failed did not actually have their tests checked, making it

seem as though the `proc-macro-hack` check was working.

The Crater issue is being discussed at

https://rust-lang.zulipchat.com/#narrow/stream/242791-t-infra/topic/Nearly.20identical.20Crater.20runs.20processed.20a.20crate.20differently/near/230406661

Despite the `proc-macro-hack` check not actually doing anything, we

haven't gotten any reports from users about their build being broken.

I went ahead and removed it entirely, since it's clear that no one is

being affected by the `proc-macro-hack` regression in practice.

ast/hir: Rename field-related structures

I always forget what `ast::Field` and `ast::StructField` mean despite working with the AST for a long time, so this PR changes the naming to be less confusing and more consistent.

- `StructField` -> `FieldDef` ("field definition")

- `Field` -> `ExprField` ("expression field", not "field expression")

- `FieldPat` -> `PatField` ("pattern field", not "field pattern")

Various visiting and other methods working with the fields are renamed correspondingly too.
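For readers who mix these up as well, here is a small user-level snippet annotated with which source construct each new name refers to. The `Point` type and `sum_of_fields` function are just an illustration, not code from this PR.

```rust
struct Point {
    x: i32, // field definition: `FieldDef` (was `StructField`)
    y: i32, // another `FieldDef`
}

fn sum_of_fields() -> i32 {
    // `x: 1` and `y: 2` are expression fields: `ExprField` (was `Field`)
    let p = Point { x: 1, y: 2 };
    // `x` and `y: renamed` are pattern fields: `PatField` (was `FieldPat`)
    let Point { x, y: renamed } = p;
    x + renamed
}
```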

The second commit reduces the size of `ExprKind` by boxing fields of `ExprKind::Struct` in preparation for https://github.com/rust-lang/rust/pull/80080.

Previously, there was no way to add a custom tool prefix, even if the tool

itself had registered a lint:

```
#![feature(register_tool)]
#![register_tool(xyz)]
#![warn(xyz::my_lint)]
```

```
$ rustc unknown-lint.rs --crate-type lib
error[E0710]: an unknown tool name found in scoped lint: `xyz::my_lint`
 --> unknown-lint.rs:3:9
  |
3 | #![warn(xyz::my_lint)]
  |         ^^^
```

This allows opting-in to lints from other tools using `register_tool`.

StructField -> FieldDef ("field definition")

Field -> ExprField ("expression field", not "field expression")

FieldPat -> PatField ("pattern field", not "field pattern")

Also rename visiting and other methods working on them.

We now lint on *any* use of `procedural-masquerade` crate. While this

crate still exists, its main reverse dependency (`cssparser`) no longer

depends on it. Any crates still depending on it should stop doing so, as

it only exists to support very old Rust versions.

If a crate actually needs to support old versions of rustc via

`procedural-masquerade`, then they'll just need to accept the warning

until we remove it entirely (at the same time as the back-compat hack).

The latest version of `procedural-masquerade` does not work with the

latest rustc, but trying to check for the version seems like more

trouble than it's worth.

While working on this, I realized that the `proc-macro-hack` check was

never actually doing anything. The corresponding enum variant in

`proc-macro-hack` is named `Value` or `Nested` - it has never been

called `Input`. Due to a strange Crater issue, the Crater run that

tested adding this did *not* end up testing it - some of the crates that

would have failed did not actually have their tests checked, making it

seem as though the `proc-macro-hack` check was working.

The Crater issue is being discussed at

https://rust-lang.zulipchat.com/#narrow/stream/242791-t-infra/topic/Nearly.20identical.20Crater.20runs.20processed.20a.20crate.20differently/near/230406661

Despite the `proc-macro-hack` check not actually doing anything, we

haven't gotten any reports from users about their build being broken.

I went ahead and removed it entirely, since it's clear that no one is

being affected by the `proc-macro-hack` regression in practice.

Introduce `proc_macro_back_compat` lint, and emit for `time-macros-impl`

Now that future-incompat-report support has landed in nightly Cargo, we

can start to make progress towards removing the various proc-macro

back-compat hacks that have accumulated in the compiler.

This PR introduces a new lint `proc_macro_back_compat`, which results in

a future-incompat-report entry being generated. All proc-macro

back-compat warnings will be grouped under this lint. Note that this

lint will never actually become a hard error - instead, we will remove

the special cases for various macros, which will cause older versions of

those crates to emit some other error.

I've added code to fire this lint for the `time-macros-impl` case. This

is the easiest case out of all of our current back-compat hacks - the

crate was renamed to `time-macros`, so seeing a filename with

`time-macros-impl` guarantees that an older version of the parent `time`

crate is in use.

When Cargo's future-incompat-report feature gets stabilized, affected

users will start to see future-incompat warnings when they build their

crates.
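The filename check can be pictured roughly like this. It is a simplified sketch rather than the code added in this PR: `looks_like_time_macros_impl` is a hypothetical helper, and it works on a plain `std::path::Path` instead of the compiler's own filename types.

```rust
use std::path::Path;

/// Returns true if any path component starts with `time-macros-impl`,
/// e.g. a registry checkout directory such as `time-macros-impl-0.1.1`.
fn looks_like_time_macros_impl(path: &Path) -> bool {
    path.components().any(|component| {
        component
            .as_os_str()
            .to_str()
            .map_or(false, |name| name.starts_with("time-macros-impl"))
    })
}
```

Because the crate was renamed, a path for the newer `time-macros` crate does not match this prefix.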

Change x64 size checks to not apply to x32.

Rust contains various size checks conditional on target_arch = "x86_64", but these checks were never intended to apply to x86_64-unknown-linux-gnux32. Add target_pointer_width = "64" to the conditions.
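A sketch of what such a condition looks like. The `Node` type and the plain `const` assertion are stand-ins chosen for this example; the checks touched by this change may be written differently.

```rust
use std::mem::size_of;

/// Hypothetical two-word type used only for this illustration.
struct Node {
    next: Option<Box<Node>>,
    len: usize,
}

// Checked only where pointers and `usize` are 8 bytes wide. On x32
// (`x86_64-unknown-linux-gnux32`) `target_arch` is still "x86_64" but
// `target_pointer_width` is "32", so the assertion is skipped there.
#[cfg(all(target_arch = "x86_64", target_pointer_width = "64"))]
const _: () = assert!(size_of::<Node>() == 16);

/// Size of `Node` measured in pointer-sized words.
fn node_size_in_words() -> usize {
    size_of::<Node>() / size_of::<usize>()
}
```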

Implement built-in attribute macro `#[cfg_eval]` + some refactoring

This PR implements a built-in attribute macro `#[cfg_eval]` as it was suggested in https://github.com/rust-lang/rust/pull/79078 to avoid `#[derive()]` without arguments being abused as a way to configure input for other attributes.

The macro is used for eagerly expanding all `#[cfg]` and `#[cfg_attr]` attributes in its input ("fully configuring" the input).

The effect is identical to the effect of `#[derive(Foo, Bar)]`, which also fully configures its input before passing it to macros `Foo` and `Bar`, but unlike `#[derive]`, `#[cfg_eval]` can be applied to any syntax nodes supporting macro attributes, not only certain items.

`cfg_eval` was the first name suggested in https://github.com/rust-lang/rust/pull/79078, but other alternatives are also possible, e.g. `cfg_expand`.

```rust
#[cfg_eval]
#[my_attr] // Receives `struct S {}` as input, the field is configured away by `#[cfg_eval]`
struct S {
    #[cfg(FALSE)]
    field: u8,
}
```

Tracking issue: https://github.com/rust-lang/rust/issues/82679

Let a portion of DefPathHash uniquely identify the DefPath's crate.

This makes it possible to map directly from a `DefPathHash` to the crate it originates from, without constructing side tables to do that mapping -- something that is useful for incremental compilation where we deal with `DefPathHash` instead of `DefId` a lot.

It also makes it possible to reliably and cheaply check for `DefPathHash` collisions, which allows the compiler to gracefully abort compilation instead of running into a subsequent ICE at some random place in the code.
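To make the idea concrete, here is a toy model of the split hash and of a collision check. It only borrows the names `DefPathHash` and `StableCrateId`: the field layout, the constructor, and `first_collision` are assumptions made for this sketch and not the compiler's definitions.

```rust
use std::collections::HashMap;

/// Toy crate identifier (illustrative only).
#[derive(Clone, Copy, PartialEq, Eq, Hash, Debug)]
struct StableCrateId(u64);

/// Toy model: two 64-bit halves, the first identifying the crate.
#[derive(Clone, Copy, PartialEq, Eq, Hash, Debug)]
struct DefPathHash {
    crate_part: u64,
    local_part: u64,
}

impl DefPathHash {
    fn new(krate: StableCrateId, local_hash: u64) -> Self {
        DefPathHash { crate_part: krate.0, local_part: local_hash }
    }

    /// The owning crate is read straight from the hash, with no side table.
    fn stable_crate_id(self) -> StableCrateId {
        StableCrateId(self.crate_part)
    }
}

/// Returns the first pair of paths that share a hash, if any.
fn first_collision<'a>(defs: &[(&'a str, DefPathHash)]) -> Option<(&'a str, &'a str)> {
    let mut seen: HashMap<DefPathHash, &'a str> = HashMap::new();
    for &(path, hash) in defs {
        // `insert` hands back the previous entry when the key was already present.
        if let Some(previous) = seen.insert(hash, path) {
            return Some((previous, path));
        }
    }
    None
}
```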

The following new piece of documentation describes the most interesting aspects of the changes:

```rust

/// A `DefPathHash` is a fixed-size representation of a `DefPath` that is

/// stable across crate and compilation session boundaries. It consists of two

/// separate 64-bit hashes. The first uniquely identifies the crate this

/// `DefPathHash` originates from (see [StableCrateId]), and the second

/// uniquely identifies the corresponding `DefPath` within that crate. Together

/// they form a unique identifier within an entire crate graph.

///

/// There is a very small chance of hash collisions, which would mean that two

/// different `DefPath`s map to the same `DefPathHash`. Proceeding compilation

/// with such a hash collision would very probably lead to an ICE and, in the

/// worst case, to a silent mis-compilation. The compiler therefore actively

/// and exhaustively checks for such hash collisions and aborts compilation if

/// it finds one.

///

/// `DefPathHash` uses 64-bit hashes for both the crate-id part and the

/// crate-internal part, even though it is likely that there are many more

/// `LocalDefId`s in a single crate than there are individual crates in a crate

/// graph. Since we use the same number of bits in both cases, the collision

/// probability for the crate-local part will be quite a bit higher (though

/// still very small).

///

/// This imbalance is not by accident: A hash collision in the

/// crate-local part of a `DefPathHash` will be detected and reported while

/// compiling the crate in question. Such a collision does not depend on

/// outside factors and can be easily fixed by the crate maintainer (e.g. by

/// renaming the item in question or by bumping the crate version in a harmless

/// way).

///

/// A collision between crate-id hashes on the other hand is harder to fix

/// because it depends on the set of crates in the entire crate graph of a

/// compilation session. Again, using the same crate with a different version

/// number would fix the issue with a high probability -- but that might be

/// easier said than done if the crates in question are dependencies of

/// third-party crates.

///

/// That being said, given a high quality hash function, the collision

/// probabilities in question are very small. For example, for a big crate like

/// `rustc_middle` (with ~50000 `LocalDefId`s as of the time of writing) there

/// is a probability of roughly 1 in 14,750,000,000 of a crate-internal

/// collision occurring. For a big crate graph with 1000 crates in it, there is

/// a probability of 1 in 36,890,000,000,000 of a `StableCrateId` collision.

```
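The two figures in the doc comment appear consistent with the usual birthday-bound approximation, P(collision) ≈ n² / 2^(bits + 1). The source does not say how they were computed, so treat that as an assumption. The helper `one_in` below is made up for this check.

```rust
/// Birthday-bound approximation: with `n` items hashed to `bits` bits,
/// P(collision) ≈ n² / 2^(bits + 1). Returns the "1 in X" odds, i.e. 1 / P.
fn one_in(n: f64, bits: i32) -> f64 {
    2f64.powi(bits + 1) / (n * n)
}
```

With `n = 50_000` and 64 bits this gives roughly 1.48e10, and with `n = 1_000` roughly 3.69e13, which lines up with the quoted 1 in 14,750,000,000 and 1 in 36,890,000,000,000.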

Given the probabilities involved I hope that no one will ever actually see the error messages. Nonetheless, I'd be glad about some feedback on how to improve them. Should we create a GH issue describing the problem and possible solutions to point to? Or a page in the rustc book?

r? `@pnkfelix` (feel free to re-assign)

Rust contains various size checks conditional on target_arch = "x86_64",

but these checks were never intended to apply to

x86_64-unknown-linux-gnux32. Add target_pointer_width = "64" to the

conditions.

- Rename `broken_intra_doc_links` to `rustdoc::broken_intra_doc_links`

- Ensure that the old lint names still work and give deprecation errors

- Register lints even when running doctests

Otherwise, all `rustdoc::` lints would be ignored.

- Register all existing lints as removed

This unfortunately doesn't work with `register_renamed` because tool

lints have not yet been registered when rustc is running. For similar

reasons, `check_backwards_compat` doesn't work either. Call

`register_removed` directly instead.

- Fix fallout

+ Rustdoc lints for compiler/

+ Rustdoc lints for library/

Note that this does *not* suggest `rustdoc::broken_intra_doc_links` for

`rustdoc::intra_doc_link_resolution_failure`, since there was no time

when the latter was valid.

When token-based attribute handling is implemented in #80689,

we will need to access tokens from `HasAttrs` (to perform

cfg-stripping), and we will need to access attributes from `HasTokens` (to

construct a `PreexpTokenStream`).

This PR merges the `HasAttrs` and `HasTokens` traits into a new

`AstLike` trait. The previous `HasAttrs` impls from `Vec<Attribute>` and `AttrVec`

are removed - they aren't attribute targets, so the impls never really

made sense.

Crate root is sufficiently different from `mod` items, at least at syntactic level.

Also remove customization point for "`mod` item or crate root" from AST visitors.

Rollup of 11 pull requests

Successful merges:

- #80523 (#[doc(inline)] sym_generated)

- #80920 (Visit more targets when validating attributes)

- #81720 (Updated smallvec version due to RUSTSEC-2021-0003)

- #81891 ([rustdoc-json] Make `header` a vec of modifiers, and FunctionPointer consistent)

- #81912 (Implement the precise analysis pass for lint `disjoint_capture_drop_reorder`)

- #81914 (Fixing bad suggestion for `_` in `const` type when a function #81885)

- #81919 (BTreeMap: fix internal comments)

- #81927 (Add a regression test for #32498)

- #81965 (Fix MIR pretty printer for non-local DefIds)

- #82029 (Use debug log level for developer oriented logs)

- #82056 (fix ice (#82032))

Failed merges:

r? `@ghost`

`@rustbot` modify labels: rollup

This is a pure refactoring split out from #80689.

It represents the most invasive part of that PR, requiring changes in

every caller of `parse_outer_attributes`.

In order to eagerly expand `#[cfg]` attributes while preserving the

original `TokenStream`, we need to know the range of tokens that

corresponds to every attribute target. This is accomplished by making

`parse_outer_attributes` return an opaque `AttrWrapper` struct. An

`AttrWrapper` must be converted to a plain `AttrVec` by passing it to

`collect_tokens_trailing_token`. This makes it difficult to accidentally

construct an AST node with attributes without calling `collect_tokens_trailing_token`,

since AST nodes store an `AttrVec`, not an `AttrWrapper`.

As a result, we now call `collect_tokens_trailing_token` for attribute

targets which only support inert attributes, such as generic arguments

and struct fields. Currently, the constructed `LazyTokenStream` is

simply discarded. Future PRs will record the token range corresponding

to the attribute target, allowing those tokens to be removed from an

enclosing `collect_tokens_trailing_token` call if necessary.
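The "opaque wrapper" idea can be shown with a toy module. The names `AttrWrapper`, `parse_outer_attributes`, and `collect_tokens_trailing_token` are borrowed from the description above, but the signatures, the string-based tokens, and the `Node` type are invented for this sketch and differ from the real parser.

```rust
mod parser {
    /// Opaque wrapper: the field is private, so code outside this module
    /// cannot pull the attributes out directly.
    pub struct AttrWrapper {
        attrs: Vec<String>,
    }

    /// Toy AST node that stores plain attributes plus its captured tokens.
    pub struct Node {
        pub attrs: Vec<String>,
        pub tokens: Vec<String>,
    }

    /// Toy parser: treats every leading `#[...]` item as an outer attribute
    /// and reports how many input items it consumed.
    pub fn parse_outer_attributes(input: &[&str]) -> (AttrWrapper, usize) {
        let attrs: Vec<String> = input
            .iter()
            .take_while(|t| t.starts_with("#["))
            .map(|t| t.to_string())
            .collect();
        let consumed = attrs.len();
        (AttrWrapper { attrs }, consumed)
    }

    /// The only way to unwrap an `AttrWrapper`: the target's tokens are
    /// recorded in the same step, so they cannot be forgotten.
    pub fn collect_tokens_trailing_token(wrapper: AttrWrapper, target: &[&str]) -> Node {
        Node {
            attrs: wrapper.attrs,
            tokens: target.iter().map(|t| t.to_string()).collect(),
        }
    }
}
```

Since `Node` stores a plain `Vec<String>` and the wrapper's field is private, building a node with attributes requires going through `collect_tokens_trailing_token`.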

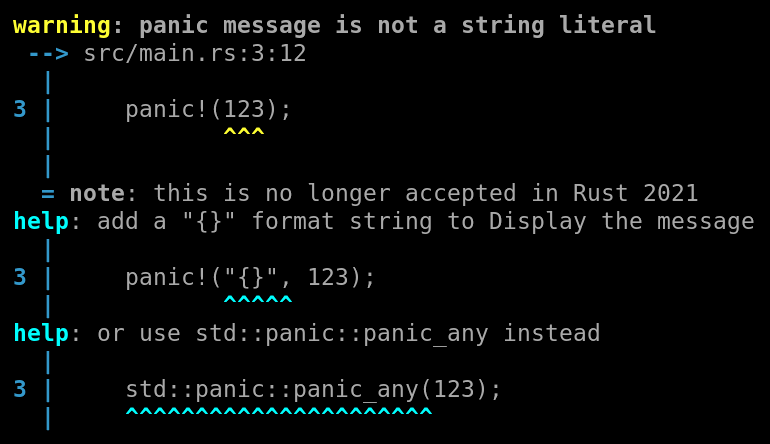

Add lint for `panic!(123)` which is not accepted in Rust 2021.

This extends the `panic_fmt` lint to warn for all cases where the first argument cannot be interpreted as a format string, as will happen in Rust 2021.

It suggests adding `"{}",` to format the message as a string. In the case of `std::panic!()`, it also suggests the recently stabilized `std::panic::panic_any()` function as an alternative.

It renames the lint to `non_fmt_panic` to match the lint naming guidelines.

This is part of #80162.
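The two suggested rewrites of `panic!(123)` behave differently with respect to the panic payload, which the following sketch shows. The helper names `payload_as_string` and `payload_as_i32` are made up for this example.

```rust
use std::panic;

/// `panic!("{}", 123)` formats the value, so the payload is a `String`.
fn payload_as_string() -> String {
    let err = panic::catch_unwind(|| {
        panic!("{}", 123);
    })
    .unwrap_err();
    err.downcast_ref::<String>().cloned().unwrap_or_default()
}

/// `panic_any(123_i32)` keeps the original value as the payload.
fn payload_as_i32() -> i32 {
    let err = panic::catch_unwind(|| {
        panic::panic_any(123_i32);
    })
    .unwrap_err();
    *err.downcast_ref::<i32>().expect("payload should be an i32")
}
```

Which one is appropriate depends on whether downstream code inspects the payload or only displays the message.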

r? `@estebank`

This makes it possible to map directly from a DefPathHash to the crate it

originates from, without constructing side tables to do that mapping.

It also makes it possible to reliably and cheaply check for DefPathHash collisions.

Set tokens on AST node in `collect_tokens`

A new `HasTokens` trait is introduced, which is used to move logic from

the callers of `collect_tokens` into the body of `collect_tokens`.

In addition to reducing duplication, this paves the way for PR #80689,

which needs to perform additional logic during token collection.

rustc_parse: Better spans for synthesized token streams

I think using the nonterminal span for synthesizing its tokens is a better approximation than using `DUMMY_SP` or the attribute span like #79472 did in `expand.rs`.

r? `@Aaron1011`

- Adds optional default values to const generic parameters in the AST

and HIR

- Parses these optional default values

- Adds a `const_generics_defaults` feature gate
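For reference, this is the surface syntax being parsed. At the time of this change it required the `const_generics_defaults` gate; the sketch assumes a toolchain where defaults on const parameters were later stabilized and are accepted without the gate. `Buffer` is a made-up type.

```rust
/// `N` falls back to 4 when the type is written without arguments.
struct Buffer<const N: usize = 4> {
    data: [u8; N],
}

impl<const N: usize> Buffer<N> {
    fn new() -> Self {
        Buffer { data: [0; N] }
    }

    fn capacity(&self) -> usize {
        self.data.len()
    }
}
```

Writing `Buffer` in type position picks up the default, while `Buffer::<16>` overrides it.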

Properly handle attributes on statements

We now collect tokens for the underlying node wrapped by `StmtKind`

instead of storing tokens directly in `Stmt`.

`LazyTokenStream` now supports capturing a trailing semicolon after it

is initially constructed. This allows us to avoid refactoring statement

parsing to wrap the parsing of the semicolon in `parse_tokens`.

Attributes on item statements

(e.g. `fn foo() { #[bar] struct MyStruct; }`) are now treated as

item attributes, not statement attributes, which is consistent with how

we handle attributes on other kinds of statements. The feature-gating

code is adjusted so that proc-macro attributes are still allowed on item

statements on stable.

Two built-in macros (`#[global_allocator]` and `#[test]`) needed to be

adjusted to support being passed `Annotatable::Stmt`.

Move lev_distance to rustc_ast, make non-generic

rustc_ast currently has a few dependencies on rustc_lexer. Ideally, an AST

would not have any dependency on its lexer, for minimizing

design-time dependencies. Breaking this dependency would also have practical

benefits, since modifying rustc_lexer would not trigger a rebuild of rustc_ast.

This commit does not remove the rustc_ast --> rustc_lexer dependency,

but it does remove one of the sources of this dependency, which is the

code that handles fuzzy matching between symbol names for making suggestions

in diagnostics. Since that code depends only on Symbol, it is easy to move

it to rustc_span. It might even be best to move it to a separate crate,

since other tools such as Cargo use the same algorithm, and simply

contain a duplicate of the code.

This changes the signature of find_best_match_for_name so that it is no

longer generic over its input. I checked the optimized binaries, and this

function was duplicated for nearly every call site, because most call sites

used short-lived iterator chains, generic over Map and such. But there's

no good reason for a function like this to be generic, since all it does

is immediately convert the generic input (the Iterator impl) to a concrete

Vec<Symbol>. This has all of the costs of generics (duplicated method bodies)

with no benefit.

Changing find_best_match_for_name to be non-generic removed about 10KB of

code from the optimized binary. I know it's a drop in the bucket, but we have

to start reducing binary size, and beginning to tame over-use of generics

is part of that.
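To illustrate the generic versus non-generic trade-off described above, here is a minimal sketch. The names `Sym`, `best_match_generic`, `best_match` and the scoring threshold are hypothetical stand-ins, not the real rustc signatures; the point is only that the generic wrapper is compiled once per iterator type, while the slice-taking function exists once.

```rust
// Hypothetical stand-in for a symbol type; not rustc's real `Symbol`.
#[derive(Clone, Copy, Debug, PartialEq, Eq)]
struct Sym(&'static str);

// Plain Levenshtein distance using two rolling rows.
fn lev_distance(a: &str, b: &str) -> usize {
    let b_chars: Vec<char> = b.chars().collect();
    let mut prev: Vec<usize> = (0..=b_chars.len()).collect();
    for (i, ca) in a.chars().enumerate() {
        let mut cur = vec![i + 1];
        for (j, cb) in b_chars.iter().enumerate() {
            let cost = if ca == *cb { 0 } else { 1 };
            let v = (prev[j] + cost).min(prev[j + 1] + 1).min(cur[j] + 1);
            cur.push(v);
        }
        prev = cur;
    }
    prev[b_chars.len()]
}

// Generic version: a fresh copy is instantiated for every distinct
// iterator type passed in, even though it collects immediately.
fn best_match_generic<I: Iterator<Item = Sym>>(candidates: I, lookup: &str) -> Option<Sym> {
    let candidates: Vec<Sym> = candidates.collect();
    best_match(&candidates, lookup)
}

// Non-generic version: a single copy in the binary; callers collect first.
// The distance cutoff here is an assumption chosen for illustration.
fn best_match(candidates: &[Sym], lookup: &str) -> Option<Sym> {
    let max_dist = std::cmp::max(lookup.len(), 3) / 3;
    candidates
        .iter()
        .map(|&s| (s, lev_distance(lookup, s.0)))
        .filter(|&(_, d)| d <= max_dist)
        .min_by_key(|&(_, d)| d)
        .map(|(s, _)| s)
}

fn main() {
    let names = ["foo", "bar", "quux"];
    // Call sites can keep their iterator chains and collect before the call.
    let candidates: Vec<Sym> = names.iter().map(|&n| Sym(n)).collect();
    println!("{:?}", best_match(&candidates, "baz"));
    println!("{:?}", best_match_generic(names.iter().map(|&n| Sym(n)), "baz"));
}
```
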

Make `_` an expression, to discard values in destructuring assignments

This is the third and final step towards implementing destructuring assignment (RFC: rust-lang/rfcs#2909, tracking issue: #71126). This PR is the third and final part of #71156, which was split up to allow for easier review.

With this PR, an underscore `_` is parsed as an expression but is allowed *only* on the left-hand side of a destructuring assignment. There it simply discards a value, similarly to the wildcard `_` in patterns. For instance,

```rust

(a, _) = (1, 2)

```

will simply assign 1 to `a` and discard the 2. Note that for consistency,

```

_ = foo

```

is also allowed and equivalent to just `foo`.
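As a small runnable sketch of the behavior described here (the helper names `first_of` and `make_pair` are made up for illustration, and this assumes a toolchain where destructuring assignment is available):

```rust
// Hypothetical helper producing a value we only partly care about.
fn make_pair() -> (i32, i32) {
    (1, 2)
}

// Keeps the first component and discards the second with `_`.
fn first_of(pair: (i32, i32)) -> i32 {
    let mut first = -1;
    (first, _) = pair;
    first
}

fn main() {
    println!("{}", first_of(make_pair()));
    // A bare `_` on the left-hand side evaluates the right-hand side
    // and discards the result.
    _ = make_pair();
}
```
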

Thanks to `@varkor` who helped with the implementation, particularly around pre-expansion gating.

r? `@petrochenkov`

Implement destructuring assignment for structs and slices

This is the second step towards implementing destructuring assignment (RFC: rust-lang/rfcs#2909, tracking issue: #71126). This PR is the second part of #71156, which was split up to allow for easier review.

Note that the first PR (#78748) is not merged yet, so it is included as the first commit in this one. I thought this would allow the review to start earlier because I have some time this weekend to respond to reviews. If `@petrochenkov` prefers to wait until the first PR is merged, I totally understand, of course.

This PR implements destructuring assignment for (tuple) structs and slices. In order to do this, the following *parser change* was necessary: struct expressions are no longer required to have a base expression, i.e. `Struct { a: 1, .. }` becomes legal (in order to act like a struct pattern).
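A minimal sketch of what this enables, using made-up types `Point` and `Pair` and a hypothetical `demo` function (assuming a toolchain where destructuring assignment is available):

```rust
#[allow(dead_code)]
struct Point {
    x: i32,
    y: i32,
}

#[allow(dead_code)]
struct Pair(i32, i32);

fn demo() -> (i32, i32, i32, i32) {
    let (mut a, mut b, mut c, mut d) = (0, 0, 0, 0);
    // Struct: `..` on the left-hand side ignores the remaining fields.
    Point { x: a, .. } = Point { x: 1, y: 2 };
    // Tuple struct: `_` discards the second field.
    Pair(b, _) = Pair(3, 4);
    // Slice: `..` skips the middle elements.
    [c, .., d] = [5, 6, 7, 8];
    (a, b, c, d)
}

fn main() {
    println!("{:?}", demo());
}
```
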

Unfortunately, this PR slightly regresses the diagnostics implemented in #77283. However, it is only a missing help message in `src/test/ui/issues/issue-77218.rs`. Other instances of this diagnostic are not affected. Since I don't exactly understand how this help message works and how to fix it yet, I was hoping it's OK to regress this temporarily and fix it in a follow-up PR.

Thanks to `@varkor` who helped with the implementation, particularly around the struct rest changes.

r? `@petrochenkov`

Do not collect tokens for doc comments

A doc comment is a single token, and the AST has all the information needed to re-create it precisely.

Doc comments are also responsible for the majority of calls to `collect_tokens` (with `num_calls == 1` and `num_calls == 0`, cc https://github.com/rust-lang/rust/pull/78736).

(I also moved token collection into `fn parse_attribute` to deduplicate code a bit.)

r? `@Aaron1011`

The discussion seems to have resolved that this lint is a bit "noisy" in

that applying it in all places would result in a reduction in

readability.

A few of the trivial functions (like `Path::new`) are fine to leave

outside of closures.

The general rule seems to be that anything that is obviously an

allocation (`Box`, `Vec`, `vec![]`) should be in a closure, even if it

is a 0-sized allocation.
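A small sketch of that rule, assuming the lint in question is one that prefers lazily evaluated `_or_else` forms (the commit does not name it here); the function names are hypothetical:

```rust
use std::path::Path;

// An obvious allocation (`vec![]`, `String`) goes in a closure so it is
// only built when the `Option` is `None`.
fn names_or_default(names: Option<Vec<String>>) -> Vec<String> {
    names.unwrap_or_else(|| vec!["default".to_string()])
}

// A trivial function like `Path::new` is left outside a closure,
// since wrapping it would only cost readability.
fn path_or_root(p: Option<&Path>) -> &Path {
    p.unwrap_or(Path::new("/"))
}

fn main() {
    println!("{:?}", names_or_default(None));
    println!("{:?}", path_or_root(None));
}
```
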

rustc_ast: Do not panic by default when visiting macro calls

Panicking by default made sense when we didn't have HIR or MIR and everything worked on AST, but now all AST visitors run early and the majority of them have to deal with macro calls, often by ignoring them.

The second commit renames `visit_mac` to `visit_mac_call`, the corresponding structures were renamed earlier in https://github.com/rust-lang/rust/pull/69589.

rustc_ast: Visit tokens stored in AST nodes in mutable visitor

After #77271, token visiting is enabled only for one visitor in `rustc_expand/src/mbe/transcribe.rs` which applies hygiene marks to tokens produced by declarative macros (`macro_rules` or `macro`), so this change doesn't affect anything else.

When a macro has some interpolated token from an outer macro in its output

```rust

macro inner() {

$interpolated

}

```

we can use the usual interpretation of interpolated tokens in the token-based model - a None-delimited group - to write this macro in an equivalent form

```rust

macro inner() {

⟪ a b c d ⟫

}

```

When we are expanding the macro `inner` we need to apply hygiene marks to all tokens produced by it, including the tokens inside the group.

Before this PR we did this by visiting the AST piece inside the interpolated token and applying marks to all spans in it.

I'm not sure this is 100% correct (ideally we should apply the marks to tokens and then re-parse the AST from tokens), but it's a very good approximation at least.

We didn't however apply the marks to actual tokens stored in the nonterminal, so if we used the nonterminal as a token rather than as an AST piece (e.g. passed it to a proc macro), then we got hygiene bugs.

This PR applies the marks to tokens in addition to the AST pieces thus fixing the issue.

r? `@Aaron1011`

Originally, there was a dedicated pass for renumbering

AST NodeIds to have actual values. This pass was added by

commit a5ad4c3794.

Then, later, this step was moved to where it resides now,

macro expansion. See commit c86c8d41a2

or PR #36438.

The comment snippet, added by the original commit, has

survived without any change, becoming outdated

when the dedicated pass was removed.

Nowadays, grepping for the next_node_id function will turn up

multiple places in the compiler that call it, but the main

rewriting that the comment talks about is still done in the

expansion step, inside an innocuous looking visit_id function

that's called during macro invocation collection.

Treat trailing semicolon as a statement in macro call

See https://github.com/rust-lang/rust/issues/61733#issuecomment-716188981

We now preserve the trailing semicolon in a macro invocation, even if

the macro expands to nothing. As a result, the following code no longer

compiles:

```rust

macro_rules! empty {

() => { }

}

fn foo() -> bool { //~ ERROR mismatched

{ true } //~ ERROR mismatched

empty!();

}

```

Previously, `{ true }` would be considered the trailing expression, even

though there's a semicolon in `empty!();`

This makes macro expansion more token-based.

Suggest that expressions that look like const generic arguments should be enclosed in brackets

I pulled out the changes for const expressions from https://github.com/rust-lang/rust/pull/71592 (without the trait object diagnostic changes) and made some small changes; the implementation is `@estebank`'s.

We're also going to want to make some changes separately to account for trait objects (they result in poor diagnostics, as is evident from one of the test cases here), such as an adaptation of https://github.com/rust-lang/rust/pull/72273.

Fixes https://github.com/rust-lang/rust/issues/70753.

r? `@petrochenkov`

Split out statement attributes changes from #78306

This is the same as PR https://github.com/rust-lang/rust/pull/78306, but `unused_doc_comments` is modified to explicitly ignore statement items (which preserves the current behavior).

This shouldn't have any user-visible effects, so it can be landed without lang team discussion.

---------

When the 'early' and 'late' visitors visit an attribute target, they

activate any lint attributes (e.g. `#[allow]`) that apply to it.

This can affect warnings emitted on sibling attributes. For example,

the following code does not produce an `unused_attributes` warning for

`#[inline]`, since the sibling `#[allow(unused_attributes)]` suppressed

the warning.

```rust

trait Foo {

#[allow(unused_attributes)] #[inline] fn first();

#[inline] #[allow(unused_attributes)] fn second();

}

```

However, we do not do this for statements - instead, the lint attributes

only become active when we visit the struct nested inside `StmtKind`

(e.g. `Item`).

Currently, this is difficult to observe due to another issue - the

`HasAttrs` impl for `StmtKind` ignores attributes for `StmtKind::Item`.

As a result, the `unused_doc_comments` lint will never see attributes on

item statements.

This commit makes two interrelated fixes to the handling of inert

(non-proc-macro) attributes on statements:

* The `HasAttrs` impl for `StmtKind` now returns attributes for

`StmtKind::Item`, treating it just like every other `StmtKind`

variant. The only place relying on the old behavior was macro expansion,

which has been updated to explicitly ignore attributes on item

statements. This allows the `unused_doc_comments` lint to fire for

item statements.

* The `early` and `late` lint visitors now activate lint attributes when

invoking the callback for `Stmt`. This ensures that a lint

attribute (e.g. `#[allow(unused_doc_comments)]`) can be applied to

sibling attributes on an item statement.

For now, the `unused_doc_comments` lint is explicitly disabled on item

statements, which preserves the current behavior. The exact locations

where this lint should fire are being discussed in PR #78306.

This allows us to avoid synthesizing tokens in `prepend_attr`, since we

have the original tokens available.

We still need to synthesize tokens when expanding `cfg_attr`,

but this is an unavoidable consequence of the syntax of `cfg_attr` -

the user does not supply the `#` and `[]` tokens that a `cfg_attr`

expands to.

Rewrite `collect_tokens` implementations to use a flattened buffer

Instead of trying to collect tokens at each depth, we 'flatten' the

stream as we go along, pushing open/close delimiters to our buffer

just like regular tokens. Once capturing is complete, we reconstruct a

nested `TokenTree::Delimited` structure, producing a normal

`TokenStream`.

The reconstructed `TokenStream` is not created immediately - instead, it is

produced on-demand by a closure (wrapped in a new `LazyTokenStream` type). This

closure stores a clone of the original `TokenCursor`, plus a record of the

number of calls to `next()/next_desugared()`. This is sufficient to reconstruct

the tokenstream seen by the callback without storing any additional state. If

the tokenstream is never used (e.g. when a captured `macro_rules!` argument is

never passed to a proc macro), we never actually create a `TokenStream`.
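The flatten-then-rebuild idea can be sketched as follows. This is a toy model under stated assumptions: `FlatToken`, `Tree`, `rebuild`, `LazyTree` and `capture` are hypothetical names, and the closure here simply owns the flat buffer, whereas the real implementation stores a cloned `TokenCursor` plus a call count.

```rust
// Toy flat token: delimiters are pushed like any other token.
#[derive(Clone, Debug, PartialEq)]
enum FlatToken {
    Open,
    Close,
    Tok(&'static str),
}

// Toy nested tree, standing in for `TokenTree::Delimited` nesting.
#[derive(Clone, Debug, PartialEq)]
enum Tree {
    Leaf(&'static str),
    Delimited(Vec<Tree>),
}

// Rebuild the nested structure from the flat buffer using a stack of
// partially built groups.
fn rebuild(flat: &[FlatToken]) -> Vec<Tree> {
    let mut stack: Vec<Vec<Tree>> = vec![Vec::new()];
    for tok in flat {
        match tok {
            FlatToken::Open => stack.push(Vec::new()),
            FlatToken::Close => {
                let inner = stack.pop().expect("unbalanced close delimiter");
                stack
                    .last_mut()
                    .expect("unbalanced close delimiter")
                    .push(Tree::Delimited(inner));
            }
            FlatToken::Tok(s) => stack.last_mut().unwrap().push(Tree::Leaf(*s)),
        }
    }
    assert_eq!(stack.len(), 1, "unbalanced open delimiter");
    stack.pop().unwrap()
}

// Lazy wrapper: the nested tree is only built if `create` is called.
struct LazyTree(Box<dyn Fn() -> Vec<Tree>>);

impl LazyTree {
    fn create(&self) -> Vec<Tree> {
        (self.0)()
    }
}

fn capture(flat: Vec<FlatToken>) -> LazyTree {
    LazyTree(Box::new(move || rebuild(&flat)))
}

fn main() {
    use FlatToken::*;
    let lazy = capture(vec![Tok("a"), Open, Tok("b"), Close, Tok("c")]);
    // Nothing has been rebuilt until this point.
    println!("{:?}", lazy.create());
}
```

If `create` is never called, the only cost is holding the captured state, which mirrors the motivation given above for deferring `TokenStream` construction.
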

This implementation has a number of advantages over the previous one:

* It is significantly simpler, with no edge cases around capturing the

start/end of a delimited group.

* It can be easily extended to allow replacing tokens at an arbitrary

'depth' by just using `Vec::splice` at the proper position. This is

important for PR #76130, which requires us to track information about

attributes along with tokens.

* The lazy approach to `TokenStream` construction allows us to easily

parse an AST struct, and then decide after the fact whether we need a

`TokenStream`. This will be useful when we start collecting tokens for

`Attribute` - we can discard the `LazyTokenStream` if the parsed

attribute doesn't need tokens (e.g. is a builtin attribute).

The performance impact seems to be negligible (see

https://github.com/rust-lang/rust/pull/77250#issuecomment-703960604). There is a

small slowdown on a few benchmarks, but it only rises above 1% for incremental

builds, where it represents a larger fraction of the much smaller instruction

count. There is a ~1% speedup on a few other incremental benchmarks - my guess is

that the speedups and slowdowns will usually cancel out in practice.

Remove unused code

Rustc has a builtin lint for detecting unused code inside a crate, but when an item is marked `pub`, the code, even if unused inside the entire workspace, is never marked as such. Therefore, I've built [warnalyzer](https://github.com/est31/warnalyzer) to detect unused items in a cross-crate setting.

Closes https://github.com/est31/warnalyzer/issues/2

Prevent stack overflow in deeply nested types.

Related issue #75577 (?)

Unfortunately, I am unable to test whether this actually solves the problem because apparently, 12GB RAM + 2GB swap is not enough to compile the (admittedly toy) source file.

use sort_unstable to sort primitive types

It's not important to retain original order if we have &[1, 1, 2, 3] for example.

clippy::stable_sort_primitive
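For illustration, a minimal sketch of the substitution (the function name `sorted_ids` is made up): equal primitive values are indistinguishable, so a stable sort's ordering guarantee buys nothing here.

```rust
// Sorting primitives: `sort_unstable` gives the same observable result
// as `sort`, since equal integers cannot be told apart.
fn sorted_ids(mut ids: Vec<u32>) -> Vec<u32> {
    ids.sort_unstable();
    ids
}

fn main() {
    println!("{:?}", sorted_ids(vec![3, 1, 2, 1]));
}
```
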

We currently only attach tokens when parsing a `:stmt` matcher for a

`macro_rules!` macro. Proc-macro attributes on statements are still

unstable, and need additional work.

Allow try blocks as the argument to return expressions

Fixes #76271

I don't think this needs to be edition-aware (phew) since `return try` in 2015 is also the start of an expression, just with a struct literal instead of a block (`return try { x: 4, y: 5 }`).