Move format_args!() into AST (and expand it during AST lowering)

Implements https://github.com/rust-lang/compiler-team/issues/541

This moves FormatArgs from rustc_builtin_macros to rustc_ast_lowering. For now, the end result is the same. But this allows for future changes to do smarter things with format_args!(). It also allows Clippy to directly access the ast::FormatArgs, making things a lot easier.

This change turns the format args types into lang items. The builtin macro used to refer to them by their path. After this change, the path is no longer relevant, making it easier to make changes in `core`.

This updates clippy to use the new language items, but this doesn't yet make clippy use the ast::FormatArgs structure that's now available. That should be done after this is merged.
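To make the idea of a structured `ast::FormatArgs` concrete, here is a small, self-contained model of what such a structure might hold once the format string has been parsed. It is a sketch under assumptions, not the rustc definition. The names `FormatArgsPiece`, `template` and `arguments`, and the `unused_arguments` and `render` helpers, are illustrative. Arguments are plain strings rather than AST expressions, and format specs such as `{:x}` are omitted.

```rust
/// One piece of a parsed format string (hypothetical, simplified).
#[derive(Clone, Debug, PartialEq)]
pub enum FormatArgsPiece {
    /// Literal text copied verbatim, e.g. `"Hello, "`.
    Literal(String),
    /// A `{}` placeholder referring to an argument by index.
    Placeholder { argument_index: usize },
}

/// Hypothetical stand-in for `ast::FormatArgs`: arguments are plain strings here.
#[derive(Clone, Debug, PartialEq)]
pub struct FormatArgs {
    pub template: Vec<FormatArgsPiece>,
    pub arguments: Vec<String>,
}

impl FormatArgs {
    /// A lint-style query that needs no re-parsing of the string literal:
    /// which arguments are never referenced by any placeholder?
    pub fn unused_arguments(&self) -> Vec<usize> {
        (0..self.arguments.len())
            .filter(|&i| {
                !self.template.iter().any(|piece| {
                    matches!(piece, FormatArgsPiece::Placeholder { argument_index } if *argument_index == i)
                })
            })
            .collect()
    }

    /// Stand-in for lowering: builds the final string directly.
    /// Assumes every placeholder index is in range.
    pub fn render(&self) -> String {
        let mut out = String::new();
        for piece in &self.template {
            match piece {
                FormatArgsPiece::Literal(text) => out.push_str(text),
                FormatArgsPiece::Placeholder { argument_index } => {
                    out.push_str(&self.arguments[*argument_index])
                }
            }
        }
        out
    }
}
```

A tool like Clippy could answer a question such as "which arguments are unused?" by walking `template`, instead of re-parsing the string literal.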

Convert all the crates that have had their diagnostic migration completed (except save_analysis because that will be deleted soon and apfloat because of the licensing problem).

Remove `token::Lit` from `ast::MetaItemLit`.

Currently `ast::MetaItemLit` represents the literal kind twice. This PR removes that redundancy. Best reviewed one commit at a time.

r? `@petrochenkov`

Remove useless borrows and derefs

They are nothing more than noise.

<sub>These are not all of them, but my clippy started crashing (stack overflow), so rip :(</sub>

`token::Lit` contains a `kind` field that indicates what kind of literal it is. `ast::MetaItemLit` currently wraps a `token::Lit` but also has its own `kind` field. This means that `ast::MetaItemLit` encodes the literal kind in two different ways.

This commit changes `ast::MetaItemLit` so it no longer wraps `token::Lit`. It now contains the `symbol` and `suffix` fields from `token::Lit`, but not the `kind` field, eliminating the redundancy.

This is required to distinguish between cooked and raw byte string literals in an `ast::LitKind`, without referring to an adjacent `token::Lit`. It's a prerequisite for the next commit.
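A minimal model of the before and after shapes may help. All types below are simplified stand-ins: `String` replaces interned symbols, and only a few literal kinds are kept. `MetaItemLitBefore` and the `as_token_lit` helper are hypothetical names. The raw/cooked distinction for strings is carried by a `StrStyle` inside `LitKind`, so the model shows the state that the next commit is said to enable.

```rust
/// Hypothetical, pared-down stand-in for `token::LitKind`.
#[derive(Clone, Copy, Debug, PartialEq)]
pub enum TokenLitKind {
    Integer,
    Str,
    StrRaw(u8), // number of `#`s
    ByteStr,
    ByteStrRaw(u8),
}

#[derive(Clone, Debug, PartialEq)]
pub struct TokenLit {
    pub kind: TokenLitKind,
    pub symbol: String,
    pub suffix: Option<String>,
}

#[derive(Clone, Copy, Debug, PartialEq)]
pub enum StrStyle {
    Cooked,
    Raw(u8),
}

/// Hypothetical, pared-down stand-in for `ast::LitKind`.
#[derive(Clone, Debug, PartialEq)]
pub enum LitKind {
    Int(u128),
    Str(String, StrStyle),
    ByteStr(Vec<u8>, StrStyle),
}

/// Before: the kind is recorded twice, in `token_lit.kind` and in `kind`,
/// and nothing stops the two from disagreeing.
#[allow(dead_code)]
pub struct MetaItemLitBefore {
    pub token_lit: TokenLit,
    pub kind: LitKind,
}

/// After: only `symbol` and `suffix` are kept from the token literal.
#[derive(Clone, Debug, PartialEq)]
pub struct MetaItemLit {
    pub symbol: String,
    pub suffix: Option<String>,
    pub kind: LitKind,
}

impl MetaItemLit {
    /// Rebuilds a token-level literal on demand, deriving its kind from `self.kind`.
    pub fn as_token_lit(&self) -> TokenLit {
        let kind = match self.kind {
            LitKind::Int(_) => TokenLitKind::Integer,
            LitKind::Str(_, StrStyle::Cooked) => TokenLitKind::Str,
            LitKind::Str(_, StrStyle::Raw(n)) => TokenLitKind::StrRaw(n),
            LitKind::ByteStr(_, StrStyle::Cooked) => TokenLitKind::ByteStr,
            LitKind::ByteStr(_, StrStyle::Raw(n)) => TokenLitKind::ByteStrRaw(n),
        };
        TokenLit { kind, symbol: self.symbol.clone(), suffix: self.suffix.clone() }
    }
}
```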

Lower them into a single item with multiple resolutions instead.

This also makes it possible to remove the extra `NodeId`s and `DefId`s that belonged to those additional items.

There is code for converting `Attribute` (syntactic) to `MetaItem` (semantic). There is also code for the reverse direction. The reverse direction isn't really necessary; it's currently only used when generating attributes, e.g. in `derive` code.

This commit adds some new functions for creating `Attribute`s directly, without involving `MetaItem`s: `mk_attr_word`, `mk_attr_name_value_str`, `mk_attr_nested_word`, and `ExtCtxt::attr_{word,name_value_str,nested_word}`.

These new methods replace the old functions for creating `Attribute`s: `mk_attr_inner`, `mk_attr_outer`, and `ExtCtxt::attribute`. Those functions took `MetaItem`s as input, and relied on many other functions that created `MetaItem`s, which are also removed: `mk_name_value_item`, `mk_list_item`, `mk_word_item`, `mk_nested_word_item`, `{MetaItem,MetaItemKind,NestedMetaItem}::token_trees`, `MetaItemKind::attr_args`, `MetaItemLit::{from_lit_kind,to_token}`, `ExtCtxt::meta_word`.

Overall this cuts more than 100 lines of code and makes things simpler.
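Here is a sketch of the "create attributes directly" approach, with no `MetaItem` in between. This is not the rustc API: the real functions also take an attribute-ID generator and a span, and they use interned symbols. Only the three function names come from the text above. `AttrStyle`, `AttrArgs`, `Attribute` and `to_source` are simplified, hypothetical stand-ins.

```rust
/// Hypothetical, simplified model of attribute construction.
#[derive(Clone, Copy, Debug, PartialEq)]
pub enum AttrStyle {
    Outer, // #[...]
    Inner, // #![...]
}

#[derive(Clone, Debug, PartialEq)]
pub enum AttrArgs {
    Empty,
    Delimited(Vec<String>),
    Eq(String),
}

#[derive(Clone, Debug, PartialEq)]
pub struct Attribute {
    pub style: AttrStyle,
    pub name: String,
    pub args: AttrArgs,
}

/// Builds `#[name]` directly.
pub fn mk_attr_word(style: AttrStyle, name: &str) -> Attribute {
    Attribute { style, name: name.to_string(), args: AttrArgs::Empty }
}

/// Builds `#[name = "value"]` directly.
pub fn mk_attr_name_value_str(style: AttrStyle, name: &str, value: &str) -> Attribute {
    Attribute { style, name: name.to_string(), args: AttrArgs::Eq(value.to_string()) }
}

/// Builds `#[outer(inner)]` directly.
pub fn mk_attr_nested_word(style: AttrStyle, outer: &str, inner: &str) -> Attribute {
    Attribute {
        style,
        name: outer.to_string(),
        args: AttrArgs::Delimited(vec![inner.to_string()]),
    }
}

impl Attribute {
    /// Renders the attribute as source text (illustrative only).
    pub fn to_source(&self) -> String {
        let open = match self.style {
            AttrStyle::Outer => "#[",
            AttrStyle::Inner => "#![",
        };
        let args = match &self.args {
            AttrArgs::Empty => String::new(),
            AttrArgs::Delimited(items) => format!("({})", items.join(", ")),
            // `{:?}` quotes the string value.
            AttrArgs::Eq(value) => format!(" = {:?}", value),
        };
        format!("{}{}{}]", open, self.name, args)
    }
}
```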

`Lit::from_included_bytes` calls `Lit::from_lit_kind`, but the two call sites only need the resulting `token::Lit`, not the full `ast::Lit`. This commit changes those call sites to use `LitKind::to_token_lit`, which means `from_included_bytes` can be removed.

`MacArgs` is an enum with three variants: `Empty`, `Delimited`, and `Eq`. It's used in two ways:

- For representing attribute macro arguments (e.g. in `AttrItem`), where all three variants are used.
- For representing the arguments of function-like macros (e.g. in `MacCall` and `MacroDef`), where only the `Delimited` variant is used.

In other words, `MacArgs` is used in two quite different places because the two uses partially overlap. I find this makes the code hard to read. It also leads to various unreachable code paths, and allows invalid values (such as accidentally using `MacArgs::Empty` in a `MacCall`).

This commit splits `MacArgs` in two:

- `DelimArgs` is a new struct just for the "delimited arguments" case. It is now used in `MacCall` and `MacroDef`.
- `AttrArgs` is a renaming of the old `MacArgs` enum for the attribute macro case. Its `Delimited` variant now contains a `DelimArgs`.

Various other related things are renamed as well.

These changes make the code clearer, avoid several unreachable paths, and disallow the invalid values.
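The split can be modeled in a few lines. The type names `DelimArgs` and `AttrArgs` come from the commit message. Everything else is a simplified assumption: `Delimiter`, the `String` standing in for a token stream, and the `to_source` helpers. Because `MacCall` can only hold a `DelimArgs`, printing it needs no unreachable arms for `Empty` or `Eq`.

```rust
/// Hypothetical stand-ins; `tokens: String` replaces a real token stream.
#[derive(Clone, Copy, Debug, PartialEq)]
pub enum Delimiter {
    Parenthesis,
    Bracket,
    Brace,
}

/// Delimited arguments: the only shape a function-like macro call can have.
#[derive(Clone, Debug, PartialEq)]
pub struct DelimArgs {
    pub delim: Delimiter,
    pub tokens: String,
}

/// Attribute arguments: `#[attr]`, `#[attr(...)]`, or `#[attr = value]`.
#[derive(Clone, Debug, PartialEq)]
pub enum AttrArgs {
    Empty,
    Delimited(DelimArgs),
    Eq(String),
}

/// A macro call holds `DelimArgs`, so an "empty" macro call is unrepresentable.
#[derive(Clone, Debug, PartialEq)]
pub struct MacCall {
    pub path: String,
    pub args: DelimArgs,
}

impl DelimArgs {
    pub fn to_source(&self) -> String {
        let (open, close) = match self.delim {
            Delimiter::Parenthesis => ('(', ')'),
            Delimiter::Bracket => ('[', ']'),
            Delimiter::Brace => ('{', '}'),
        };
        format!("{}{}{}", open, self.tokens, close)
    }
}

impl MacCall {
    pub fn to_source(&self) -> String {
        // No `Empty`/`Eq` cases to rule out here.
        format!("{}!{}", self.path, self.args.to_source())
    }
}

impl AttrArgs {
    pub fn to_source(&self) -> String {
        match self {
            AttrArgs::Empty => String::new(),
            AttrArgs::Delimited(args) => args.to_source(),
            AttrArgs::Eq(value) => format!(" = {}", value),
        }
    }
}
```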

Instead of `ast::Lit`.

Literal lowering now happens at two different times. Expression literals are lowered when HIR is created. Attribute literals are lowered during parsing.

This commit changes the language very slightly. Some programs that previously failed to compile will now compile. This is because some invalid literals that are removed by `cfg` or attribute macros will no longer trigger errors. See this comment for more details:

https://github.com/rust-lang/rust/pull/102944#issuecomment-1277476773

`BindingAnnotation` refactor

* `ast::BindingMode` is deleted and replaced with `hir::BindingAnnotation` (which is moved to `ast`)

* `BindingAnnotation` is changed from an enum to a tuple struct e.g. `BindingAnnotation(ByRef::No, Mutability::Mut)`

* Associated constants added for convenience `BindingAnnotation::{NONE, REF, MUT, REF_MUT}`

One goal is to make it clearer that `BindingAnnotation` merely represents the syntax `ref mut` and not the actual binding mode. This was especially confusing since we had `ast::BindingMode`->`hir::BindingAnnotation`->`thir::BindingMode`.

I wish there were more symmetry between `ByRef` and `Mutability` (variant) naming (maybe `Mutable::Yes`?), and I also don't love how long the name `BindingAnnotation` is, but this seems like the best compromise. Ideas welcome.
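The tuple-struct shape and the associated constants can be sketched as a standalone model; this is not the `rustc_ast` source. The `prefix_str` helper is included for illustration. Because the types derive `PartialEq` and `Eq`, the constants can be used as match patterns much like enum variants.

```rust
#[derive(Clone, Copy, Debug, PartialEq, Eq)]
pub enum ByRef {
    Yes,
    No,
}

#[derive(Clone, Copy, Debug, PartialEq, Eq)]
pub enum Mutability {
    Not,
    Mut,
}

/// Purely syntactic: records whether `ref` and/or `mut` were written.
#[derive(Clone, Copy, Debug, PartialEq, Eq)]
pub struct BindingAnnotation(pub ByRef, pub Mutability);

impl BindingAnnotation {
    pub const NONE: Self = Self(ByRef::No, Mutability::Not);
    pub const REF: Self = Self(ByRef::Yes, Mutability::Not);
    pub const MUT: Self = Self(ByRef::No, Mutability::Mut);
    pub const REF_MUT: Self = Self(ByRef::Yes, Mutability::Mut);

    /// Illustrative helper: the source prefix this annotation stands for.
    pub fn prefix_str(self) -> &'static str {
        match self {
            Self::NONE => "",
            Self::REF => "ref ",
            Self::MUT => "mut ",
            Self::REF_MUT => "ref mut ",
        }
    }
}
```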

- Rename `ast::Lit::token` as `ast::Lit::token_lit`, because its type is `token::Lit`, which is not a token. (This has been confusing me for a long time.)

reasonable because we have an `ast::token::Lit` inside an `ast::Lit`.

- Rename `LitKind::{from,to}_lit_token` as `LitKind::{from,to}_token_lit`, to match the above change and `token::Lit`.

Stringify non-shorthand visibility correctly

This makes `stringify!(pub(in crate))` evaluate to `pub(in crate)` rather than `pub(crate)`, matching the behavior before the `crate` shorthand was removed. Further, this changes `stringify!(pub(in super))` to evaluate to `pub(in super)` rather than the current `pub(super)`. If the latter is not desired (it is _technically_ breaking), it can be undone.

Fixes #99981

`@rustbot` label +C-bug +regression-from-stable-to-beta +T-compiler

A `TokenStream` contains a `Lrc<Vec<(TokenTree, Spacing)>>`. But this is not quite right. `Spacing` makes sense for `TokenTree::Token`, but does not make sense for `TokenTree::Delimited`, because a `TokenTree::Delimited` cannot be joined with another `TokenTree`.

This commit fixes this problem by adding `Spacing` to `TokenTree::Token`, changing `TokenStream` to contain a `Lrc<Vec<TokenTree>>`, and removing the `TreeAndSpacing` typedef.

The commit removes these two impls:

- `impl From<TokenTree> for TokenStream`
- `impl From<TokenTree> for TreeAndSpacing`

These were useful, but also resulted in code with many `.into()` calls that was hard to read, particularly for anyone not highly familiar with the relevant types. This commit makes some other changes to compensate:

- `TokenTree::token()` becomes `TokenTree::token_{alone,joint}()`.
- `TokenStream::token_{alone,joint}()` are added.
- `TokenStream::delimited` is added.

This results in things like this:

```rust
TokenTree::token(token::Semi, stmt.span).into()
```

changing to this:

```rust
TokenStream::token_alone(token::Semi, stmt.span)
```

This makes the type of the result, and its spacing, clearer.

These changes also simplify `Cursor` and `CursorRef`, because they no longer need to distinguish between `next` and `next_with_spacing`.
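A simplified model of the new representation follows. It is a sketch built on assumptions, not rustc's code. Tokens are plain strings, delimiters are `char`s, spans are omitted, and `Rc` stands in for `Lrc`. The `render` method is hypothetical: it inserts a space after `Alone` tokens and after delimited groups. The model shows `Spacing` living only on `TokenTree::Token`.

```rust
use std::rc::Rc;

#[derive(Clone, Copy, Debug, PartialEq)]
pub enum Spacing {
    Alone,
    Joint,
}

#[derive(Clone, Debug, PartialEq)]
pub enum TokenTree {
    /// Only leaf tokens carry `Spacing`: only they can be joined to the next token.
    Token(String, Spacing),
    /// A delimited group (open delimiter plus contents) never joins with a neighbour.
    Delimited(char, TokenStream),
}

#[derive(Clone, Debug, PartialEq)]
pub struct TokenStream(pub Rc<Vec<TokenTree>>);

impl TokenTree {
    pub fn token_alone(text: &str) -> TokenTree {
        TokenTree::Token(text.to_string(), Spacing::Alone)
    }
    pub fn token_joint(text: &str) -> TokenTree {
        TokenTree::Token(text.to_string(), Spacing::Joint)
    }
}

impl TokenStream {
    pub fn new(trees: Vec<TokenTree>) -> TokenStream {
        TokenStream(Rc::new(trees))
    }
    /// Single-token stream: result type and spacing are explicit, no `.into()`.
    pub fn token_alone(text: &str) -> TokenStream {
        TokenStream::new(vec![TokenTree::token_alone(text)])
    }
    pub fn token_joint(text: &str) -> TokenStream {
        TokenStream::new(vec![TokenTree::token_joint(text)])
    }
    pub fn delimited(open: char, inner: TokenStream) -> TokenStream {
        TokenStream::new(vec![TokenTree::Delimited(open, inner)])
    }

    /// Hypothetical printer: a space follows every `Alone` token and every
    /// delimited group, except at the end of the stream.
    pub fn render(&self) -> String {
        let mut out = String::new();
        let len = self.0.len();
        for (i, tree) in self.0.iter().enumerate() {
            let space_after = match tree {
                TokenTree::Token(text, spacing) => {
                    out.push_str(text);
                    *spacing == Spacing::Alone
                }
                TokenTree::Delimited(open, inner) => {
                    let close = match *open {
                        '(' => ')',
                        '[' => ']',
                        _ => '}',
                    };
                    out.push(*open);
                    out.push_str(&inner.render());
                    out.push(close);
                    true
                }
            };
            if space_after && i + 1 < len {
                out.push(' ');
            }
        }
        out
    }
}
```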

The change was "Show invisible delimiters (within comments) when pretty printing". It's useful to show these delimiters, but it is a breaking change for some proc macros.

Fixes #97608.

Begin fixing all the broken doctests in `compiler/`

Begins to fix #95994.

All of them pass now but 24 of them I've marked with `ignore HELP (<explanation>)` (asking for help) as I'm unsure how to get them to work / if we should leave them as they are.

There are also a few that I marked `ignore` that could maybe be made to work but seem less important.

Each `ignore` has a rough "reason" for ignoring after it in parentheses, with

- `(pseudo-rust)` meaning "mostly rust-like but contains foreign syntax"

- `(illustrative)` a somewhat catchall for either a fragment of rust that doesn't stand on its own (like a lone type), or abbreviated rust with ellipses and undeclared types that would get too cluttered if made compile-worthy.

- `(not-rust)` stuff that isn't rust but benefits from the syntax highlighting, like MIR.

- `(internal)` uses `rustc_*` code which would be difficult to make work with the testing setup.

Those reason notes are a bit inconsistently applied and messy though. If that's important I can go through them again and try a more principled approach. When I run `rg '```ignore \(' .` on the repo, there look to be lots of different conventions other people have used for this sort of thing. I could try unifying them all if that would be helpful.

I'm not sure if there was a better existing way to do this but I wrote my own script to help me run all the doctests and wade through the output. If that would be useful to anyone else, I put it here: https://github.com/Elliot-Roberts/rust_doctest_fixing_tool

Overhaul `MacArgs`

Motivation:

- Clarify some code that I found hard to understand.

- Eliminate one of the three places where `TokenKind::Interpolated` values are created.

r? `@petrochenkov`

The value in `MacArgs::Eq` is currently represented as a `Token`. Because of `TokenKind::Interpolated`, `Token` can be either a token or an arbitrary AST fragment. In practice, a `MacArgs::Eq` starts out as a literal or macro call AST fragment, and then is later lowered to a literal token. But this is very non-obvious. `Token` is a much more general type than what is needed.

This commit restricts things by introducing a new type `MacArgsEqKind` that is either an AST expression (pre-lowering) or an AST literal (post-lowering). The downside is that the code is a bit more verbose in a few places (though it is also shorter in some others). The benefit is that it makes it much clearer what the possibilities are. Also, it removes one use of `TokenKind::Interpolated`, taking us a step closer to removing that variant, which will let us make `Token` impl `Copy` and remove many "handle Interpolated" code paths in the parser.

Things to note:

- Error messages have improved. Messages like this:

  ```
  unexpected token: `"bug" + "found"`
  ```

  now say "unexpected expression", which makes more sense. Although arbitrary expressions can exist within tokens thanks to `TokenKind::Interpolated`, that's not obvious to anyone who doesn't know compiler internals.

- In `parse_mac_args_common`, we no longer need to collect tokens for the value expression.
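The new type can be sketched as below, with the pre-lowering and post-lowering states as separate variants. `Expr`, `Lit`, the `Ast`/`Lit` variant names, and the `lower` function are simplified, hypothetical stand-ins. Macro calls are assumed to be expanded before `lower` runs. The error text imitates the improved "unexpected expression" message described above.

```rust
/// Hypothetical, simplified expression type; real AST expressions are far richer.
#[derive(Clone, Debug, PartialEq)]
pub enum Expr {
    Lit(String),
    Binary(Box<Expr>, &'static str, Box<Expr>),
}

impl Expr {
    fn to_source(&self) -> String {
        match self {
            Expr::Lit(s) => s.clone(),
            Expr::Binary(l, op, r) => format!("{} {} {}", l.to_source(), op, r.to_source()),
        }
    }
}

#[derive(Clone, Debug, PartialEq)]
pub struct Lit {
    pub symbol: String,
}

/// The value after `=` in an attribute such as `#[doc = ...]`.
#[derive(Clone, Debug, PartialEq)]
pub enum MacArgsEqKind {
    /// Pre-lowering: any expression the parser accepted.
    Ast(Expr),
    /// Post-lowering: always a literal.
    Lit(Lit),
}

/// Hypothetical lowering step: only literal expressions survive.
pub fn lower(value: MacArgsEqKind) -> Result<MacArgsEqKind, String> {
    match value {
        MacArgsEqKind::Lit(lit) => Ok(MacArgsEqKind::Lit(lit)),
        MacArgsEqKind::Ast(Expr::Lit(symbol)) => Ok(MacArgsEqKind::Lit(Lit { symbol })),
        MacArgsEqKind::Ast(expr) => Err(format!("unexpected expression: `{}`", expr.to_source())),
    }
}
```

The type now says exactly which two states are possible. A general `Token` would also admit every other token kind.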

Using an obviously-placeholder syntax. An RFC would still be needed before this could have any chance at stabilization, and it might be removed at any point.

But I'd really like to have it in nightly at least to ensure it works well with try_trait_v2, especially as we refactor the traits.

This commit rearranges the `match`. The new code avoids testing for `MacArgs::Eq` twice, at the cost of repeating the `self.print_path()` call. I think this is worthwhile because it puts the `match` in a more standard and readable form.

Parse inner attributes on inline const block

According to https://github.com/rust-lang/rust/pull/84414#issuecomment-826150936, inner attributes are intended to be supported *"in all containers for statements (or some subset of statements)"*.

This PR adds inner attribute parsing and pretty-printing for inline const blocks (https://github.com/rust-lang/rust/issues/76001), which contain statements just like an unsafe block or a loop body.

```rust
let _ = const {
    #![allow(unused)]
    let x = ();
    x
};
```

It's only needed for macro expansion, not as a general element in the AST. This commit removes it, adds `NtOrTt` for the parser and macro expansion cases, and renames the variants in `NamedMatch` to better match the new type.
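Here is a simplified model of the resulting types. `NtOrTt` is what matching a single fragment produces, and `NamedMatch` is what is recorded per metavariable. These are assumptions for illustration, not copied from rustc: the variant names of `NamedMatch`, the tiny `Nonterminal` enum, the string-based `TokenTree`, and the `From` conversion.

```rust
/// Simplified stand-ins: real token trees and nonterminals carry spans and more.
#[derive(Clone, Debug, PartialEq)]
pub struct TokenTree(pub String);

#[derive(Clone, Debug, PartialEq)]
pub enum Nonterminal {
    NtExpr(String),
    NtIdent(String),
}

/// Result of matching one fragment: a parsed nonterminal, or a raw token tree
/// (as for `$x:tt`). A parser/expansion type, not an AST node.
#[derive(Clone, Debug, PartialEq)]
pub enum NtOrTt {
    Nt(Nonterminal),
    Tt(TokenTree),
}

/// What is recorded for one metavariable; variant names mirror `NtOrTt`.
#[derive(Clone, Debug, PartialEq)]
pub enum NamedMatch {
    MatchedSeq(Vec<NamedMatch>),
    MatchedTokenTree(TokenTree),
    MatchedNonterminal(Nonterminal),
}

impl From<NtOrTt> for NamedMatch {
    fn from(m: NtOrTt) -> Self {
        match m {
            NtOrTt::Nt(nt) => NamedMatch::MatchedNonterminal(nt),
            NtOrTt::Tt(tt) => NamedMatch::MatchedTokenTree(tt),
        }
    }
}
```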

Factor convenience functions out of main printer implementation

The pretty printer in rustc_ast_pretty has a section of methods commented "Convenience functions to talk to the printer". This PR pulls those out to a separate module. This leaves pp.rs with only the minimal API that is core to the pretty printing algorithm.

I found this separation to be helpful in https://github.com/dtolnay/prettyplease because it makes clear when changes are adding some fundamental new capability to the pretty printer algorithm vs just making it more convenient to call some already existing functionality.
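The split between a minimal core and a convenience layer can be sketched as follows. The names and signatures are assumptions for this model: `scan_string`, `scan_break`, `scan_begin`, `scan_end`, `word`, `space`, `ibox`, `cbox` and `end`. The core here only records tokens and does not run the line-breaking algorithm. Every convenience method is a one-line wrapper over the core API, which is what makes the separation useful.

```rust
#[derive(Clone, Debug, PartialEq)]
pub enum Token {
    Text(String),
    Break { blank_space: usize, offset: isize },
    Begin { offset: isize, consistent: bool },
    End,
}

#[derive(Debug, Default)]
pub struct Printer {
    pub tokens: Vec<Token>,
}

// Core API: the only entry points the layout algorithm needs.
// This model just records the tokens it is given.
impl Printer {
    pub fn scan_string(&mut self, s: String) {
        self.tokens.push(Token::Text(s));
    }
    pub fn scan_break(&mut self, blank_space: usize, offset: isize) {
        self.tokens.push(Token::Break { blank_space, offset });
    }
    pub fn scan_begin(&mut self, offset: isize, consistent: bool) {
        self.tokens.push(Token::Begin { offset, consistent });
    }
    pub fn scan_end(&mut self) {
        self.tokens.push(Token::End);
    }
}

// Convenience layer: thin wrappers that only call the core API. In the PR these
// live in their own module; a second `impl` block stands in for that here.
impl Printer {
    pub fn word(&mut self, w: &str) {
        self.scan_string(w.to_string());
    }
    pub fn space(&mut self) {
        self.scan_break(1, 0);
    }
    pub fn ibox(&mut self, indent: isize) {
        self.scan_begin(indent, false);
    }
    pub fn cbox(&mut self, indent: isize) {
        self.scan_begin(indent, true);
    }
    pub fn end(&mut self) {
        self.scan_end();
    }
}
```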

The `print_expr` method already places an `ibox(INDENT_UNIT)` around every expr that gets printed. Some exprs were then using `self.head` inside of that, which does its own `cbox(INDENT_UNIT)`, resulting in two levels of indentation:

    while true {
            stuff;
        }

This commit fixes those cases to produce the expected single level of indentation within every expression containing a block.

    while true {
        stuff;
    }

Previously the pretty printer would always compute indentation relative to whatever column a block begins at, like this:

    fn demo(arg1: usize,
            arg2: usize);

This is never the thing to do in the dominant contemporary Rust style. Rustfmt's default and the style used by the vast majority of Rust codebases is block indentation:

    fn demo(
        arg1: usize,
        arg2: usize,
    );

where every indentation level is a multiple of 4 spaces and each level is indented relative to the indentation of the previous line, not the position that the block starts in.
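The difference between the two layouts can be reproduced with two small formatting functions. They are hypothetical helpers that hard-code the layout for a function signature and do not use the pretty printer's box/break algorithm. `INDENT_UNIT` is assumed to be 4, matching the text.

```rust
pub const INDENT_UNIT: usize = 4;

/// Visual indentation: continuation lines align with the column after `(`.
pub fn visual(name: &str, params: &[&str]) -> String {
    let prefix = format!("fn {}(", name);
    let pad = " ".repeat(prefix.len());
    let mut out = prefix;
    for (i, param) in params.iter().enumerate() {
        if i > 0 {
            out.push_str(",\n");
            out.push_str(&pad);
        }
        out.push_str(param);
    }
    out.push_str(");");
    out
}

/// Block indentation: one parameter per line, one `INDENT_UNIT` deeper than the
/// line containing `fn`, with a trailing comma and `);` back at the base level.
pub fn block(indent: usize, name: &str, params: &[&str]) -> String {
    let base = " ".repeat(indent);
    let inner = " ".repeat(indent + INDENT_UNIT);
    let mut out = format!("{}fn {}(\n", base, name);
    for param in params {
        out.push_str(&inner);
        out.push_str(param);
        out.push_str(",\n");
    }
    out.push_str(&base);
    out.push_str(");");
    out
}
```

With block indentation, the depth of each line depends only on the previous line's indentation. It does not depend on how long the function name happens to be.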

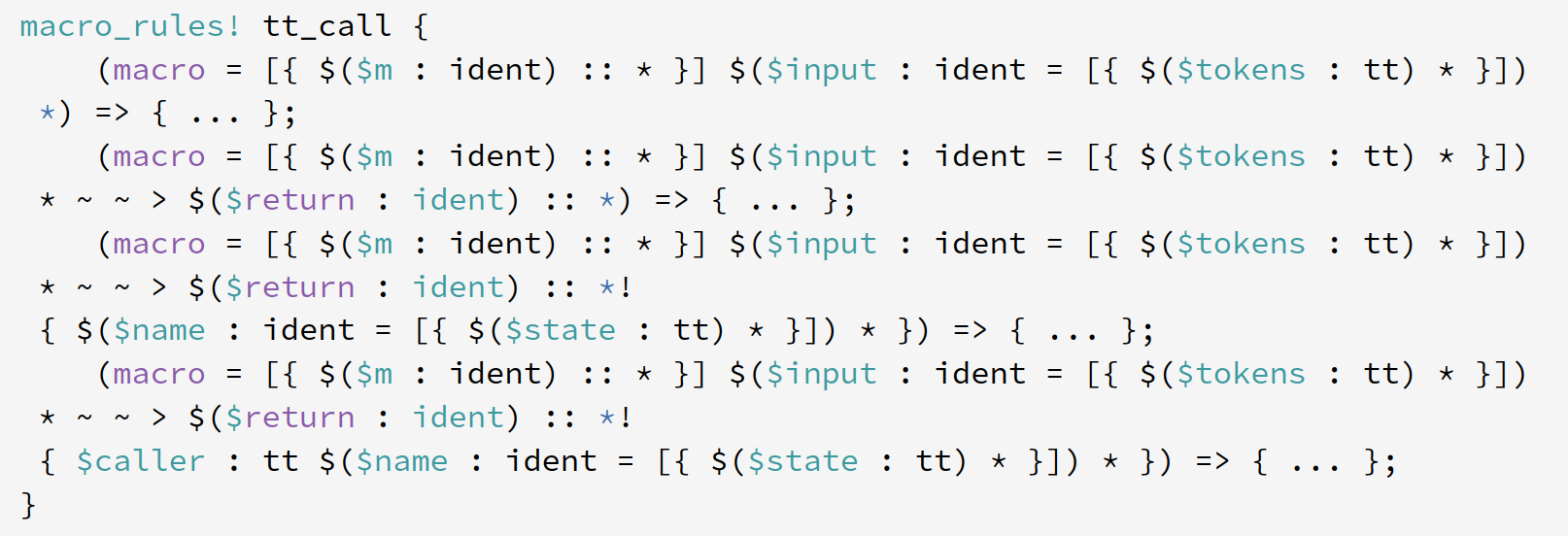

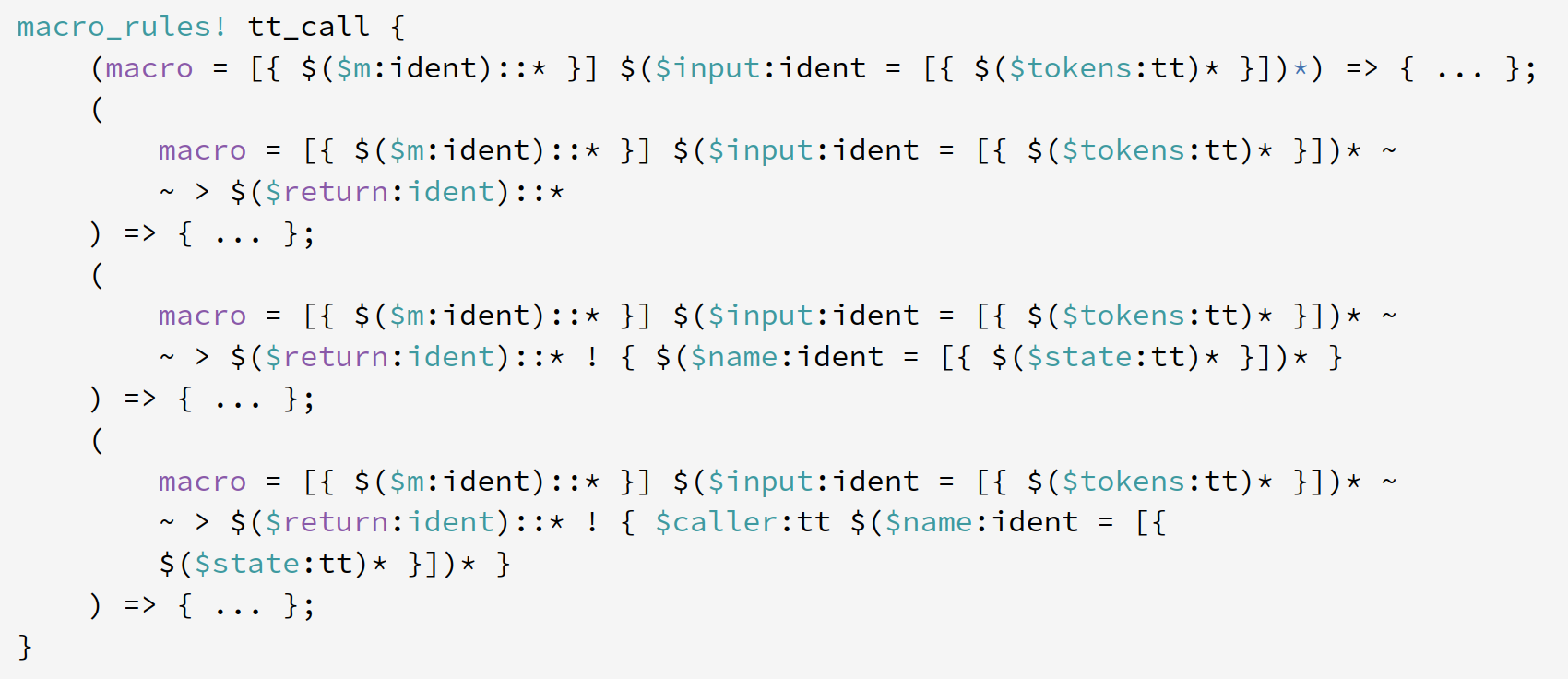

Render more readable macro matcher tokens in rustdoc

Follow-up to #92334.

This PR lifts some of the token rendering logic from https://github.com/dtolnay/prettyplease into rustdoc. As a result, even the matchers for which no source code snippet is available (because they are macro-generated, or for any other reason) follow some baseline good assumptions about where spaces are appropriate between the tokens in the macro matcher.

The below screenshots show an example of the difference using one of the gnarliest macros I could find. Some things to notice:

- In the **before**, notice how a couple places break in between `$(....)`↵`*`, which is just about the worst possible place that it could break.

- In the **before**, the lines that wrapped are weirdly indented by 1 space relative to column 0. In the **after**, we use the typical way of block indenting in Rust syntax, which is to put the open/close delimiters on their own line and indent their contents by 4 spaces relative to the previous line (so 8 spaces relative to column 0, because the matcher itself is indented by 4 relative to the `macro_rules` header).

- In the **after**, macro_rules metavariables like `$tokens:tt` are kept together, which is how just about everybody writing Rust today writes them.

## Before

## After

r? `@camelid`
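A much-simplified spacing heuristic in the spirit of the rules described above might look like this. It is hypothetical and is not the logic that rustdoc or prettyplease actually use. Matchers are given as pre-split token strings, and only a handful of cases are handled:

- no space after `$` or an opening delimiter;
- no space before a closing delimiter or `,`;
- no space on either side of `:`;
- no space between a repetition's `)` or separator and its `*`, `+`, or `?`.

```rust
/// Decides whether a space belongs between two adjacent matcher tokens (simplified).
fn needs_space(prev: &str, next: &str) -> bool {
    // `$name`, `(inner`, `name:frag`: glue to what follows.
    if matches!(prev, "$" | "(" | "[" | "{" | ":") {
        return false;
    }
    // `inner)`, `name:`, `a,`: glue to what precedes.
    if matches!(next, ")" | "]" | "}" | ":" | ",") {
        return false;
    }
    // `$(...)*` and `$(...),*`: never split the repetition operator off.
    if matches!(next, "*" | "+" | "?") && matches!(prev, ")" | "]" | "}" | ",") {
        return false;
    }
    true
}

/// Joins matcher tokens, inserting a space only where `needs_space` allows it.
pub fn render_matcher(tokens: &[&str]) -> String {
    let mut out = String::new();
    for (i, tok) in tokens.iter().enumerate() {
        if i > 0 && needs_space(tokens[i - 1], tok) {
            out.push(' ');
        }
        out.push_str(tok);
    }
    out
}
```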

`PrintStackElem`s with `pbreak = PrintStackBreak::Fits` always carried a meaningless value `offset = 0`. We can combine the two types `PrintStackElem` and `PrintStackBreak` into one `PrintFrame` enum that stores `offset` only for `Broken` frames.
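The refactoring can be modeled as follows. `PrintStackElem`, `PrintStackBreak`, and `PrintFrame` are the names from the commit message. The `Breaks` enum, the payload of `Broken`, and the `offset` helper are assumptions for illustration.

```rust
#[derive(Clone, Copy, Debug, PartialEq)]
pub enum Breaks {
    Consistent,
    Inconsistent,
}

// Before (modeled): `offset` was always 0 whenever `pbreak` was `Fits`.
#[allow(dead_code)]
pub enum PrintStackBreak {
    Fits,
    Broken(Breaks),
}

#[allow(dead_code)]
pub struct PrintStackElem {
    pub offset: isize,
    pub pbreak: PrintStackBreak,
}

// After: the offset exists only on frames where it is meaningful.
#[derive(Clone, Copy, Debug, PartialEq)]
pub enum PrintFrame {
    Fits,
    Broken { offset: isize, breaks: Breaks },
}

impl PrintFrame {
    /// Hypothetical helper: the indentation offset, if this frame is broken.
    pub fn offset(self) -> Option<isize> {
        match self {
            PrintFrame::Fits => None,
            PrintFrame::Broken { offset, .. } => Some(offset),
        }
    }
}
```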