This means we stop supporting the case where a locally defined trait has only a single impl so we can always use that impl (see nested-tait-inference.rs).

by using an opaque type obligation to bubble up comparisons between opaque types and other types

Also uses proper obligation causes so that the body id works, because out of some reason nll uses body ids for logic instead of just diagnostics.

Return an indexmap in `all_local_trait_impls` query

The data structure previously used here required that `DefId` be `Ord`. As part of #90317, we do not want `DefId` to implement `Ord`.

debuginfo: Make sure that type names for closure and generator environments are unique in debuginfo.

Before this change, closure/generator environments coming from different instantiations of the same generic function were all assigned the same name even though they were distinct types with potentially different data layout. Now we append the generic arguments of the originating function to the type name.

This commit also emits `{closure_env#0}` as the name of these types in order to disambiguate them from the accompanying closure function (which keeps being called `{closure#0}`). Previously both were assigned the same name.

NOTE: Changing debuginfo names like this can break pretty printers and other debugger plugins. I think it's OK in this particular case because the names we are changing were ambiguous anyway. In general though it would be great to have a process for doing changes like these.

Fix two incorrect "it's" (typos in comments)

Found one of these while reading the documentation online. The other came up because it's in the same file.

Add missing | between print options

The help text for the newly stabilized print option is missing a | between stack-protector-strategies and link-args.

Compiler panics should be rare - when they do occur, we want the report

filed by the user to contain as much information as possible. This is

especially important when the panic is due to an incremental compilation

bug, since we may not have enough information to reproduce it.

This PR sets `RUST_BACKTRACE=full` inside `rustc` if the user has not

explicitly set `RUST_BACKTRACE`. This is more verbose than

`RUST_BACKTRACE=1`, but this may make it easier to debug incremental

compilation issues. Users who find this too verbose can still manually

set `RUST_BACKTRACE` before invoking the compiler.

This only affects `rustc` (and any tool using `rustc_driver::install_ice_hook`).

It does *not* affect any user crates or the standard library -

backtraces will continue to be off by default in any application

*compiled* by rustc.

Make dead code check a query.

Dead code check is run for each invocation of the compiler, even if no modifications were involved.

This PR makes dead code check a query keyed on the module. This allows to skip the check when a module has not changed.

To perform this, a query `live_symbols_and_ignored_derived_traits` is introduced to encapsulate the global analysis of finding live symbols. The second query `check_mod_deathness` outputs diagnostics for each module based on this first query's results.

Currently, it can be `None` if the conversion from `OsString` fails, in

which case all searches will skip over the `SearchPathFile`.

The commit changes things so that the `SearchPathFile` just doesn't get

created in the first place. Same behaviour, but slightly simpler code.

Continue work on associated const equality

This actually implements some more complex logic for assigning associated consts to values.

Inside of projection candidates, it now defers to a separate function for either consts or

types. To reduce amount of code, projections are now generic over T, where T is either a Type or

a Const. I can add some comments back later, but this was the fastest way to implement it.

It also now finds the correct type of consts in type_of.

---

The current main TODO is finding the const of the def id for the LeafDef.

Right now it works if the function isn't called, but once you use the trait impl with the bound it fails inside projection.

I was hoping to get some help in getting the `&'tcx ty::Const<'tcx>`, in addition to a bunch of other `todo!()`s which I think may not be hit.

r? `@oli-obk`

Updates #92827

This code and comment appear to be out of date.

`CrateLocator::find_library_crate` is the only caller of this function

and it handles rlib vs dylib overlap itself (see

`CrateLocator::extract_lib`) after inspecting all the files present, so

it doesn't need to see them in any particular order.

Enable combining `+crt-static` and `relocation-model=pic` on `x86_64-unknown-linux-gnu`

Modern `gcc` versions support `-static-pie`, and `rustc` will already fall-back to `-static` if the local `gcc` is too old (and hence this change is optimistic rather than absolute). This brings the `-musl` and `-gnu` targets to feature compatibility (albeit with different default settings).

Of note a `-static` or `-static-pie` binary based on glibc that uses NSS-backed functions (`gethostbyname` or `getpwuid` etc.) need to have access to the `libnss_X.so.2` libraries and any of their dynamic dependencies.

I wasn't sure about the `# only`/`# ignore` changes (I've not got a `gnux32` toolchain to test with hence not also enabling `-static-pie` there).

Disable drop range analysis

The previous PR, #93165, still performed the drop range analysis despite ignoring the results. Unfortunately, there were ICEs in the analysis as well, so some packages failed to build (see the issue #93197 for an example). This change further disables the analysis and just provides dummy results in that case.

Before this change, closure/generator environments coming from different

instantiations of the same generic function were all assigned the same

name even though they were distinct types with potentially different data

layout. Now we append the generic arguments of the originating function

to the type name.

This commit also emits '{closure_env#0}' as the name of these types in

order to disambiguate them from the accompanying closure function

'{closure#0}'. Previously both were assigned the same name.

Rollup of 9 pull requests

Successful merges:

- #91343 (Fix suggestion to slice if scrutinee is a `Result` or `Option`)

- #93019 (If an integer is entered with an upper-case base prefix (0Xbeef, 0O755, 0B1010), suggest to make it lowercase)

- #93090 (`impl Display for io::ErrorKind`)

- #93456 (Remove an unnecessary transmute from opaque::Encoder)

- #93492 (Hide failed command unless in verbose mode)

- #93504 (kmc-solid: Increase the default stack size)

- #93513 (Allow any pretty printed line to have at least 60 chars)

- #93532 (Update books)

- #93533 (Update cargo)

Failed merges:

r? `@ghost`

`@rustbot` modify labels: rollup

Allow any pretty printed line to have at least 60 chars

Follow-up to #93155. The rustc AST pretty printer has a tendency to get stuck in "vertical smear mode" when formatting highly nested code, where it puts a linebreak at *every possible* linebreak opportunity once the indentation goes beyond the pretty printer's target line width:

```rust

...

((&([("test"

as

&str)]

as

[&str; 1])

as

&[&str; 1]),

(&([]

as

[ArgumentV1; 0])

as

&[ArgumentV1; 0]))

...

```

```rust

...

[(1

as

i32),

(2

as

i32),

(3

as

i32)]

as

[i32; 3]

...

```

This is less common after #93155 because that PR greatly reduced the total amount of indentation, but the "vertical smear mode" failure mode is still just as present when you have deeply nested modules, functions, or trait impls, such as in the case of macro-expanded code from `-Zunpretty=expanded`.

Vertical smear mode is never the best way to format highly indented code though. It does not prevent the target line width from being exceeded, and it produces output that is less readable than just a longer line.

This PR makes the pretty printing algorithm allow a minimum of 60 chars on every line independent of indentation. So as code gets more indented, the right margin eventually recedes to make room for formatting without vertical smear.

```console

├─────────────────────────────────────┤

├─────────────────────────────────────┤

├─────────────────────────────────────┤

├───────────────────────────────────┤

├─────────────────────────────────┤

├───────────────────────────────┤

├─────────────────────────────┤

├───────────────────────────┤

├───────────────────────────┤

├───────────────────────────┤

├───────────────────────────┤

├───────────────────────────┤

├─────────────────────────────┤

├───────────────────────────────┤

├─────────────────────────────────┤

├───────────────────────────────────┤

├─────────────────────────────────────┤

```

If an integer is entered with an upper-case base prefix (0Xbeef, 0O755, 0B1010), suggest to make it lowercase

The current error for this case isn't really great, it just complains about the whole thing past the `0` being an invalid suffix.

rustc_errors: only box the `diagnostic` field in `DiagnosticBuilder`.

I happened to need to do the first change (replacing `allow_suggestions` with equivalent functionality on `Diagnostic` itself) as part of a larger change, and noticed that there's only two fields left in `DiagnosticBuilderInner`.

So with this PR, instead of a single pointer, `DiagnosticBuilder` is two pointers, which should work just as well for passing *it* by value (and may even work better wrt some operations, though probably not by much).

But anything that was already taking advantage of `DiagnosticBuilder` being a single pointer, and wrapping it further (e.g. `Result<T, DiagnosticBuilder>` w/ non-ZST `T`), ~~will probably see a slowdown~~, so I want to do a perf run before even trying to propose this.

Check that `#[rustc_must_implement_one_of]` is applied to a trait

`#[rustc_must_implement_one_of]` only makes sense when applied to a trait, so it's sensible to emit an error otherwise.

Store def_id_to_hir_id as variant in hir_owner.

If hir_owner is Owner(_), the LocalDefId is pointing to an owner, so the ItemLocalId is 0.

If the HIR node does not exist, we store Phantom.

Otherwise, we store the HirId associated to the LocalDefId.

Related to #89278

r? `@oli-obk`

Switch pretty printer to block-based indentation

This PR backports 401d60c042 from the `prettyplease` crate into `rustc_ast_pretty`.

A before and after:

```diff

- let res =

- ((::alloc::fmt::format as

- for<'r> fn(Arguments<'r>) -> String {format})(((::core::fmt::Arguments::new_v1

- as

- fn(&[&'static str], &[ArgumentV1]) -> Arguments {Arguments::new_v1})((&([("test"

- as

- &str)]

- as

- [&str; 1])

- as

- &[&str; 1]),

- (&([]

- as

- [ArgumentV1; 0])

- as

- &[ArgumentV1; 0]))

- as

- Arguments))

- as String);

+ let res =

+ ((::alloc::fmt::format as

+ for<'r> fn(Arguments<'r>) -> String {format})(((::core::fmt::Arguments::new_v1

+ as

+ fn(&[&'static str], &[ArgumentV1]) -> Arguments {Arguments::new_v1})((&([("test"

+ as &str)] as [&str; 1]) as

+ &[&str; 1]),

+ (&([] as [ArgumentV1; 0]) as &[ArgumentV1; 0])) as

+ Arguments)) as String);

```

Previously the pretty printer would compute indentation always relative to whatever column a block begins at, like this:

```rust

fn demo(arg1: usize,

arg2: usize);

```

This is never the thing to do in the dominant contemporary Rust style. Rustfmt's default and the style used by the vast majority of Rust codebases is block indentation:

```rust

fn demo(

arg1: usize,

arg2: usize,

);

```

where every indentation level is a multiple of 4 spaces and each level is indented relative to the indentation of the previous line, not the position that the block starts in.

By itself this PR doesn't get perfect formatting in all cases, but it is the smallest possible step in clearly the right direction. More backports from `prettyplease` to tune the ibox/cbox indent levels around various AST node types are upcoming.

The `print_expr` method already places an `ibox(INDENT_UNIT)` around

every expr that gets printed. Some exprs were then using `self.head`

inside of that, which does its own `cbox(INDENT_UNIT)`, resulting in two

levels of indentation:

while true {

stuff;

}

This commit fixes those cases to produce the expected single level of

indentation within every expression containing a block.

while true {

stuff;

}

Previously the pretty printer would compute indentation always relative

to whatever column a block begins at, like this:

fn demo(arg1: usize,

arg2: usize);

This is never the thing to do in the dominant contemporary Rust style.

Rustfmt's default and the style used by the vast majority of Rust

codebases is block indentation:

fn demo(

arg1: usize,

arg2: usize,

);

where every indentation level is a multiple of 4 spaces and each level

is indented relative to the indentation of the previous line, not the

position that the block starts in.

Create `core::fmt::ArgumentV1` with generics instead of fn pointer

Split from (and prerequisite of) #90488, as this seems to have perf implication.

`@rustbot` label: +T-libs

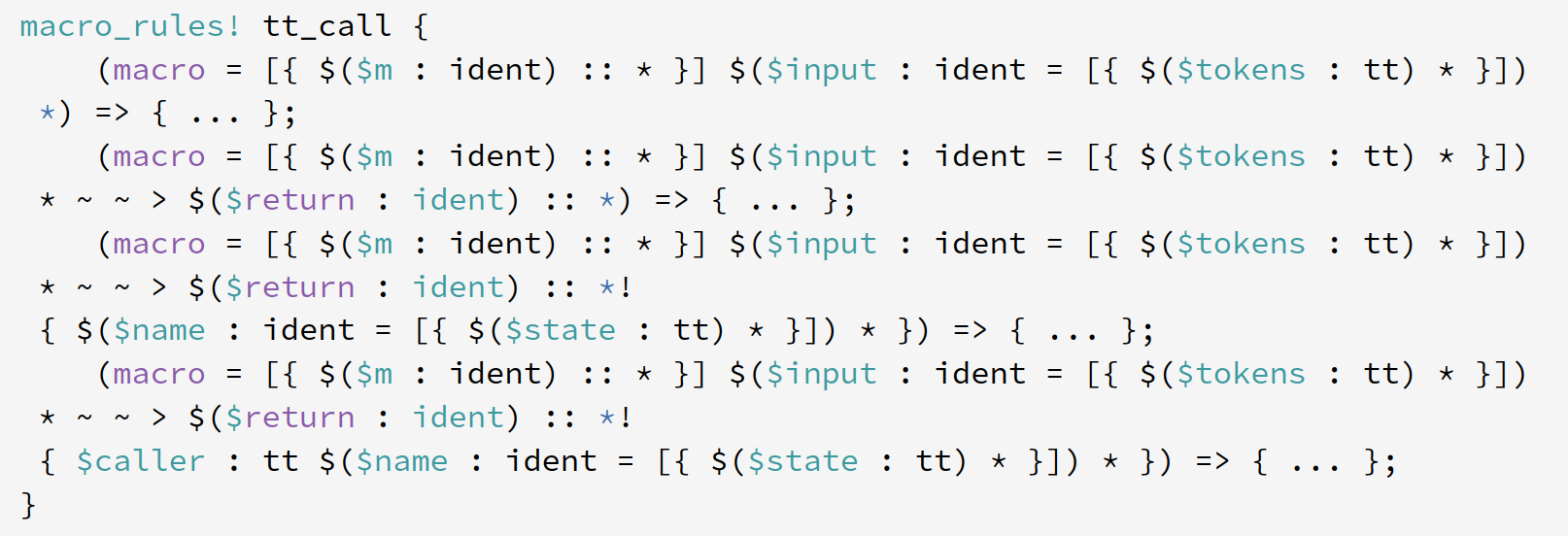

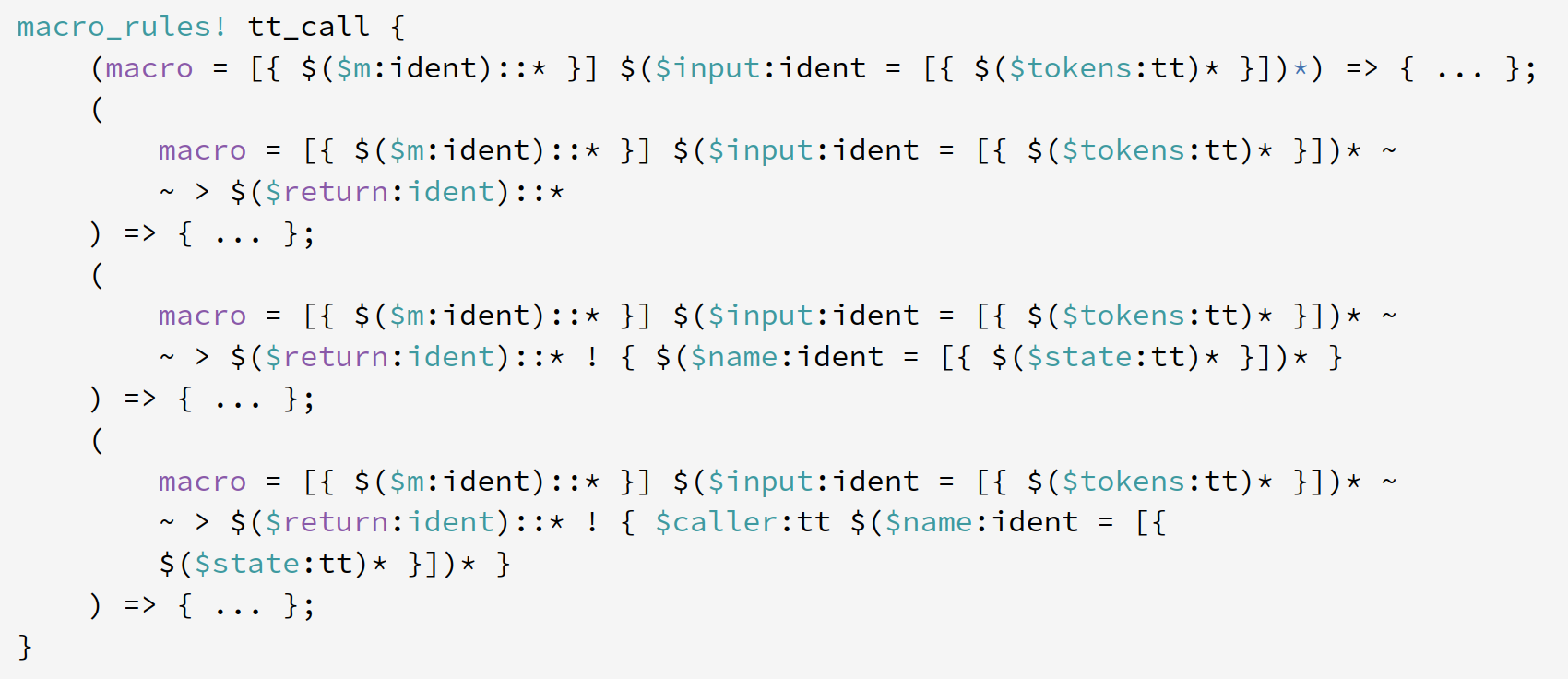

Render more readable macro matcher tokens in rustdoc

Follow-up to #92334.

This PR lifts some of the token rendering logic from https://github.com/dtolnay/prettyplease into rustdoc so that even the matchers for which a source code snippet is not available (because they are macro-generated, or any other reason) follow some baseline good assumptions about where the tokens in the macro matcher are appropriate to space.

The below screenshots show an example of the difference using one of the gnarliest macros I could find. Some things to notice:

- In the **before**, notice how a couple places break in between `$(....)`↵`*`, which is just about the worst possible place that it could break.

- In the **before**, the lines that wrapped are weirdly indented by 1 space of indentation relative to column 0. In the **after**, we use the typical way of block indenting in Rust syntax which is put the open/close delimiters on their own line and indent their contents by 4 spaces relative to the previous line (so 8 spaces relative to column 0, because the matcher itself is indented by 4 relative to the `macro_rules` header).

- In the **after**, macro_rules metavariables like `$tokens:tt` are kept together, which is how just about everybody writing Rust today writes them.

## Before

## After

r? `@camelid`

Rename _args -> args in format_args expansion

As observed in https://github.com/rust-lang/rust/pull/91359#discussion_r786058960, prior to that PR this variable was sometimes never used, such as in the case of:

```rust

println!("");

// used to expand to:

::std::io::_print(

::core::fmt::Arguments::new_v1(

&["\n"],

&match () {

_args => [],

},

),

);

```

so the leading underscore in `_args` was used to suppress an unused variable lint. However after #91359 the variable is always used when present, as the unused case would instead expand to:

```rust

::std::io::_print(::core::fmt::Arguments::new_v1(&["\n"], &[]));

```

Add note suggesting that predicate may be satisfied, but is not `const`

Not sure if we should be printing this in addition to, or perhaps _instead_ of the help message:

```

help: the trait `~const Add` is not implemented for `NonConstAdd`

```

Also added `ParamEnv::is_const` and `PolyTraitPredicate::is_const_if_const` and, in a separate commit, used those in other places instead of `== hir::Constness::Const`, etc.

r? ````@fee1-dead````

remove unused `jemallocator` crate

When it was noticed that the rustc binary wasn't actually using jemalloc via `#[global_allocator]` and that was removed, the dependency remained.

Tests pass locally with a `jemalloc = true` build, but I'll trigger a try build to ensure I haven't missed an edge-case somewhere.

r? ```@ghost``` until that completes

Add `intrinsics::const_deallocate`

Tracking issue: #79597

Related: #91884

This allows deallocation of a memory allocated by `intrinsics::const_allocate`. At the moment, this can be only used to reduce memory usage, but in the future this may be useful to detect memory leaks (If an allocated memory remains after evaluation, raise an error...?).

Rollup of 10 pull requests

Successful merges:

- #92611 (Add links to the reference and rust by example for asm! docs and lints)

- #93158 (wasi: implement `sock_accept` and enable networking)

- #93239 (Add os::unix::net::SocketAddr::from_path)

- #93261 (Some unwinding related cg_ssa cleanups)

- #93295 (Avoid double panics when using `TempDir` in tests)

- #93353 (Unimpl {Add,Sub,Mul,Div,Rem,BitXor,BitOr,BitAnd}<$t> for Saturating<$t>)

- #93356 (Edit docs introduction for `std::cmp::PartialOrd`)

- #93375 (fix typo `documenation`)

- #93399 (rustbuild: Fix compiletest warning when building outside of root.)

- #93404 (Fix a typo from #92899)

Failed merges:

r? `@ghost`

`@rustbot` modify labels: rollup

Add links to the reference and rust by example for asm! docs and lints

These were previously removed in #91728 due to broken links.

cc ``@ehuss`` since this updates the rust-by-example submodule

Fix debuginfo for pointers/references to unsized types

This PR makes the compiler emit fat pointer debuginfo in all cases. Before, we sometimes got thin-pointer debuginfo, making it impossible to fully interpret the pointed to memory in debuggers. The code is actually cleaner now, especially around generation of trait object pointer debuginfo.

Fixes https://github.com/rust-lang/rust/issues/92718

~~Blocked on https://github.com/rust-lang/rust/pull/92729.~~

Suggest tuple-parentheses for enum variants

This follows on from #86493 / #86481, making the parentheses suggestion. To summarise, given the following code:

```rust

fn f() -> Option<(i32, i8)> {

Some(1, 2)

}

```

The current output is:

```

error[E0061]: this enum variant takes 1 argument but 2 arguments were supplied

--> b.rs:2:5

|

2 | Some(1, 2)

| ^^^^ - - supplied 2 arguments

| |

| expected 1 argument

error: aborting due to previous error

For more information about this error, try `rustc --explain E0061`.

```

With this change, `rustc` will now suggest parentheses when:

- The callee is expecting a single tuple argument

- The number of arguments passed matches the element count in the above tuple

- The arguments' types match the tuple's fields

```

error[E0061]: this enum variant takes 1 argument but 2 arguments were supplied

--> b.rs:2:5

|

2 | Some(1, 2)

| ^^^^ - - supplied 2 arguments

|

help: use parentheses to construct a tuple

|

2 | Some((1, 2))

| + +

```

LLDB does not seem to see fields if they are marked with DW_AT_artificial

which breaks pretty printers that use these fields for decoding fat pointers.

Only traverse attrs once while checking for coherence override attributes

In coherence, while checking for negative impls override attributes: only traverse the `DefId`s' attributes once.

This PR is an easy way to get back some of the small perf loss in #93175

Clarify the `usage-of-qualified-ty` error message.

I found this message confusing when I encountered it. This commit makes

it clearer that you have to import the unqualified type yourself.

r? `@lcnr`

Introduce a limit to Levenshtein distance computation

Incorporate distance limit from `find_best_match_for_name` directly into

Levenshtein distance computation.

Use the string size difference as a lower bound on the distance and exit

early when it exceeds the specified limit.

After finding a candidate within a limit, lower the limit further to

restrict the search space.

Add test for stable hash uniqueness of adjacent field values

This PR adds a simple test to check that stable hash will produce a different hash if the order of two values that have the same combined bit pattern changes.

r? `@the8472`

It's simply a binary thing to allow different behaviour for `Copy` vs

`!Copy` types. The new code makes this much clearer; I was scratching my

head over the old code for some time.

If hir_owner is Owner(_), the LocalDefId is pointing to an owner, so the ItemLocalId is 0.

If the HIR node does not exist, we store Phantom.

Otherwise, we store the HirId associated to the LocalDefId.

Ignore unwinding edges when checking for unconditional recursion

The unconditional recursion lint determines if all execution paths

eventually lead to a self-recursive call.

The implementation always follows unwinding edges which limits its

practical utility. For example, it would not lint function `f` because a

call to `g` might unwind. It also wouldn't lint function `h` because an

overflow check preceding the self-recursive call might unwind:

```rust

pub fn f() {

g();

f();

}

pub fn g() { /* ... */ }

pub fn h(a: usize) {

h(a + 1);

}

```

To avoid the issue, assume that terminators that might continue

execution along non-unwinding edges do so.

Fixes#78474.

The unconditional recursion lint determines if all execution paths

eventually lead to a self-recursive call.

The implementation always follows unwinding edges which limits its

practical utility. For example, it would not lint function `f` because a

call to `g` might unwind. It also wouldn't lint function `h` because an

overflow check preceding the self-recursive call might unwind:

```rust

pub fn f() {

g();

f();

}

pub fn g() { /* ... */ }

pub fn h(a: usize) {

h(a + 1);

}

```

To avoid the issue, assume that terminators that might continue

execution along non-unwinding edges do so.

Fix the unsoundness in the `early_otherwise_branch` mir opt pass

Closes#78496 .

This change is a significant rewrite of much of the pass. Exactly what it does is documented in the source file (with ascii art!), and all the changes that are made to the MIR that are not trivially sound are carefully documented. That being said, this is my first time touching MIR, so there are probably some invariants I did not know about that I broke.

This version of the optimization is also somewhat more flexible than the original; for example, we do not care how or where the value on which the parent is switching is computed. There is no requirement that any types be the same. This could be made even more flexible in the future by allowing a wider range of statements in the bodies of `BBC, BBD` (as long as they are all the same of course). This should be a good first step though.

Probably needs a perf run.

r? `@oli-obk` who reviewed things the last time this was touched

Incorporate distance limit from `find_best_match_for_name` directly into

Levenshtein distance computation.

Use the string size difference as a lower bound on the distance and exit

early when it exceeds the specified limit.

After finding a candidate within a limit, lower the limit further to

restrict the search space.

rustdoc: Pre-calculate traits that are in scope for doc links

This eliminates one more late use of resolver (part of #83761).

At early doc link resolution time we go through parent modules of items from the current crate, reexports of items from other crates, trait items, and impl items collected by `collect-intra-doc-links` pass, determine traits that are in scope in each such module, and put those traits into a map used by later rustdoc passes.

r? `@jyn514`

Fix ICE when parsing bad turbofish with lifetime argument

Generalize conditions where we suggest adding the turbofish operator, so we don't ICE during code like

```rust

fn foo() {

A<'a,>

}

```

but instead suggest adding a turbofish.

Fixes#93282

Remove deduplication of early lints

We already have a general mechanism for deduplicating reported

lints, so there's no need to have an additional one for early lints

specifically. This allows us to remove some `PartialEq` impls.

Store a `Symbol` instead of an `Ident` in `AssocItem`

This is the same idea as #92533, but for `AssocItem` instead

of `VariantDef`/`FieldDef`.

With this change, we no longer have any uses of

`#[stable_hasher(project(...))]`

Remove ordering traits from `OutlivesConstraint`

In two cases where this ordering was used, I've replaced the sorting to use a key that does not rely on `DefId` being `Ord`. This is part of #90317. If I understand correctly, whether this is correct depends on whether the `RegionVid`s are tracked during incremental compilation. But I might be mistaken in this approach. cc `@cjgillot`

Use error-on-mismatch policy for PAuth module flags.

This agrees with Clang, and avoids an error when using LTO with mixed

C/Rust. LLVM considers different behaviour flags to be a mismatch,

even when the flag value itself is the same.

This also makes the flag setting explicit for all uses of

LLVMRustAddModuleFlag.

----

I believe that this fixes#92885, but have only reproduced it locally on Linux hosts so cannot confirm that it fixes the issue as reported.

I have not included a test for this because it is covered by an existing test (`src/test/run-make-fulldeps/cross-lang-lto-clang`). It is not without its problems, though:

* The test requires Clang and `--run-clang-based-tests-with=...` to run, and this is not the case on the CI.

* Any test I add would have a similar requirement.

* With this patch applied, the test gets further, but it still fails (for other reasons). I don't think that affects #92885.

Work around missing code coverage data causing llvm-cov failures

If we do not add code coverage instrumentation to the `Body` of a

function, then when we go to generate the function record for it, we

won't write any data and this later causes llvm-cov to fail when

processing data for the entire coverage report.

I've identified two main cases where we do not currently add code

coverage instrumentation to the `Body` of a function:

1. If the function has a single `BasicBlock` and it ends with a

`TerminatorKind::Unreachable`.

2. If the function is created using a proc macro of some kind.

For case 1, this is typically not important as this most often occurs as

a result of function definitions that take or return uninhabited

types. These kinds of functions, by definition, cannot even be called so

they logically should not be counted in code coverage statistics.

For case 2, I haven't looked into this very much but I've noticed while

testing this patch that (other than functions which are covered by case

1) the skipped function coverage debug message is occasionally triggered

in large crate graphs by functions generated from a proc macro. This may

have something to do with weird spans being generated by the proc macro

but this is just a guess.

I think it's reasonable to land this change since currently, we fail to

generate *any* results from llvm-cov when a function has no coverage

instrumentation applied to it. With this change, we get coverage data

for all functions other than the two cases discussed above.

Fixes#93054 which occurs because of uncallable functions which shouldn't

have code coverage anyway.

I will open an issue for missing code coverage of proc macro generated

functions and leave a link here once I have a more minimal repro.

r? ``@tmandry``

cc ``@richkadel``

Move param count error emission to end of `check_argument_types`

The error emission here isn't exactly what is done in #92364, but replicating that is hard . The general move should make for a smaller diff.

Also included the `(usize, Ty, Ty)` to -> `Option<(Ty, Ty)>` commit.

r? ``@estebank``

Properly track `DepNode`s in trait evaluation provisional cache

Fixes#92987

During evaluation of an auto trait predicate, we may encounter a cycle.

This causes us to store the evaluation result in a special 'provisional

cache;. If we later end up determining that the type can legitimately

implement the auto trait despite the cycle, we remove the entry from

the provisional cache, and insert it into the evaluation cache.

Additionally, trait evaluation creates a special anonymous `DepNode`.

All queries invoked during the predicate evaluation are added as

outoging dependency edges from the `DepNode`. This `DepNode` is then

store in the evaluation cache - if a different query ends up reading

from the cache entry, it will also perform a read of the stored

`DepNode`. As a result, the cached evaluation will still end up

(transitively) incurring all of the same dependencies that it would

if it actually performed the uncached evaluation (e.g. a call to

`type_of` to determine constituent types).

Previously, we did not correctly handle the interaction between the

provisional cache and the created `DepNode`. Storing an evaluation

result in the provisional cache would cause us to lose the `DepNode`

created during the evaluation. If we later moved the entry from the

provisional cache to the evaluation cache, we would use the `DepNode`

associated with the evaluation that caused us to 'complete' the cycle,

not the evaluatoon where we first discovered the cycle. As a result,

future reads from the evaluation cache would miss some incremental

compilation dependencies that would have otherwise been added if the

evaluation was *not* cached.

Under the right circumstances, this could lead to us trying to force

a query with a no-longer-existing `DefPathHash`, since we were missing

the (red) dependency edge that would have caused us to bail out before

attempting forcing.

This commit makes the provisional cache store the `DepNode` create

during the provisional evaluation. When we move an entry from the

provisional cache to the evaluation cache, we create a *new* `DepNode`

that has dependencies going to *both* of the evaluation `DepNodes` we

have available. This ensures that cached reads will incur all of

the necessary dependency edges.

The previous PR, #93165, still performed the drop range analysis

despite ignoring the results. Unfortunately, there were ICEs in

the analysis as well, so some packages failed to build (see the

issue #93197 for an example). This change further disables the

analysis and just provides dummy results in that case.

This agrees with Clang, and avoids an error when using LTO with mixed

C/Rust. LLVM considers different behaviour flags to be a mismatch,

even when the flag value itself is the same.

This also makes the flag setting explicit for all uses of

LLVMRustAddModuleFlag.