Try fast_reject::simplify_type in coherence before doing full check

This is a reattempt at landing #69010 (by `@jonas-schievink`). The change adds a fast path to coherence checking that detects when there is no way for two types to unify, since full coherence checking can be somewhat expensive.
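As a rough illustration of the idea, here is a minimal sketch of a fast-reject check. The `Ty`, `SimplifiedType` and `simplify` names below are hypothetical stand-ins, not rustc's actual `fast_reject` API. Each type is reduced to its outermost constructor. If both sides reduce to different concrete constructors, they can never unify, so the expensive check can be skipped. Generic parameters reduce to `None`, meaning "might unify with anything".

```rust
// Hypothetical, heavily simplified type representation (not rustc's `Ty`).
#[derive(Debug, Clone, PartialEq, Eq)]
enum Ty {
    Bool,
    Int,
    Adt(&'static str, Vec<Ty>),
    Param(&'static str), // a generic parameter such as `T`
}

// The outermost constructor of a type, ignoring its arguments.
#[derive(Debug, Clone, Copy, PartialEq, Eq)]
enum SimplifiedType {
    Bool,
    Int,
    Adt(&'static str),
}

/// Returns `None` for types that could unify with anything (generic parameters).
fn simplify(ty: &Ty) -> Option<SimplifiedType> {
    match ty {
        Ty::Bool => Some(SimplifiedType::Bool),
        Ty::Int => Some(SimplifiedType::Int),
        Ty::Adt(name, _) => Some(SimplifiedType::Adt(*name)),
        Ty::Param(_) => None,
    }
}

/// Cheap pre-check: `true` means the two types definitely cannot unify,
/// so the full (expensive) overlap check can be skipped.
fn can_fast_reject(a: &Ty, b: &Ty) -> bool {
    match (simplify(a), simplify(b)) {
        (Some(sa), Some(sb)) => sa != sb,
        // At least one side is generic: fall back to the full check.
        _ => false,
    }
}

fn main() {
    let vec_int = Ty::Adt("Vec", vec![Ty::Int]);
    let option_t = Ty::Adt("Option", vec![Ty::Param("T")]);
    assert!(can_fast_reject(&vec_int, &option_t)); // different ADTs
    assert!(!can_fast_reject(&vec_int, &Ty::Param("T"))); // generic: full check
    assert!(!can_fast_reject(&vec_int, &Ty::Adt("Vec", vec![Ty::Bool]))); // same head
}
```

The check is deliberately conservative: it only ever answers "definitely disjoint" or "don't know", so it can never make coherence accept overlapping impls.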

This has a big effect on code generated by the [`windows`](https://github.com/microsoft/windows-rs) crate, which in some cases spends as much as 20% of compilation time in the `specialization_graph_of` query. In local benchmarks, this brought a compilation that previously took ~500 seconds down to ~380 seconds.

This is surely not going to make a difference on much smaller crates, so the question is whether it will have a negative impact. #69010 was closed because some of the perf suite crates did show small regressions.

Additional discussion of this issue is happening [here](https://rust-lang.zulipchat.com/#narrow/stream/247081-t-compiler.2Fperformance/topic/windows-rs.20perf).

Rename HIR UnOp variants

This renames the variants in HIR UnOp from

```rust
enum UnOp {
    UnDeref,
    UnNot,
    UnNeg,
}
```

to

```rust
enum UnOp {
    Deref,
    Not,
    Neg,
}
```

Motivations:

- This is more consistent with the rest of the code base, where most enum variants don't have a prefix.
- These variants are never used without the `UnOp` prefix, so the extra `Un` prefix doesn't help with readability. E.g. we don't have any `UnDeref`s in the code; we only have `UnOp::UnDeref`.
- MIR `UnOp` type variants don't have a prefix, so this is more consistent with MIR types.
- The "un" prefix reads like "inverse" or "reverse", so as a beginner in the rustc code base, when I see "UnDeref" what comes to my mind is something like `&*` instead of just `*`.
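With the new names, call sites read naturally because every use is already qualified by `UnOp::`. The snippet below uses its own stand-in `UnOp` and a hypothetical integer evaluator `eval`; neither is the real HIR type or any rustc function.

```rust
// Stand-in for the renamed HIR enum; the real one lives in rustc_hir.
#[derive(Debug, Clone, Copy, PartialEq, Eq)]
enum UnOp {
    Deref,
    Not,
    Neg,
}

// Hypothetical evaluator for integer operands, just to show call sites.
// `Deref` has no meaning for a plain integer, so it yields `None`.
fn eval(op: UnOp, operand: i64) -> Option<i64> {
    match op {
        UnOp::Deref => None,
        UnOp::Not => Some(!operand),
        UnOp::Neg => operand.checked_neg(),
    }
}

fn main() {
    assert_eq!(eval(UnOp::Neg, 5), Some(-5));
    assert_eq!(eval(UnOp::Not, 0), Some(-1));
    assert_eq!(eval(UnOp::Deref, 1), None);
}
```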

Rollup of 11 pull requests

Successful merges:

- #72209 (Add checking for no_mangle to unsafe_code lint)

- #80732 (Allow Trait inheritance with cycles on associated types take 2)

- #81697 (Add "every" as a doc alias for "all".)

- #81826 (Prefer match over combinators to make some Box methods inlineable)

- #81834 (Resolve typedef in HashMap lldb pretty-printer only if possible)

- #81841 ([rustbuild] Output rustdoc-json-types docs )

- #81849 (Expand the docs for ops::ControlFlow a bit)

- #81876 (parser: Fix panic in 'const impl' recovery)

- #81882 (⬆️ rust-analyzer)

- #81888 (Fix pretty printer macro_rules with semicolon.)

- #81896 (Remove outdated comment in windows' mutex.rs)

Failed merges:

r? `@ghost`

`@rustbot` modify labels: rollup

Allow Trait inheritance with cycles on associated types take 2

This reverts the revert of #79209 and fixes the ICEs that were triggered by that PR exposing some problems, which are addressed in #80648 and #79811.

For easier review, I'd suggest checking only the last commit; the first one is just a revert of the revert of #79209, which was already approved.

This could also be considered part of the actual fix for #79560, but I guess for that issue to be closed and fixed completely we would need to land #80648 and #79811 too.

r? `@nikomatsakis`

cc `@Aaron1011`

Improve SIMD type element count validation

Resolves rust-lang/stdsimd#53.

These changes are motivated by `stdsimd` moving in the direction of const generic vectors, e.g.:

```rust
#[repr(simd)]
struct SimdF32<const N: usize>([f32; N]);
```

This makes a few changes:

* Establishes a maximum SIMD lane count of 2^16 (65536). This value is arbitrary, but attempts to validate lane count before hitting potential errors in the backend. It's not clear what LLVM's maximum lane count is, but cranelift's appears to be much less than `usize::MAX`, at least.

* Expands some SIMD intrinsics to support arbitrary lane counts. This resolves the ICE in the linked issue.

* Attempts to catch invalid-sized vectors during typeck when possible.
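A minimal sketch of the lane-count rule described above, assuming a lower bound of one lane (inferred from the remark below that zero-length vectors produce errors) and the upper bound of 2^16. `validate_lane_count` and `SimdLaneError` are hypothetical names, not the compiler's actual functions.

```rust
/// Maximum lane count chosen in this PR (2^16); described as arbitrary.
const MAX_SIMD_LANES: u64 = 1 << 16;

#[derive(Debug, PartialEq, Eq)]
enum SimdLaneError {
    Zero,
    TooMany(u64),
}

/// Hypothetical helper mirroring the checks described above:
/// a vector must have at least one lane and at most `MAX_SIMD_LANES`.
fn validate_lane_count(lanes: u64) -> Result<u64, SimdLaneError> {
    match lanes {
        0 => Err(SimdLaneError::Zero),
        n if n > MAX_SIMD_LANES => Err(SimdLaneError::TooMany(n)),
        n => Ok(n),
    }
}

fn main() {
    assert_eq!(validate_lane_count(4), Ok(4));
    assert_eq!(validate_lane_count(65536), Ok(65536));
    assert_eq!(validate_lane_count(0), Err(SimdLaneError::Zero));
    assert_eq!(validate_lane_count(65537), Err(SimdLaneError::TooMany(65537)));
}
```

For a const-generic type like `SimdF32<N>`, `N` is only known after monomorphization, which is why this kind of check can't always run during typeck.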

Unresolved questions:

* Generic-length vectors can't be validated in typeck and are only validated after monomorphization while computing layout. This "works", but the errors simply bail out with no context beyond the name of the type. Should these errors instead return `LayoutError` or otherwise provide context in some way? As it stands, users of `stdsimd` could trivially produce monomorphization errors by making zero-length vectors.

cc `@bjorn3`

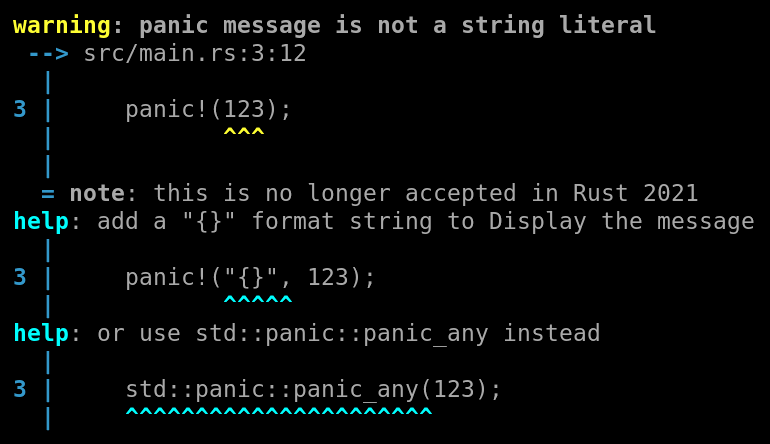

Add lint for `panic!(123)` which is not accepted in Rust 2021.

This extends the `panic_fmt` lint to warn for all cases where the first argument cannot be interpreted as a format string, as will happen in Rust 2021.

It suggests adding `"{}",` to format the message as a string. In the case of `std::panic!()`, it also suggests the recently stabilized `std::panic::panic_any()` function as an alternative.

It also renames the lint to `non_fmt_panic` to match the lint naming guidelines.
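The two suggested fixes produce different panic payloads, which matters to code that inspects the payload after `catch_unwind`. This sketch uses only stable `std::panic` APIs; `payload_of` is a hypothetical helper, and the demo assumes the default `panic=unwind` strategy.

```rust
use std::any::Any;
use std::panic;

// Runs `f`, expecting it to panic, and returns the panic payload.
fn payload_of(f: fn()) -> Box<dyn Any + Send> {
    panic::catch_unwind(f).expect_err("closure was expected to panic")
}

fn main() {
    // Silence the default hook so the demo panics don't print to stderr.
    panic::set_hook(Box::new(|_| {}));

    // Suggested fix 1: format the value, so the payload is a `String`.
    let formatted = payload_of(|| panic!("{}", 123));
    assert_eq!(formatted.downcast_ref::<String>().map(String::as_str), Some("123"));

    // Suggested fix 2: `panic_any` keeps the original value as the payload.
    let any = payload_of(|| panic::panic_any(123_i32));
    assert_eq!(any.downcast_ref::<i32>(), Some(&123));

    // Unregister the silent hook; the default hook is registered in its place.
    let _ = panic::take_hook();
}
```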

This is part of #80162.

r? `@estebank`

introduce future-compatibility warning for forbidden lint groups

We used to ignore `forbid(group)` scenarios completely. This changed in #78864, but that led to a number of regressions (#80988, #81218).

This PR introduces a future compatibility warning for the case where a group is forbidden but then an individual lint within that group is allowed. We now issue a FCW when we see the "allow", but permit it to take effect.

r? `@Mark-Simulacrum`

Add a new ABI to support cmse_nonsecure_call

This adds support for the `cmse_nonsecure_call` feature, making it possible to perform non-secure function calls.

See the discussion on Zulip [here](https://rust-lang.zulipchat.com/#narrow/stream/131828-t-compiler/topic/Support.20for.20callsite.20attributes/near/223054928).

This is a follow-up to #75810, which added `cmse_nonsecure_entry`. As with that PR, I assume that the changes are small enough not to require an RFC, but I don't mind writing one if needed 😃

I did not yet create a tracking issue, but if most of it is fine, I can create one and update the various files accordingly (they refer to the other tracking issue now).

On the Zulip chat, I believe `@jonas-schievink` volunteered to be a reviewer 💯

Upgrade Chalk

~~Blocked on rust-lang/chalk#670~~

~~Now blocked on rust-lang/chalk#680 and release~~

In addition to the straight upgrade, I also tried to fix some tests by properly returning variables and max universes in the solution. Unfortunately, this actually triggers the same perf problem that rustc traits code runs into in `canonicalizer`. Not sure what the root cause of this problem is, or why it's supposed to be solved in chalk.

r? `@nikomatsakis`

This commit adds a new ABI, selected via `extern "C-cmse-nonsecure-call"` on function pointers, so that the compiler applies the corresponding `cmse_nonsecure_call` callsite attribute.

For Armv8-M targets supporting TrustZone-M, this will perform a non-secure function call by saving, clearing and calling a non-secure function pointer using the BLXNS instruction.

See the page in the unstable book for details.

Signed-off-by: Hugues de Valon <hugues.devalon@arm.com>

Remove the remains of query categories

Back in October 2020, in #77830, `@cjgillot` removed the query category information from the profiler, but the actual definitions of which query was in which category remained, although unused.

Here I clean that up, to simplify the query definitions even further.

It's unfortunate that this loses all the context for `git blame`, ~~but I'm working on moving those query definitions into `rustc_query_system`, which will lose that context anyway.~~ EDIT: Might not work out.

The functional changes are in the first commit. The second one only changes the indentation.

Add visitors for checking #[inline]

Add visitors for checking #[inline] with struct field

Fix test for #[inline]

Add visitors for checking #[inline] with #[macro_export] macro

Add visitors for checking #[inline] without #[macro_export] macro

Add use alias with Visitor

Fix lint error

Reduce unnecessary variable

Co-authored-by: LingMan <LingMan@users.noreply.github.com>

Change error to warning

Add warning for checking field, arm with #[allow_internal_unstable]

Add name resolver

Formatting

Formatting

Fix error fixture

Add checking field, arm, macro def

Remove const_in_array_repeat

Fixes #80371. Fixes #81315. Fixes #80767. Fixes #75682.

I thought there might be some issue with `Repeats(_, 0)`, but if you increase the items in the array it still ICEs. I'm not sure if this is the best fix but it does fix the given issue.

2229: Fix issues with move closures and mutability

This PR fixes two issues when feature `capture_disjoint_fields` is used.

1. Can't mutate using a mutable reference

2. Move closures try to move value out through a reference.

To do so, we

1. Compute the mutability of the capture and store it as part of the `CapturedPlace` that is written in TypeckResults

2. Restrict capture precision. Note that this is temporary, to allow the feature to be used with move closures and ByValue captures, and might change depending on discussions with the lang team.

   - No Derefs are captured for ByValue captures, since that would result in a value behind a reference getting moved.
   - No projections are applied to raw pointers, since these require unsafe blocks. We capture them completely.
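The two precision restrictions can be modeled as "truncate the captured path at the first forbidden projection". This is a simplified sketch with hypothetical `Projection`, `ProjKind`, `BaseTy` and `restrict_precision` types; it is not rustc's actual `Place` representation or code.

```rust
/// Hypothetical, simplified model of a captured place's projections.
#[derive(Debug, Clone, Copy, PartialEq, Eq)]
enum ProjKind {
    Field(u32),
    Deref,
}

/// The type each projection is applied to (only what the rules need).
#[derive(Debug, Clone, Copy, PartialEq, Eq)]
enum BaseTy {
    Struct,
    Ref,
    RawPtr,
}

#[derive(Debug, Clone, Copy, PartialEq, Eq)]
struct Projection {
    kind: ProjKind,
    base: BaseTy,
}

#[derive(Debug, Clone, Copy, PartialEq, Eq)]
enum CaptureKind {
    ByValue,
    ByRef,
}

/// Truncates the place at the first projection the two rules forbid:
/// - no projections through raw pointers (capture the pointer itself);
/// - no derefs at all for by-value captures.
fn restrict_precision(mut projs: Vec<Projection>, kind: CaptureKind) -> Vec<Projection> {
    let cut = projs.iter().position(|p| {
        p.base == BaseTy::RawPtr || (kind == CaptureKind::ByValue && p.kind == ProjKind::Deref)
    });
    if let Some(i) = cut {
        projs.truncate(i);
    }
    projs
}

fn main() {
    use BaseTy::*;
    use ProjKind::*;
    // Models `(*x.0).1`, where `x.0` is a reference.
    let place = vec![
        Projection { kind: Field(0), base: Struct },
        Projection { kind: Deref, base: Ref },
        Projection { kind: Field(1), base: Struct },
    ];
    // A by-reference capture may keep the full path...
    assert_eq!(restrict_precision(place.clone(), CaptureKind::ByRef).len(), 3);
    // ...but a move closure stops before the deref and captures `x.0`.
    assert_eq!(restrict_precision(place, CaptureKind::ByValue).len(), 1);
}
```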

r? `@nikomatsakis`

Stabilize `unsigned_abs`

Resolves #74913.

This PR stabilizes the `i*::unsigned_abs()` method, which returns the absolute value of an integer _as its unsigned equivalent_. This has the advantage that it does not overflow on `i*::MIN`.
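A short example of the difference, assuming a toolchain that includes this stabilization. `i8::MIN` is -128 while `i8::MAX` is 127, so the signed absolute value cannot be represented, but the unsigned one can.

```rust
fn main() {
    // `abs()` would overflow here, so `checked_abs()` reports `None`.
    assert_eq!(i8::MIN.checked_abs(), None);
    // `unsigned_abs()` returns the magnitude as the unsigned counterpart.
    assert_eq!(i8::MIN.unsigned_abs(), 128u8);
    assert_eq!((-5i32).unsigned_abs(), 5u32);
    assert_eq!(i64::MIN.unsigned_abs(), 1u64 << 63);
}
```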

I have gone ahead and used this in a couple of locations throughout the repository.

When `capture_disjoint_fields` is not enabled, checking whether the root variable binding is mutable would suffice.

However, with the feature enabled, the captured place might be mutable because it dereferences a mutable reference.

This PR computes the mutability of each capture after capture analysis in rustc_typeck. We store this in `ty::CapturedPlace` and then use `ty::CapturedPlace::mutability` in mir_build and borrow_check.
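A simplified model of how such a computation can work. `Mutability`, `DerefTy` and `capture_mutability` are hypothetical names, not the rustc implementation. The sketch starts from the root binding's mutability and then looks at every dereference along the captured path. Raw-pointer derefs are left out because, per the precision restriction above, they are never part of a captured place.

```rust
#[derive(Debug, Clone, Copy, PartialEq, Eq)]
enum Mutability {
    Not,
    Mut,
}

/// The pointer types a captured place may dereference in this model.
#[derive(Debug, Clone, Copy, PartialEq, Eq)]
enum DerefTy {
    SharedRef,
    MutRef,
    BoxPtr,
}

/// Hypothetical model: start from the root binding's mutability, then
/// account for each dereference along the captured path.
fn capture_mutability(root: Mutability, derefs: &[DerefTy]) -> Mutability {
    let mut m = root;
    for d in derefs {
        match d {
            // Nothing behind a shared reference may be mutated.
            DerefTy::SharedRef => return Mutability::Not,
            // Going through `&mut` makes the place mutable even if the
            // binding itself was not declared `mut`.
            DerefTy::MutRef => m = Mutability::Mut,
            // A `Box` inherits the mutability of its owner.
            DerefTy::BoxPtr => {}
        }
    }
    m
}

fn main() {
    use DerefTy::*;
    use Mutability::*;
    // `let r = &mut s;` captured as `(*r).field`: mutable through `&mut`.
    assert_eq!(capture_mutability(Not, &[MutRef]), Mut);
    // Anything behind `&` is immutable, even through a later `&mut`.
    assert_eq!(capture_mutability(Mut, &[SharedRef, MutRef]), Not);
    // No derefs or only `Box` derefs: falls back to the root binding.
    assert_eq!(capture_mutability(Mut, &[]), Mut);
    assert_eq!(capture_mutability(Not, &[BoxPtr]), Not);
}
```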

Refactor a few more types to `rustc_type_ir`

In the continuation of #79169, ~~blocked on that PR~~.

This PR:

- moves `IntVarValue`, `FloatVarValue`, `InferTy` (and friends) and `Variance`

- creates the `IntTy`, `UintTy` and `FloatTy` enums in `rustc_type_ir`, based on their `ast` and `chalk_ir` equivalents, and uses them for types in the rest of the compiler.

~~I will split up that commit to make this easier to review and to have a better commit history.~~

EDIT: done, I split the PR in commits of 200-ish lines each

r? `@nikomatsakis` cc `@jackh726`

Enforce that query results implement Debug

Currently, we require that query keys implement `Debug`, but we do not do the same for query values. This can make incremental compilation bugs difficult to debug - there isn't a good place to print out the result loaded from disk.

This PR adds `Debug` bounds to several query-related functions, allowing us to debug-print the query value when an 'unstable fingerprint' error occurs. This required adding `#[derive(Debug)]` to a fairly large number of types - hopefully, this doesn't have much of an impact on compiler bootstrapping times.
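A minimal sketch of why the bound helps. `fingerprint` and `verify_fingerprint` are hypothetical, and the 64-bit `DefaultHasher` stands in for rustc's 128-bit stable hashing. Without `V: Debug`, the error path has no way to show which value caused the mismatch.

```rust
use std::collections::hash_map::DefaultHasher;
use std::fmt::Debug;
use std::hash::{Hash, Hasher};

/// Stand-in for a query result fingerprint.
fn fingerprint<V: Hash>(value: &V) -> u64 {
    let mut hasher = DefaultHasher::new();
    value.hash(&mut hasher);
    hasher.finish()
}

/// Hypothetical check: compares a freshly computed result against the
/// fingerprint recorded in the previous session. The `Debug` bound is what
/// lets the error message include the offending value.
fn verify_fingerprint<V: Hash + Debug>(query: &str, value: &V, expected: u64) -> Result<(), String> {
    if fingerprint(value) == expected {
        Ok(())
    } else {
        Err(format!("unstable fingerprint for {query}: result = {value:?}"))
    }
}

fn main() {
    let value = vec![1u32, 2, 3];
    let recorded = fingerprint(&value);
    assert!(verify_fingerprint("type_of", &value, recorded).is_ok());

    let err = verify_fingerprint("type_of", &vec![1u32, 2, 4], recorded).unwrap_err();
    assert!(err.contains("[1, 2, 4]"));
}
```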

Prevent query cycles in the MIR inliner

r? `@eddyb` `@wesleywiser`

cc `@rust-lang/wg-mir-opt`

The general design is that we have a new query that is run on the `validated_mir` instead of on the `optimized_mir`. That query is forced before going into the optimization pipeline, so as to not try to read from a stolen MIR.

The query should not be cached cross-crate, as you should never call it for items from other crates. By its very design, calls into other crates can never cause query cycles.

This is a pessimistic approach to inlining, since we strictly have more calls in the `validated_mir` than we have in `optimized_mir`, but that's not a problem imo.
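The pessimistic rule can be sketched as a reachability check over the local call graph. This is an illustrative model only: `CallGraph`, `reaches` and `may_inline` are hypothetical names, and calls into other crates are simply absent from the graph because, as noted above, they cannot form cycles.

```rust
use std::collections::{HashMap, HashSet};

/// Hypothetical local call graph: function id -> ids of local functions it calls.
type CallGraph = HashMap<u32, Vec<u32>>;

/// Returns true if `target` is reachable from `start` through local calls.
fn reaches(graph: &CallGraph, start: u32, target: u32) -> bool {
    let mut stack = vec![start];
    let mut seen = HashSet::new();
    while let Some(f) = stack.pop() {
        if f == target {
            return true;
        }
        if seen.insert(f) {
            if let Some(callees) = graph.get(&f) {
                stack.extend(callees.iter().copied());
            }
        }
    }
    false
}

/// Refuse to inline `callee` into `caller` if the callee could call back
/// into the caller, which would need the caller's MIR while it is being optimized.
fn may_inline(graph: &CallGraph, caller: u32, callee: u32) -> bool {
    !reaches(graph, callee, caller)
}

fn main() {
    // 1 -> 2 -> 3 -> 1 forms a cycle; 4 is a leaf.
    let graph: CallGraph = HashMap::from([
        (1, vec![2, 4]),
        (2, vec![3]),
        (3, vec![1]),
        (4, vec![]),
    ]);
    assert!(!may_inline(&graph, 1, 2)); // 2 reaches 1 via 3
    assert!(may_inline(&graph, 1, 4)); // leaf: safe to inline
}
```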

Various ABI refactorings

This includes changes to the Rust ABI and various refactorings that will hopefully make it easier to use the ABI handling infrastructure of rustc in cg_clif. There are several refactorings that I haven't done. I am opening this draft PR to check that I haven't broken any non-x86_64 architectures.

r? `@ghost`

Due to macro expansion, it's possible to end up with two distinct `ExpnId`s that have the same `ExpnData` contents. This violates the contract of `HashStable`, since two unequal `ExpnId`s will end up with equal `Fingerprint`s.

This commit adds a `disambiguator` field to `ExpnData`, which is used to force two otherwise-equivalent `ExpnData`s to be distinct.
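A minimal sketch of the disambiguator idea, using a hypothetical two-field `ExpnData` and a hypothetical `Disambiguators` counter keyed on those fields; the real `ExpnData` has more fields and the real implementation may key and assign the counter differently.

```rust
use std::collections::HashMap;

/// Hypothetical, minimal `ExpnData`: the contents that may coincide,
/// plus the counter that tells otherwise-equal entries apart.
#[derive(Debug, Clone, PartialEq, Eq, Hash)]
struct ExpnData {
    macro_name: String,
    call_site: u32,
    disambiguator: u32,
}

/// Hands out disambiguators so that otherwise-identical `ExpnData`s
/// (and therefore their stable hashes) become distinct.
#[derive(Default)]
struct Disambiguators {
    counts: HashMap<(String, u32), u32>,
}

impl Disambiguators {
    fn make(&mut self, macro_name: &str, call_site: u32) -> ExpnData {
        let n = self.counts.entry((macro_name.to_string(), call_site)).or_insert(0);
        let disambiguator = *n;
        *n += 1;
        ExpnData { macro_name: macro_name.to_string(), call_site, disambiguator }
    }
}

fn main() {
    let mut d = Disambiguators::default();
    let a = d.make("my_macro", 10);
    let b = d.make("my_macro", 10); // same contents as `a` apart from the counter
    assert_ne!(a, b);
    assert_eq!((a.disambiguator, b.disambiguator), (0, 1));
}
```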

`Abi::ScalarPair` is only ever used for types that don't have a stable layout anyway, so this doesn't break any FFI. It does, however, reduce the amount of special casing on the ABI outside of the code responsible for ABI-specific adjustments to the pass mode.

Improve diagnostics when closure doesn't meet trait bound

Improves the diagnostics when a closure doesn't meet a trait bound by modifying `TypeckResults::closure_kind_origins` so that `hir::Place` is used instead of `Symbol`. Using `hir::Place` to describe which capture influenced the decision of which trait a closure satisfies (Fn/FnMut/FnOnce, Copy) allows us to show a precise path in the diagnostics when the `capture_disjoint_fields` feature is enabled.

Closes rust-lang/project-rfc-2229/issues/21

r? `@nikomatsakis`

Remove DepKind::CrateMetadata and pre-allocation of DepNodes

Remove much of the special-case handling around crate metadata dependency tracking by replacing `DepKind::CrateMetadata` and the pre-allocation of corresponding `DepNodes` with on-demand invocation of the `crate_hash` query.

Properly handle `SyntaxContext` of dummy spans in incr comp

Fixes #80336

Due to macro expansion, we may end up with spans with an invalid location and a non-root `SyntaxContext`. This commit preserves the `SyntaxContext` of such spans in the incremental cache, and ensures that we always hash the `SyntaxContext` when computing the `Fingerprint` of a `Span`.

Previously, we would discard the `SyntaxContext` during serialization to the incremental cache, causing the span's `Fingerprint` to change across compilation sessions.
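A minimal sketch of the hashing rule. The `Span` struct with a `valid_location` flag and the `span_fingerprint` function are hypothetical simplifications of rustc's span hashing. The point is that the syntax context is hashed in both branches, so two dummy spans from different expansions no longer collide.

```rust
use std::collections::hash_map::DefaultHasher;
use std::hash::{Hash, Hasher};

/// Hypothetical span: byte offsets plus a syntax context id (0 = root).
#[derive(Debug, Clone, Copy)]
struct Span {
    lo: u32,
    hi: u32,
    ctxt: u32,
    valid_location: bool,
}

/// Tags for the two cases, so their hash inputs can't be confused.
const TAG_INVALID: u8 = 0;
const TAG_VALID: u8 = 1;

fn span_fingerprint(span: &Span) -> u64 {
    let mut h = DefaultHasher::new();
    if span.valid_location {
        TAG_VALID.hash(&mut h);
        span.lo.hash(&mut h);
        span.hi.hash(&mut h);
    } else {
        TAG_INVALID.hash(&mut h);
    }
    // Hash the context in *both* branches instead of dropping it
    // for spans without a valid location.
    span.ctxt.hash(&mut h);
    h.finish()
}

fn main() {
    let dummy_root = Span { lo: 0, hi: 0, ctxt: 0, valid_location: false };
    let dummy_macro = Span { ctxt: 7, ..dummy_root };
    assert_ne!(span_fingerprint(&dummy_root), span_fingerprint(&dummy_macro));
}
```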

Rework diagnostics for wrong number of generic args (fixes #66228 and #71924)

This PR reworks the `wrong number of {} arguments` message, so that it provides more details and contextual hints.

Separate out a `hir::Impl` struct

This makes it possible to pass the `Impl` directly to functions, instead of having to pass each of the many fields one at a time. It also simplifies matches in many cases.

See `rustc_save_analysis::dump_visitor::process_impl` or `rustdoc::clean::clean_impl` for a good example of how this makes `impl`s easier to work with.

r? `@petrochenkov` maybe?

Turn type inhabitedness into a query to fix `exhaustive_patterns` perf

We measured in https://github.com/rust-lang/rust/pull/79394 that enabling the [`exhaustive_patterns` feature](https://github.com/rust-lang/rust/issues/51085) causes significant perf degradation. It was conjectured that the culprit is type inhabitedness checking, and [I hypothesized](https://github.com/rust-lang/rust/pull/79394#issuecomment-733861149) that turning this computation into a query would solve most of the problem.

This PR turns `tcx.is_ty_uninhabited_from` into a query, and I measured a 25% perf gain on the benchmark that stress-tests `exhaustive_patterns`. This more than compensates for the 30% perf hit I measured [when creating it](https://github.com/rust-lang/rustc-perf/pull/801). We'll have to measure enabling the feature again, but I suspect this fixes the perf regression entirely.

I'd like a perf run on this PR obviously.

I made small atomic commits to help reviewing. The first one is just me discovering the "revisions" feature of the testing framework.

I believe there's a push to move things out of `rustc_middle` because it's huge. I guess `inhabitedness/mod.rs` could be moved out, but it's quite small. `DefIdForest` might be movable somewhere too. I don't know what the policy is for that.

Ping `@camelid` since you were interested in following along

`@rustbot` modify labels: +A-exhaustiveness-checking

Since `DefIdForest` contains 0 or 1 elements the large majority of the time, by allocating only in the >1 case we avoid almost all allocations, compared to `Arc<SmallVec<[DefId;1]>>`. This shaves off 0.2% on the benchmark that stresses uninhabitedness checking.
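A sketch of a representation that only allocates in the >1 case. `DefId` is a `u32` stand-in, and `from_roots`/`contains` are hypothetical helpers; in particular, the real forest checks whether an id is a *descendant* of a root, whereas this sketch only checks membership.

```rust
use std::sync::Arc;

type DefId = u32; // stand-in for rustc's `DefId`

/// Hypothetical forest representation: no heap allocation for the
/// common 0- and 1-root cases.
#[derive(Debug, Clone, PartialEq, Eq)]
enum DefIdForest {
    Empty,
    Single(DefId),
    Multiple(Arc<[DefId]>),
}

impl DefIdForest {
    fn from_roots(mut roots: Vec<DefId>) -> Self {
        roots.sort_unstable();
        roots.dedup();
        match roots.len() {
            0 => DefIdForest::Empty,
            1 => DefIdForest::Single(roots[0]),
            // Only this case allocates a shared slice.
            _ => DefIdForest::Multiple(roots.into()),
        }
    }

    /// Membership only; the real forest also accepts descendants of a root.
    fn contains(&self, id: DefId) -> bool {
        match self {
            DefIdForest::Empty => false,
            DefIdForest::Single(root) => *root == id,
            DefIdForest::Multiple(roots) => roots.contains(&id),
        }
    }
}

fn main() {
    assert_eq!(DefIdForest::from_roots(vec![]), DefIdForest::Empty);
    assert_eq!(DefIdForest::from_roots(vec![5, 5]), DefIdForest::Single(5));
    let many = DefIdForest::from_roots(vec![3, 1, 2]);
    assert!(matches!(many, DefIdForest::Multiple(_)));
    assert!(many.contains(2) && !many.contains(4));
}
```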

Make CTFE able to check for UB...

... by not doing any optimizations on the `const fn` MIR used in CTFE. This means we duplicate all `const fn`'s MIR now, once for CTFE, once for runtime. This PR is for checking the perf effect, so we have some data when talking about https://github.com/rust-lang/const-eval/blob/master/rfcs/0000-const-ub.md

To do this, we now have two queries for obtaining MIR: `optimized_mir` and `mir_for_ctfe`. It is now illegal to invoke `optimized_mir` to obtain the MIR of a const/static item's initializer, an array length, an inline const expression or an enum discriminant initializer. For `const fn`, both `optimized_mir` and `mir_for_ctfe` work, the former returning the MIR that LLVM should use if the function is called at runtime. Similarly, it is illegal to invoke `mir_for_ctfe` on regular functions.

This is all checked via appropriate assertions and I don't think it is easy to get wrong, as there should be no `mir_for_ctfe` calls outside the const evaluator or metadata encoding. Almost all rustc devs should keep using `optimized_mir` (or `instance_mir` for that matter).
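The legality rules above can be summarized as two functions guarded by assertions. This is only an illustrative model: `BodyKind`, `MirFlavor` and these free functions are hypothetical and merely share their names with the real queries.

```rust
/// Hypothetical classification of MIR bodies.
#[derive(Debug, Clone, Copy, PartialEq, Eq)]
enum BodyKind {
    /// const/static initializers, array lengths, inline consts, discriminants
    ConstContext,
    ConstFn,
    Fn,
}

#[derive(Debug, PartialEq, Eq)]
enum MirFlavor {
    Optimized,
    Unoptimized,
}

/// Runtime MIR: illegal for pure const contexts.
fn optimized_mir(kind: BodyKind) -> MirFlavor {
    assert_ne!(kind, BodyKind::ConstContext, "use `mir_for_ctfe` for const contexts");
    MirFlavor::Optimized
}

/// CTFE MIR: left unoptimized so UB can be detected; illegal for plain fns.
fn mir_for_ctfe(kind: BodyKind) -> MirFlavor {
    assert_ne!(kind, BodyKind::Fn, "regular functions are never const-evaluated");
    MirFlavor::Unoptimized
}

fn main() {
    // A `const fn` has both bodies.
    assert_eq!(optimized_mir(BodyKind::ConstFn), MirFlavor::Optimized);
    assert_eq!(mir_for_ctfe(BodyKind::ConstFn), MirFlavor::Unoptimized);
    // Pure const contexts only have the CTFE body.
    assert_eq!(mir_for_ctfe(BodyKind::ConstContext), MirFlavor::Unoptimized);
}
```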

Serialize incr comp structures to file via fixed-size buffer

Reduce a large memory spike that happens during serialization by writing the incr comp structures to file by way of a fixed-size buffer, rather than an unbounded vector.

Effort was made to keep the instruction count close to that of the previous implementation. However, buffered writing to a file inherently has more overhead than writing to a vector, because each write may result in a handleable error. To reduce this overhead, arrangements are made so that each LEB128-encoded integer can be written to the buffer with only one capacity and error check. Higher-level optimizations, in which entire composite structures can be written with one capacity and error check, are possible but would require much more work.

The performance is mostly on par with the previous implementation, with small to moderate instruction count regressions. The memory reduction is significant, however, so it seems like a worthwhile trade-off.
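A sketch of the "one capacity and error check per LEB128 integer" arrangement. `FileEncoder` here is a hypothetical, simplified type (the buffer size is an arbitrary choice) and is written against any `io::Write` so it can be exercised with a `Vec<u8>`. Since a `u64` needs at most ceil(64 / 7) = 10 LEB128 bytes, reserving 10 bytes up front means the byte loop never needs to flush or handle an error.

```rust
use std::io::{self, Write};

const BUF_SIZE: usize = 8 * 1024;
/// A `u64` needs at most ceil(64 / 7) = 10 LEB128 bytes.
const MAX_LEB128_LEN: usize = 10;

/// Hypothetical encoder that writes through a fixed-size buffer
/// instead of growing an unbounded `Vec`.
struct FileEncoder<W: Write> {
    buf: Box<[u8]>,
    len: usize,
    out: W,
}

impl<W: Write> FileEncoder<W> {
    fn new(out: W) -> Self {
        FileEncoder { buf: vec![0; BUF_SIZE].into_boxed_slice(), len: 0, out }
    }

    fn flush(&mut self) -> io::Result<()> {
        self.out.write_all(&self.buf[..self.len])?;
        self.len = 0;
        Ok(())
    }

    /// One capacity check (and thus one possible I/O error) per integer:
    /// make room for the worst case, then emit bytes with no further flushes.
    fn emit_u64(&mut self, mut value: u64) -> io::Result<()> {
        if self.buf.len() - self.len < MAX_LEB128_LEN {
            self.flush()?;
        }
        loop {
            let byte = (value & 0x7f) as u8;
            value >>= 7;
            if value == 0 {
                self.buf[self.len] = byte;
                self.len += 1;
                return Ok(());
            }
            self.buf[self.len] = byte | 0x80; // continuation bit
            self.len += 1;
        }
    }

    fn finish(mut self) -> io::Result<W> {
        self.flush()?;
        Ok(self.out)
    }
}

fn main() -> io::Result<()> {
    let mut enc = FileEncoder::new(Vec::new());
    enc.emit_u64(0)?;
    enc.emit_u64(300)?;
    let bytes = enc.finish()?;
    assert_eq!(bytes, [0x00, 0xac, 0x02]);
    Ok(())
}
```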

Access query (DepKind) metadata through fields

This refactors the access to query definition metadata (attributes such as eval always, anon, has_params) and loading/forcing functions to generate a number of structs, instead of matching on the DepKind enum. This makes access to the fields cheaper to compile. Using a struct means that finding the metadata for a given query is just an offset away; previously the match may have been compiled to a jump table but likely not completely inlined as we expect here.
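A minimal sketch of the "just an offset away" layout: a static table of plain structs indexed by the `DepKind` discriminant. The query names (`QueryA` and so on) and their flag values are made up for illustration; only the field names echo the attributes mentioned above.

```rust
/// Hypothetical subset of query kinds.
#[derive(Debug, Clone, Copy, PartialEq, Eq)]
#[repr(usize)]
enum DepKind {
    QueryA = 0,
    QueryB = 1,
    QueryC = 2,
}

/// Per-query metadata stored as plain data rather than computed by a `match`.
#[derive(Debug)]
struct DepKindStruct {
    eval_always: bool,
    anon: bool,
    has_params: bool,
}

/// One entry per `DepKind`, in discriminant order (values are illustrative).
static DEP_KINDS: [DepKindStruct; 3] = [
    DepKindStruct { eval_always: false, anon: false, has_params: true },  // QueryA
    DepKindStruct { eval_always: false, anon: true, has_params: true },   // QueryB
    DepKindStruct { eval_always: true, anon: false, has_params: false },  // QueryC
];

impl DepKind {
    /// Looking up metadata is a single indexed load into a dense table.
    fn info(self) -> &'static DepKindStruct {
        &DEP_KINDS[self as usize]
    }
}

fn main() {
    assert!(DepKind::QueryC.info().eval_always);
    assert!(!DepKind::QueryA.info().eval_always);
    assert!(DepKind::QueryB.info().anon && DepKind::QueryB.info().has_params);
}
```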

A previous attempt in #78314 explored a similar strategy using trait objects, but it proved less effective, likely due to the higher overhead of forced dynamic calls and poorer cache utilization (with this PR, all metadata is fairly densely packed).