Mirror of https://github.com/rust-lang/rust.git, synced 2025-02-19 18:34:08 +00:00

Auto merge of #43506 - michaelwoerister:async-llvm, r=alexcrichton

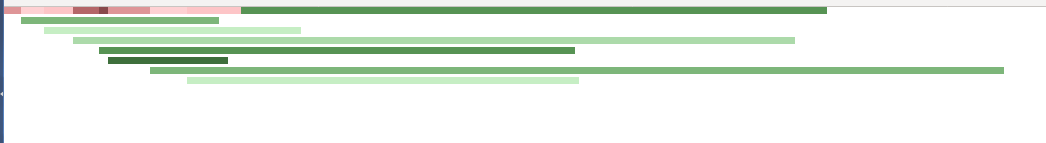

Run translation and LLVM in parallel when compiling with multiple CGUs

This is still a work in progress, but the bulk of the implementation is done, so I thought it would be good to get it in front of more eyes.

This PR makes the compiler start running LLVM while translation is still in progress, allowing for more parallelism towards the end of the compilation pipeline. It also lets the main thread switch between translation and running LLVM, which reduces peak memory usage because not all LLVM modules have to be kept in memory until linking. This is especially useful for incremental compilation, but it works just as well when compiling with `-Ccodegen-units=N`.

To help with tuning and debugging the work scheduler, the PR adds the `-Ztrans-time-graph` flag, which writes out HTML files showing how work packages were scheduled (red is translation, green is LLVM).

One side effect is that `-Ztime-passes` may report misleading numbers, because trans and LLVM are no longer strictly separated. I plan to add some special handling there that tries to produce useful output.

One open question is how to decide when the trans thread should switch to intermediate LLVM processing.

TODO:
- [x] Restore `-Z time-passes` output for LLVM.
- [x] Update documentation, esp. for work package scheduling.
- [x] Tune the scheduling algorithm.

cc @alexcrichton @rust-lang/compiler
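The description above combines two ideas. First, translated modules are handed to LLVM workers while translation continues. Second, the main thread itself switches to LLVM work when too much output is pending, which bounds how many modules are alive at once. The real scheduler lives in `back::write`, whose diff is not shown on this page. The sketch below is a toy model of that idea only, under stated assumptions: `Module`, `optimize`, `compile` and the `max_queued` threshold are all hypothetical, and "translation" and "LLVM" are stand-in computations.

```rust
use std::collections::VecDeque;
use std::sync::{Arc, Condvar, Mutex};
use std::thread;

/// Hypothetical stand-in for one translated codegen unit (CGU).
pub struct Module {
    pub name: String,
    pub ir: Vec<u32>,
}

/// Hypothetical stand-in for "running LLVM" on a module: fold its IR into a checksum.
fn optimize(m: &Module) -> (String, u64) {
    (m.name.clone(), m.ir.iter().map(|&x| x as u64).sum())
}

struct Queue {
    items: VecDeque<Module>,
    done: bool, // set once the main thread has translated every CGU
}

/// Translate `cgus` on the calling thread while `workers` threads run "LLVM".
/// If more than `max_queued` modules are waiting, the main thread optimizes one
/// itself instead of translating the next CGU.
pub fn compile(cgus: Vec<Vec<u32>>, workers: usize, max_queued: usize) -> Vec<(String, u64)> {
    let shared = Arc::new((
        Mutex::new(Queue { items: VecDeque::new(), done: false }),
        Condvar::new(),
    ));
    let results = Arc::new(Mutex::new(Vec::new()));

    let handles: Vec<_> = (0..workers)
        .map(|_| {
            let shared = Arc::clone(&shared);
            let results = Arc::clone(&results);
            thread::spawn(move || loop {
                let (lock, cvar) = &*shared;
                let mut q = lock.lock().unwrap();
                while q.items.is_empty() && !q.done {
                    q = cvar.wait(q).unwrap();
                }
                let next = q.items.pop_front();
                drop(q); // release the lock before doing the expensive work
                match next {
                    Some(m) => {
                        let r = optimize(&m);
                        results.lock().unwrap().push(r);
                    }
                    None => return, // translation finished and queue drained
                }
            })
        })
        .collect();

    let (lock, cvar) = &*shared;
    for (i, ir) in cgus.into_iter().enumerate() {
        let module = Module { name: format!("cgu{}", i), ir }; // "translation"
        let mut q = lock.lock().unwrap();
        q.items.push_back(module);
        cvar.notify_one();
        if q.items.len() > max_queued {
            // Too much pending work: do LLVM work on this thread instead.
            let m = q.items.pop_front().unwrap();
            drop(q);
            let r = optimize(&m);
            results.lock().unwrap().push(r);
        }
    }
    lock.lock().unwrap().done = true;
    cvar.notify_all();

    // The main thread also helps drain whatever is still queued.
    loop {
        let next = lock.lock().unwrap().items.pop_front();
        match next {
            Some(m) => {
                let r = optimize(&m);
                results.lock().unwrap().push(r);
            }
            None => break,
        }
    }
    for h in handles {
        h.join().unwrap();
    }
    let mut out = std::mem::take(&mut *results.lock().unwrap());
    out.sort();
    out
}
```

Because the main thread also drains the queue at the end, the sketch still finishes with `workers == 0`. In that case it degrades to fully sequential compilation.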

commit e772c28d2e

src/Cargo.lock (generated, 2 changed lines)

```
@@ -1518,11 +1518,11 @@ dependencies = [
name = "rustc_trans"
version = "0.0.0"
dependencies = [
"crossbeam 0.2.10 (registry+https://github.com/rust-lang/crates.io-index)",
"flate2 0.2.19 (registry+https://github.com/rust-lang/crates.io-index)",
"gcc 0.3.51 (registry+https://github.com/rust-lang/crates.io-index)",
"jobserver 0.1.6 (registry+https://github.com/rust-lang/crates.io-index)",
"log 0.3.8 (registry+https://github.com/rust-lang/crates.io-index)",
"num_cpus 1.6.2 (registry+https://github.com/rust-lang/crates.io-index)",
"owning_ref 0.3.3 (registry+https://github.com/rust-lang/crates.io-index)",
"rustc 0.0.0",
"rustc-demangle 0.1.4 (registry+https://github.com/rust-lang/crates.io-index)",
```

```
@@ -50,7 +50,7 @@ pub use self::NativeLibraryKind::*;

// lonely orphan structs and enums looking for a better home

#[derive(Clone, Debug)]
#[derive(Clone, Debug, Copy)]
pub struct LinkMeta {
pub crate_hash: Svh,
}
```

```
@@ -161,15 +161,13 @@ pub struct ExternCrate {
}

pub struct EncodedMetadata {
pub raw_data: Vec<u8>,
pub hashes: EncodedMetadataHashes,
pub raw_data: Vec<u8>
}

impl EncodedMetadata {
pub fn new() -> EncodedMetadata {
EncodedMetadata {
raw_data: Vec::new(),
hashes: EncodedMetadataHashes::new(),
}
}
}
```

```
@@ -294,7 +292,7 @@ pub trait CrateStore {
tcx: TyCtxt<'a, 'tcx, 'tcx>,
link_meta: &LinkMeta,
reachable: &NodeSet)
-> EncodedMetadata;
-> (EncodedMetadata, EncodedMetadataHashes);
fn metadata_encoding_version(&self) -> &[u8];
}

```

```
@@ -424,7 +422,7 @@ impl CrateStore for DummyCrateStore {
tcx: TyCtxt<'a, 'tcx, 'tcx>,
link_meta: &LinkMeta,
reachable: &NodeSet)
-> EncodedMetadata {
-> (EncodedMetadata, EncodedMetadataHashes) {
bug!("encode_metadata")
}
fn metadata_encoding_version(&self) -> &[u8] { bug!("metadata_encoding_version") }
```

```
@@ -1059,6 +1059,8 @@ options! {DebuggingOptions, DebuggingSetter, basic_debugging_options,
"choose which RELRO level to use"),
nll: bool = (false, parse_bool, [UNTRACKED],
"run the non-lexical lifetimes MIR pass"),
trans_time_graph: bool = (false, parse_bool, [UNTRACKED],
"generate a graphical HTML report of time spent in trans and LLVM"),
}

pub fn default_lib_output() -> CrateType {
```
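The new `trans_time_graph` option only switches recording on. According to the PR text, the result is an HTML report in which red marks translation and green marks LLVM work. The following is a hypothetical sketch of such a recorder, not the `time_graph` module's actual API: `TimeGraphSketch`, `WorkKind`, `record` and `to_html` are illustrative names. Each thread gets one row, and each span becomes a colored `<div>` positioned by percentage of the total run time.

```rust
use std::fmt::Write;
use std::sync::{Arc, Mutex};
use std::time::{Duration, Instant};

/// Which kind of work a span represents; colors follow the PR text.
#[derive(Clone, Copy, Debug, PartialEq)]
pub enum WorkKind {
    Translation, // drawn red
    Llvm,        // drawn green
}

struct Span {
    timeline: usize, // one row per thread
    kind: WorkKind,
    start: Duration, // offsets from the graph's creation time
    end: Duration,
}

/// Hypothetical recorder; clones share the same span list across threads.
#[derive(Clone)]
pub struct TimeGraphSketch {
    origin: Instant,
    spans: Arc<Mutex<Vec<Span>>>,
}

impl TimeGraphSketch {
    pub fn new() -> Self {
        TimeGraphSketch { origin: Instant::now(), spans: Arc::new(Mutex::new(Vec::new())) }
    }

    /// Run `f` and record how long it took on row `timeline`.
    pub fn record<T>(&self, timeline: usize, kind: WorkKind, f: impl FnOnce() -> T) -> T {
        let start = self.origin.elapsed();
        let result = f();
        let end = self.origin.elapsed();
        self.spans.lock().unwrap().push(Span { timeline, kind, start, end });
        result
    }

    /// Render every span as an absolutely positioned, colored <div>.
    pub fn to_html(&self) -> String {
        let spans = self.spans.lock().unwrap();
        let total = spans
            .iter()
            .map(|s| s.end)
            .max()
            .unwrap_or_default()
            .as_secs_f64()
            .max(1e-9); // avoid dividing by zero for an empty or instant graph
        let mut html = String::from("<html><body>\n");
        for s in spans.iter() {
            let color = match s.kind {
                WorkKind::Translation => "red",
                WorkKind::Llvm => "green",
            };
            let left = 100.0 * s.start.as_secs_f64() / total;
            let width = 100.0 * (s.end - s.start).as_secs_f64() / total;
            writeln!(
                html,
                "<div style=\"position:absolute; top:{}px; left:{:.2}%; width:{:.2}%; height:20px; background:{}\"></div>",
                s.timeline * 25, left, width, color
            )
            .unwrap();
        }
        html.push_str("</body></html>\n");
        html
    }
}
```

Cloning the recorder before handing it to another thread mirrors the `time_graph.clone()` argument passed to `write::start_async_translation` in `trans_crate`.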

```
@@ -1498,6 +1500,23 @@ pub fn build_session_options_and_crate_config(matches: &getopts::Matches)
early_error(error_format, "Value for codegen units must be a positive nonzero integer");
}

// It's possible that we have `codegen_units > 1` but only one item in
// `trans.modules`. We could theoretically proceed and do LTO in that
// case, but it would be confusing to have the validity of
// `-Z lto -C codegen-units=2` depend on details of the crate being
// compiled, so we complain regardless.
if cg.lto && cg.codegen_units > 1 {
// This case is impossible to handle because LTO expects to be able
// to combine the entire crate and all its dependencies into a
// single compilation unit, but each codegen unit is in a separate
// LLVM context, so they can't easily be combined.
early_error(error_format, "can't perform LTO when using multiple codegen units");
}

if cg.lto && debugging_opts.incremental.is_some() {
early_error(error_format, "can't perform LTO when compiling incrementally");
}

let mut prints = Vec::<PrintRequest>::new();
if cg.target_cpu.as_ref().map_or(false, |s| s == "help") {
prints.push(PrintRequest::TargetCPUs);
```
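The checks above reject two combinations: LTO with more than one codegen unit, and LTO with incremental compilation. Below is a testable sketch under a few assumptions. A plain `LtoOpts` struct (hypothetical) stands in for the real `cg` and `debugging_opts` values. The error messages are returned instead of passed to `early_error`, which aborts the process. The zero-units condition is also assumed, since the diff shows only its message.

```rust
/// Hypothetical subset of the options validated above.
pub struct LtoOpts {
    pub lto: bool,
    pub codegen_units: usize,
    pub incremental: Option<String>, // assumed: incremental directory, if any
}

/// Return the first applicable error message, in the same order as the checks above.
pub fn validate(opts: &LtoOpts) -> Result<(), &'static str> {
    if opts.codegen_units == 0 {
        return Err("Value for codegen units must be a positive nonzero integer");
    }
    if opts.lto && opts.codegen_units > 1 {
        // Each codegen unit lives in its own LLVM context, so they can't be merged for LTO.
        return Err("can't perform LTO when using multiple codegen units");
    }
    if opts.lto && opts.incremental.is_some() {
        return Err("can't perform LTO when compiling incrementally");
    }
    Ok(())
}
```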

```
@@ -57,6 +57,32 @@ pub fn time<T, F>(do_it: bool, what: &str, f: F) -> T where
let rv = f();
let dur = start.elapsed();

print_time_passes_entry_internal(what, dur);

TIME_DEPTH.with(|slot| slot.set(old));

rv
}

pub fn print_time_passes_entry(do_it: bool, what: &str, dur: Duration) {
if !do_it {
return
}

let old = TIME_DEPTH.with(|slot| {
let r = slot.get();
slot.set(r + 1);
r
});

print_time_passes_entry_internal(what, dur);

TIME_DEPTH.with(|slot| slot.set(old));
}

fn print_time_passes_entry_internal(what: &str, dur: Duration) {
let indentation = TIME_DEPTH.with(|slot| slot.get());

let mem_string = match get_resident() {
Some(n) => {
let mb = n as f64 / 1_000_000.0;
```

```
@@ -65,14 +91,10 @@ pub fn time<T, F>(do_it: bool, what: &str, f: F) -> T where
None => "".to_owned(),
};
println!("{}time: {}{}\t{}",
repeat(" ").take(old).collect::<String>(),
repeat(" ").take(indentation).collect::<String>(),
duration_to_secs_str(dur),
mem_string,
what);

TIME_DEPTH.with(|slot| slot.set(old));

rv
}

// Hack up our own formatting for the duration to make it easier for scripts
```
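The refactor moves the printing into `print_time_passes_entry_internal`, which reads the indentation from `TIME_DEPTH`. As a result, a duration measured outside a `time` closure can still be reported at the correct nesting depth through `print_time_passes_entry`. The simplified sketch below shows that idea. It assumes lines are collected into a `Vec<String>` instead of printed, omits the memory column, and uses illustrative names (`print_entry`, `entry_line`, `enter`).

```rust
use std::cell::Cell;
use std::time::{Duration, Instant};

thread_local!(static TIME_DEPTH: Cell<usize> = Cell::new(0));

// Shared formatter: indentation comes from the current nesting depth.
fn entry_line(what: &str, dur: Duration) -> String {
    let indentation = TIME_DEPTH.with(|slot| slot.get());
    format!("{}time: {:.3}\t{}", " ".repeat(indentation), dur.as_secs_f64(), what)
}

// Enter one nesting level and return the previous depth.
fn enter() -> usize {
    TIME_DEPTH.with(|slot| {
        let r = slot.get();
        slot.set(r + 1);
        r
    })
}

/// Time a closure; entries recorded inside `f` are indented one level deeper.
pub fn time<T>(log: &mut Vec<String>, what: &str, f: impl FnOnce(&mut Vec<String>) -> T) -> T {
    let old = enter();
    let start = Instant::now();
    let rv = f(log);
    log.push(entry_line(what, start.elapsed()));
    TIME_DEPTH.with(|slot| slot.set(old));
    rv
}

/// Report a duration measured elsewhere (e.g. on another thread) at the current depth.
pub fn print_entry(log: &mut Vec<String>, what: &str, dur: Duration) {
    let old = enter();
    log.push(entry_line(what, dur));
    TIME_DEPTH.with(|slot| slot.set(old));
}
```

With translation and LLVM interleaved, this is what lets LLVM time gathered from worker threads appear in `-Z time-passes` output next to the passes that ran on the main thread.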

```
@@ -15,8 +15,7 @@ use rustc_data_structures::stable_hasher::StableHasher;
use rustc_mir as mir;
use rustc::session::{Session, CompileResult};
use rustc::session::CompileIncomplete;
use rustc::session::config::{self, Input, OutputFilenames, OutputType,
OutputTypes};
use rustc::session::config::{self, Input, OutputFilenames, OutputType};
use rustc::session::search_paths::PathKind;
use rustc::lint;
use rustc::middle::{self, dependency_format, stability, reachable};
```

```
@@ -26,7 +25,6 @@ use rustc::ty::{self, TyCtxt, Resolutions, GlobalArenas};
use rustc::traits;
use rustc::util::common::{ErrorReported, time};
use rustc::util::nodemap::NodeSet;
use rustc::util::fs::rename_or_copy_remove;
use rustc_allocator as allocator;
use rustc_borrowck as borrowck;
use rustc_incremental::{self, IncrementalHashesMap};
```

```
@@ -208,7 +206,7 @@ pub fn compile_input(sess: &Session,
println!("Pre-trans");
tcx.print_debug_stats();
}
let trans = phase_4_translate_to_llvm(tcx, analysis, &incremental_hashes_map,
let trans = phase_4_translate_to_llvm(tcx, analysis, incremental_hashes_map,
&outputs);

if log_enabled!(::log::LogLevel::Info) {
```

```
@@ -231,7 +229,7 @@ pub fn compile_input(sess: &Session,
sess.code_stats.borrow().print_type_sizes();
}

let phase5_result = phase_5_run_llvm_passes(sess, &trans, &outputs);
let (phase5_result, trans) = phase_5_run_llvm_passes(sess, trans);

controller_entry_point!(after_llvm,
sess,
```

```
@@ -239,8 +237,6 @@ pub fn compile_input(sess: &Session,
phase5_result);
phase5_result?;

write::cleanup_llvm(&trans);

phase_6_link_output(sess, &trans, &outputs);

// Now that we won't touch anything in the incremental compilation directory
```

```
@@ -1055,9 +1051,9 @@ pub fn phase_3_run_analysis_passes<'tcx, F, R>(sess: &'tcx Session,
/// be discarded.
pub fn phase_4_translate_to_llvm<'a, 'tcx>(tcx: TyCtxt<'a, 'tcx, 'tcx>,
analysis: ty::CrateAnalysis,
incremental_hashes_map: &IncrementalHashesMap,
incremental_hashes_map: IncrementalHashesMap,
output_filenames: &OutputFilenames)
-> trans::CrateTranslation {
-> write::OngoingCrateTranslation {
let time_passes = tcx.sess.time_passes();

time(time_passes,
```

```
@@ -1067,63 +1063,27 @@ pub fn phase_4_translate_to_llvm<'a, 'tcx>(tcx: TyCtxt<'a, 'tcx, 'tcx>,
let translation =
time(time_passes,
"translation",
move || trans::trans_crate(tcx, analysis, &incremental_hashes_map, output_filenames));
move || trans::trans_crate(tcx, analysis, incremental_hashes_map, output_filenames));

time(time_passes,
"assert dep graph",
|| rustc_incremental::assert_dep_graph(tcx));

time(time_passes,
"serialize dep graph",
|| rustc_incremental::save_dep_graph(tcx,
&incremental_hashes_map,
&translation.metadata.hashes,
translation.link.crate_hash));
translation
}

/// Run LLVM itself, producing a bitcode file, assembly file or object file
/// as a side effect.
pub fn phase_5_run_llvm_passes(sess: &Session,
trans: &trans::CrateTranslation,
outputs: &OutputFilenames) -> CompileResult {
if sess.opts.cg.no_integrated_as ||
(sess.target.target.options.no_integrated_as &&
(outputs.outputs.contains_key(&OutputType::Object) ||
outputs.outputs.contains_key(&OutputType::Exe)))
{
let output_types = OutputTypes::new(&[(OutputType::Assembly, None)]);
time(sess.time_passes(),
"LLVM passes",
|| write::run_passes(sess, trans, &output_types, outputs));
trans: write::OngoingCrateTranslation)
-> (CompileResult, trans::CrateTranslation) {
let trans = trans.join(sess);

write::run_assembler(sess, outputs);

// HACK the linker expects the object file to be named foo.0.o but
// `run_assembler` produces an object named just foo.o. Rename it if we
// are going to build an executable
if sess.opts.output_types.contains_key(&OutputType::Exe) {
let f = outputs.path(OutputType::Object);
rename_or_copy_remove(&f,
f.with_file_name(format!("{}.0.o",
f.file_stem().unwrap().to_string_lossy()))).unwrap();
}

// Remove assembly source, unless --save-temps was specified
if !sess.opts.cg.save_temps {
fs::remove_file(&outputs.temp_path(OutputType::Assembly, None)).unwrap();
}
} else {
time(sess.time_passes(),
"LLVM passes",
|| write::run_passes(sess, trans, &sess.opts.output_types, outputs));
if sess.opts.debugging_opts.incremental_info {
write::dump_incremental_data(&trans);
}

time(sess.time_passes(),
"serialize work products",
move || rustc_incremental::save_work_products(sess));

sess.compile_status()
(sess.compile_status(), trans)
}

/// Run the linker on any artifacts that resulted from the LLVM run.
```
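After this change, phase 4 returns a `write::OngoingCrateTranslation` instead of a finished `trans::CrateTranslation`. Phase 5 calls `trans.join(sess)` to wait for the background work, then hands the finished translation back together with the `CompileResult`. The ownership pattern can be sketched with a hypothetical handle around a `JoinHandle`. `Ongoing`, `Finished`, `start` and `join` are illustrative names, and the real `join` also takes the session for error reporting, which is omitted here.

```rust
use std::thread::{self, JoinHandle};

/// Hypothetical finished result, standing in for `trans::CrateTranslation`.
#[derive(Debug, PartialEq)]
pub struct Finished {
    pub modules: Vec<String>,
}

/// Hypothetical in-progress handle, standing in for `OngoingCrateTranslation`.
pub struct Ongoing {
    handle: JoinHandle<Finished>,
}

/// Start background work and return immediately with a handle to it.
pub fn start(cgus: Vec<String>) -> Ongoing {
    let handle = thread::spawn(move || {
        // Stand-in for running LLVM on each codegen unit.
        let modules = cgus.into_iter().map(|n| format!("{}.o", n)).collect();
        Finished { modules }
    });
    Ongoing { handle }
}

impl Ongoing {
    /// Block until the work is done; consuming `self` means it can be joined only once.
    pub fn join(self) -> Result<Finished, String> {
        self.handle.join().map_err(|_| "LLVM worker panicked".to_string())
    }
}
```

Because `join` takes `self` by value, the type system guarantees that nothing can use the ongoing translation after it has been turned into the finished one. This matches the new signature of `phase_5_run_llvm_passes`, which takes `trans` by value.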

```
@@ -34,7 +34,7 @@ use super::file_format;
use super::work_product;

pub fn save_dep_graph<'a, 'tcx>(tcx: TyCtxt<'a, 'tcx, 'tcx>,
incremental_hashes_map: &IncrementalHashesMap,
incremental_hashes_map: IncrementalHashesMap,
metadata_hashes: &EncodedMetadataHashes,
svh: Svh) {
debug!("save_dep_graph()");
```

```
@@ -51,7 +51,7 @@ pub fn save_dep_graph<'a, 'tcx>(tcx: TyCtxt<'a, 'tcx, 'tcx>,
eprintln!("incremental: {} edges in dep-graph", query.graph.len_edges());
}

let mut hcx = HashContext::new(tcx, incremental_hashes_map);
let mut hcx = HashContext::new(tcx, &incremental_hashes_map);
let preds = Predecessors::new(&query, &mut hcx);
let mut current_metadata_hashes = FxHashMap();

```

```
@@ -15,7 +15,8 @@ use schema;
use rustc::ty::maps::QueryConfig;
use rustc::middle::cstore::{CrateStore, CrateSource, LibSource, DepKind,
NativeLibrary, MetadataLoader, LinkMeta,
LinkagePreference, LoadedMacro, EncodedMetadata};
LinkagePreference, LoadedMacro, EncodedMetadata,
EncodedMetadataHashes};
use rustc::hir::def;
use rustc::middle::lang_items;
use rustc::session::Session;
```

```
@@ -443,7 +444,7 @@ impl CrateStore for cstore::CStore {
tcx: TyCtxt<'a, 'tcx, 'tcx>,
link_meta: &LinkMeta,
reachable: &NodeSet)
-> EncodedMetadata
-> (EncodedMetadata, EncodedMetadataHashes)
{
encoder::encode_metadata(tcx, link_meta, reachable)
}
```

```
@@ -1638,7 +1638,7 @@ impl<'a, 'tcx, 'v> ItemLikeVisitor<'v> for ImplVisitor<'a, 'tcx> {
pub fn encode_metadata<'a, 'tcx>(tcx: TyCtxt<'a, 'tcx, 'tcx>,
link_meta: &LinkMeta,
exported_symbols: &NodeSet)
-> EncodedMetadata
-> (EncodedMetadata, EncodedMetadataHashes)
{
let mut cursor = Cursor::new(vec![]);
cursor.write_all(METADATA_HEADER).unwrap();
```

```
@@ -1681,10 +1681,7 @@ pub fn encode_metadata<'a, 'tcx>(tcx: TyCtxt<'a, 'tcx, 'tcx>,
result[header + 2] = (pos >> 8) as u8;
result[header + 3] = (pos >> 0) as u8;

EncodedMetadata {
raw_data: result,
hashes: metadata_hashes,
}
(EncodedMetadata { raw_data: result }, metadata_hashes)
}

pub fn get_repr_options<'a, 'tcx, 'gcx>(tcx: &TyCtxt<'a, 'tcx, 'gcx>, did: DefId) -> ReprOptions {
```
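`encode_metadata` now returns `(EncodedMetadata, EncodedMetadataHashes)` instead of storing the hashes inside `EncodedMetadata`. In `trans_crate`, the two halves then go separate ways: `metadata` is passed to `write::start_async_translation`, while `metadata_incr_hashes` is passed to `assert_and_save_dep_graph`. A toy sketch of that split, with hypothetical `Encoded`/`Hashes` types and an arbitrary byte encoding, shows that the raw bytes can move to another thread while the hashes stay where they are:

```rust
use std::thread;

/// Hypothetical stand-ins for `EncodedMetadata` and `EncodedMetadataHashes`.
pub struct Encoded {
    pub raw_data: Vec<u8>,
}
pub struct Hashes {
    pub entries: Vec<(u32, u64)>,
}

/// Toy encoder: big-endian id followed by big-endian hash, returned as two owned halves.
pub fn encode(items: &[(u32, u64)]) -> (Encoded, Hashes) {
    let raw_data = items
        .iter()
        .flat_map(|&(id, hash)| {
            let mut bytes = id.to_be_bytes().to_vec();
            bytes.extend_from_slice(&hash.to_be_bytes());
            bytes
        })
        .collect();
    (Encoded { raw_data }, Hashes { entries: items.to_vec() })
}

/// Move the bytes to a background thread; keep the hashes on the current one.
pub fn pipeline(items: &[(u32, u64)]) -> (usize, usize) {
    let (metadata, hashes) = encode(items);
    let llvm_side = thread::spawn(move || metadata.raw_data.len());
    let saved = hashes.entries.len(); // e.g. dep-graph bookkeeping
    (llvm_side.join().unwrap(), saved)
}
```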

```
@@ -10,7 +10,7 @@ crate-type = ["dylib"]
test = false

[dependencies]
crossbeam = "0.2"
num_cpus = "1.0"
flate2 = "0.2"
jobserver = "0.1.5"
log = "0.3"
```

```
@@ -37,11 +37,22 @@ use rustc::ich::{ATTR_PARTITION_REUSED, ATTR_PARTITION_TRANSLATED};
const MODULE: &'static str = "module";
const CFG: &'static str = "cfg";

#[derive(Debug, PartialEq)]
enum Disposition { Reused, Translated }
#[derive(Debug, PartialEq, Clone, Copy)]
pub enum Disposition { Reused, Translated }

impl ModuleTranslation {
pub fn disposition(&self) -> (String, Disposition) {
let disposition = match self.source {
ModuleSource::Preexisting(_) => Disposition::Reused,
ModuleSource::Translated(_) => Disposition::Translated,
};

(self.name.clone(), disposition)
}
}

pub(crate) fn assert_module_sources<'a, 'tcx>(tcx: TyCtxt<'a, 'tcx, 'tcx>,
modules: &[ModuleTranslation]) {
modules: &[(String, Disposition)]) {
let _ignore = tcx.dep_graph.in_ignore();

if tcx.sess.opts.incremental.is_none() {
```

```
@@ -56,7 +67,7 @@ pub(crate) fn assert_module_sources<'a, 'tcx>(tcx: TyCtxt<'a, 'tcx, 'tcx>,

struct AssertModuleSource<'a, 'tcx: 'a> {
tcx: TyCtxt<'a, 'tcx, 'tcx>,
modules: &'a [ModuleTranslation],
modules: &'a [(String, Disposition)],
}

impl<'a, 'tcx> AssertModuleSource<'a, 'tcx> {
```

```
@@ -75,15 +86,15 @@ impl<'a, 'tcx> AssertModuleSource<'a, 'tcx> {
}

let mname = self.field(attr, MODULE);
let mtrans = self.modules.iter().find(|mtrans| *mtrans.name == *mname.as_str());
let mtrans = self.modules.iter().find(|&&(ref name, _)| name == mname.as_str());
let mtrans = match mtrans {
Some(m) => m,
None => {
debug!("module name `{}` not found amongst:", mname);
for mtrans in self.modules {
for &(ref name, ref disposition) in self.modules {
debug!("module named `{}` with disposition {:?}",
mtrans.name,
self.disposition(mtrans));
name,
disposition);
}

self.tcx.sess.span_err(
```

```
@@ -93,7 +104,7 @@ impl<'a, 'tcx> AssertModuleSource<'a, 'tcx> {
}
};

let mtrans_disposition = self.disposition(mtrans);
let mtrans_disposition = mtrans.1;
if disposition != mtrans_disposition {
self.tcx.sess.span_err(
attr.span,
```
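`assert_module_sources` now works on plain `(String, Disposition)` pairs rather than on `ModuleTranslation` values. Because `Disposition` now derives `Clone` and `Copy`, the expected disposition can be read with `mtrans.1`. The sketch below shows the lookup-and-compare step as a standalone function. `check_module` and the exact error wording are hypothetical, since the original `span_err` messages are elided in these hunks.

```rust
#[derive(Debug, PartialEq, Clone, Copy)]
pub enum Disposition {
    Reused,
    Translated,
}

/// Find the module named `mname` and check that its disposition matches `expected`.
pub fn check_module(
    modules: &[(String, Disposition)],
    mname: &str,
    expected: Disposition,
) -> Result<(), String> {
    let found = modules.iter().find(|&&(ref name, _)| name == mname);
    // `Disposition` is `Copy`, so it can be copied out of the borrowed tuple.
    let &(_, actual) = match found {
        Some(m) => m,
        None => return Err(format!("no module named `{}`", mname)),
    };
    if actual != expected {
        return Err(format!(
            "expected module named `{}` to be {:?} but is {:?}",
            mname, expected, actual
        ));
    }
    Ok(())
}
```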

```
@@ -104,13 +115,6 @@ impl<'a, 'tcx> AssertModuleSource<'a, 'tcx> {
}
}

fn disposition(&self, mtrans: &ModuleTranslation) -> Disposition {
match mtrans.source {
ModuleSource::Preexisting(_) => Disposition::Reused,
ModuleSource::Translated(_) => Disposition::Translated,
}
}

fn field(&self, attr: &ast::Attribute, name: &str) -> ast::Name {
for item in attr.meta_item_list().unwrap_or_else(Vec::new) {
if item.check_name(name) {
```

```
@@ -12,7 +12,7 @@ use back::link;
use back::write;
use back::symbol_export;
use rustc::session::config;
use errors::FatalError;
use errors::{FatalError, Handler};
use llvm;
use llvm::archive_ro::ArchiveRO;
use llvm::{ModuleRef, TargetMachineRef, True, False};
```

```
@@ -41,24 +41,24 @@ pub fn crate_type_allows_lto(crate_type: config::CrateType) -> bool {
}

pub fn run(cgcx: &CodegenContext,
diag_handler: &Handler,
llmod: ModuleRef,
tm: TargetMachineRef,
config: &ModuleConfig,
temp_no_opt_bc_filename: &Path) -> Result<(), FatalError> {
let handler = cgcx.handler;
if cgcx.opts.cg.prefer_dynamic {
handler.struct_err("cannot prefer dynamic linking when performing LTO")
.note("only 'staticlib', 'bin', and 'cdylib' outputs are \
supported with LTO")
.emit();
diag_handler.struct_err("cannot prefer dynamic linking when performing LTO")
.note("only 'staticlib', 'bin', and 'cdylib' outputs are \
supported with LTO")
.emit();
return Err(FatalError)
}

// Make sure we actually can run LTO
for crate_type in cgcx.crate_types.iter() {
if !crate_type_allows_lto(*crate_type) {
let e = handler.fatal("lto can only be run for executables, cdylibs and \
static library outputs");
let e = diag_handler.fatal("lto can only be run for executables, cdylibs and \
static library outputs");
return Err(e)
}
}
```
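In `lto::run`, errors are now reported through an explicitly passed `diag_handler: &Handler` instead of `cgcx.handler`. How that handler is built in `back::write` is not visible here, because that file's diff is suppressed. A common way to let worker threads report errors is to give each one a handler that forwards messages over a channel to the main thread. The sketch below shows that pattern with hypothetical types (`DiagHandler`, `FatalError`, `run_lto`, `run_workers`). It is an assumption about the general technique, not rustc's `Handler`.

```rust
use std::sync::mpsc::{channel, Sender};
use std::thread;

/// Hypothetical per-thread diagnostic handler that forwards messages to the main thread.
pub struct DiagHandler {
    tx: Sender<String>,
}

#[derive(Debug)]
pub struct FatalError;

impl DiagHandler {
    pub fn fatal(&self, msg: &str) -> FatalError {
        // Ignore send errors: the receiver may already be gone during shutdown.
        let _ = self.tx.send(format!("error: {}", msg));
        FatalError
    }
}

/// Hypothetical LTO step that reports only through the handler it is given.
fn run_lto(diag: &DiagHandler, prefer_dynamic: bool) -> Result<(), FatalError> {
    if prefer_dynamic {
        return Err(diag.fatal("cannot prefer dynamic linking when performing LTO"));
    }
    Ok(())
}

/// Run one "LTO job" per flag on its own thread; collect diagnostics on this thread.
pub fn run_workers(flags: Vec<bool>) -> Vec<String> {
    let (tx, rx) = channel();
    let handles: Vec<_> = flags
        .into_iter()
        .map(|flag| {
            let diag = DiagHandler { tx: tx.clone() };
            thread::spawn(move || run_lto(&diag, flag).is_ok())
        })
        .collect();
    drop(tx); // only the workers' senders remain, so `rx.iter()` ends when they finish
    for h in handles {
        h.join().unwrap();
    }
    rx.iter().collect()
}
```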

```
@@ -116,13 +116,13 @@ pub fn run(cgcx: &CodegenContext,
if res.is_err() {
let msg = format!("failed to decompress bc of `{}`",
name);
Err(handler.fatal(&msg))
Err(diag_handler.fatal(&msg))
} else {
Ok(inflated)
}
} else {
Err(handler.fatal(&format!("Unsupported bytecode format version {}",
version)))
Err(diag_handler.fatal(&format!("Unsupported bytecode format version {}",
version)))
}
})?
} else {
```

```
@@ -136,7 +136,7 @@ pub fn run(cgcx: &CodegenContext,
if res.is_err() {
let msg = format!("failed to decompress bc of `{}`",
name);
Err(handler.fatal(&msg))
Err(diag_handler.fatal(&msg))
} else {
Ok(inflated)
}
```

```
@@ -152,7 +152,7 @@ pub fn run(cgcx: &CodegenContext,
Ok(())
} else {
let msg = format!("failed to load bc of `{}`", name);
Err(write::llvm_err(handler, msg))
Err(write::llvm_err(&diag_handler, msg))
}
})?;
}
```

File diff suppressed because it is too large


```
@@ -23,29 +23,30 @@
//! but one TypeRef corresponds to many `Ty`s; for instance, tup(int, int,
//! int) and rec(x=int, y=int, z=int) will have the same TypeRef.

use super::CrateTranslation;
use super::ModuleLlvm;
use super::ModuleSource;
use super::ModuleTranslation;
use super::ModuleKind;

use assert_module_sources;
use back::link;
use back::linker::LinkerInfo;
use back::symbol_export::{self, ExportedSymbols};
use back::write::{self, OngoingCrateTranslation};
use llvm::{ContextRef, Linkage, ModuleRef, ValueRef, Vector, get_param};
use llvm;
use metadata;
use rustc::hir::def_id::LOCAL_CRATE;
use rustc::middle::lang_items::StartFnLangItem;
use rustc::middle::cstore::EncodedMetadata;
use rustc::middle::cstore::{EncodedMetadata, EncodedMetadataHashes};
use rustc::ty::{self, Ty, TyCtxt};
use rustc::dep_graph::AssertDepGraphSafe;
use rustc::middle::cstore::LinkMeta;
use rustc::hir::map as hir_map;
use rustc::util::common::time;
use rustc::session::config::{self, NoDebugInfo, OutputFilenames};
use rustc::util::common::{time, print_time_passes_entry};
use rustc::session::config::{self, NoDebugInfo, OutputFilenames, OutputType};
use rustc::session::Session;
use rustc_incremental::IncrementalHashesMap;
use rustc_incremental::{self, IncrementalHashesMap};
use abi;
use allocator;
use mir::lvalue::LvalueRef;
```

```
@@ -68,6 +69,7 @@ use mir;
use monomorphize::{self, Instance};
use partitioning::{self, PartitioningStrategy, CodegenUnit};
use symbol_names_test;
use time_graph;
use trans_item::{TransItem, DefPathBasedNames};
use type_::Type;
use type_of;
```

```
@@ -78,6 +80,7 @@ use libc::c_uint;
use std::ffi::{CStr, CString};
use std::str;
use std::sync::Arc;
use std::time::{Instant, Duration};
use std::i32;
use syntax_pos::Span;
use syntax::attr;
```

```
@@ -647,9 +650,23 @@ pub fn set_link_section(ccx: &CrateContext,
}
}

// check for the #[rustc_error] annotation, which forces an
// error in trans. This is used to write compile-fail tests
// that actually test that compilation succeeds without
// reporting an error.
fn check_for_rustc_errors_attr(tcx: TyCtxt) {
if let Some((id, span)) = *tcx.sess.entry_fn.borrow() {
let main_def_id = tcx.hir.local_def_id(id);

if tcx.has_attr(main_def_id, "rustc_error") {
tcx.sess.span_fatal(span, "compilation successful");
}
}
}

/// Create the `main` function which will initialise the rust runtime and call
/// users main function.
pub fn maybe_create_entry_wrapper(ccx: &CrateContext) {
fn maybe_create_entry_wrapper(ccx: &CrateContext) {
let (main_def_id, span) = match *ccx.sess().entry_fn.borrow() {
Some((id, span)) => {
(ccx.tcx().hir.local_def_id(id), span)
```

```
@@ -657,14 +674,6 @@ pub fn maybe_create_entry_wrapper(ccx: &CrateContext) {
None => return,
};

// check for the #[rustc_error] annotation, which forces an
// error in trans. This is used to write compile-fail tests
// that actually test that compilation succeeds without
// reporting an error.
if ccx.tcx().has_attr(main_def_id, "rustc_error") {
ccx.tcx().sess.span_fatal(span, "compilation successful");
}

let instance = Instance::mono(ccx.tcx(), main_def_id);

if !ccx.codegen_unit().contains_item(&TransItem::Fn(instance)) {
```

```
@@ -728,7 +737,8 @@ fn contains_null(s: &str) -> bool {
fn write_metadata<'a, 'gcx>(tcx: TyCtxt<'a, 'gcx, 'gcx>,
link_meta: &LinkMeta,
exported_symbols: &NodeSet)
-> (ContextRef, ModuleRef, EncodedMetadata) {
-> (ContextRef, ModuleRef,
EncodedMetadata, EncodedMetadataHashes) {
use std::io::Write;
use flate2::Compression;
use flate2::write::DeflateEncoder;
```

```
@@ -758,15 +768,18 @@ fn write_metadata<'a, 'gcx>(tcx: TyCtxt<'a, 'gcx, 'gcx>,
}).max().unwrap();

if kind == MetadataKind::None {
return (metadata_llcx, metadata_llmod, EncodedMetadata::new());
return (metadata_llcx,
metadata_llmod,
EncodedMetadata::new(),
EncodedMetadataHashes::new());
}

let cstore = &tcx.sess.cstore;
let metadata = cstore.encode_metadata(tcx,
&link_meta,
exported_symbols);
let (metadata, hashes) = cstore.encode_metadata(tcx,
&link_meta,
exported_symbols);
if kind == MetadataKind::Uncompressed {
return (metadata_llcx, metadata_llmod, metadata);
return (metadata_llcx, metadata_llmod, metadata, hashes);
}

assert!(kind == MetadataKind::Compressed);
```

```
@@ -794,7 +807,7 @@ fn write_metadata<'a, 'gcx>(tcx: TyCtxt<'a, 'gcx, 'gcx>,
let directive = CString::new(directive).unwrap();
llvm::LLVMSetModuleInlineAsm(metadata_llmod, directive.as_ptr())
}
return (metadata_llcx, metadata_llmod, metadata);
return (metadata_llcx, metadata_llmod, metadata, hashes);
}

// Create a `__imp_<symbol> = &symbol` global for every public static `symbol`.
```

```
@@ -803,7 +816,7 @@ fn write_metadata<'a, 'gcx>(tcx: TyCtxt<'a, 'gcx, 'gcx>,
// code references on its own.
// See #26591, #27438
fn create_imps(sess: &Session,
llvm_modules: &[ModuleLlvm]) {
llvm_module: &ModuleLlvm) {
// The x86 ABI seems to require that leading underscores are added to symbol
// names, so we need an extra underscore on 32-bit. There's also a leading
// '\x01' here which disables LLVM's symbol mangling (e.g. no extra
```

```
@@ -814,28 +827,26 @@ fn create_imps(sess: &Session,
"\x01__imp_"
};
unsafe {
for ll in llvm_modules {
let exported: Vec<_> = iter_globals(ll.llmod)
.filter(|&val| {
llvm::LLVMRustGetLinkage(val) ==
llvm::Linkage::ExternalLinkage &&
llvm::LLVMIsDeclaration(val) == 0
})
.collect();
let exported: Vec<_> = iter_globals(llvm_module.llmod)
.filter(|&val| {
llvm::LLVMRustGetLinkage(val) ==
llvm::Linkage::ExternalLinkage &&
llvm::LLVMIsDeclaration(val) == 0
})
.collect();

let i8p_ty = Type::i8p_llcx(ll.llcx);
for val in exported {
let name = CStr::from_ptr(llvm::LLVMGetValueName(val));
let mut imp_name = prefix.as_bytes().to_vec();
imp_name.extend(name.to_bytes());
let imp_name = CString::new(imp_name).unwrap();
let imp = llvm::LLVMAddGlobal(ll.llmod,
i8p_ty.to_ref(),
imp_name.as_ptr() as *const _);
let init = llvm::LLVMConstBitCast(val, i8p_ty.to_ref());
llvm::LLVMSetInitializer(imp, init);
llvm::LLVMRustSetLinkage(imp, llvm::Linkage::ExternalLinkage);
}
let i8p_ty = Type::i8p_llcx(llvm_module.llcx);
for val in exported {
let name = CStr::from_ptr(llvm::LLVMGetValueName(val));
let mut imp_name = prefix.as_bytes().to_vec();
imp_name.extend(name.to_bytes());
let imp_name = CString::new(imp_name).unwrap();
let imp = llvm::LLVMAddGlobal(llvm_module.llmod,
i8p_ty.to_ref(),
imp_name.as_ptr() as *const _);
let init = llvm::LLVMConstBitCast(val, i8p_ty.to_ref());
llvm::LLVMSetInitializer(imp, init);
llvm::LLVMRustSetLinkage(imp, llvm::Linkage::ExternalLinkage);
}
}
}
```
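`create_imps` now takes a single `&ModuleLlvm` instead of a slice, presumably so it can be applied to each module on its own. The per-symbol naming step is simple. According to the comment in the hunk, a leading `\x01` disables LLVM's symbol mangling, and 32-bit x86 needs an extra underscore. The sketch below isolates that step. It assumes a boolean `is_x86_32` in place of the target check, which is elided in this hunk, and assumes the 32-bit x86 prefix is `"\x01__imp__"`; only the non-x86 prefix `"\x01__imp_"` is visible above.

```rust
use std::ffi::CString;

/// Build the `__imp_` alias name for an exported symbol.
/// `is_x86_32` is a hypothetical stand-in for the elided target check.
pub fn imp_name(symbol: &[u8], is_x86_32: bool) -> CString {
    // Assumed x86 prefix: one extra underscore, per the comment in `create_imps`.
    let prefix = if is_x86_32 { "\x01__imp__" } else { "\x01__imp_" };
    let mut name = prefix.as_bytes().to_vec();
    name.extend_from_slice(symbol);
    // Names obtained via `CStr::to_bytes` contain no interior NUL, so this cannot fail for them.
    CString::new(name).expect("symbol name contained an interior NUL byte")
}
```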

```
@@ -920,27 +931,26 @@ pub fn find_exported_symbols(tcx: TyCtxt, reachable: &NodeSet) -> NodeSet {

pub fn trans_crate<'a, 'tcx>(tcx: TyCtxt<'a, 'tcx, 'tcx>,
analysis: ty::CrateAnalysis,
incremental_hashes_map: &IncrementalHashesMap,
incremental_hashes_map: IncrementalHashesMap,
output_filenames: &OutputFilenames)
-> CrateTranslation {
-> OngoingCrateTranslation {
check_for_rustc_errors_attr(tcx);

// Be careful with this krate: obviously it gives access to the
// entire contents of the krate. So if you push any subtasks of
// `TransCrate`, you need to be careful to register "reads" of the
// particular items that will be processed.
let krate = tcx.hir.krate();

let ty::CrateAnalysis { reachable, .. } = analysis;

let check_overflow = tcx.sess.overflow_checks();

let link_meta = link::build_link_meta(incremental_hashes_map);

let link_meta = link::build_link_meta(&incremental_hashes_map);
let exported_symbol_node_ids = find_exported_symbols(tcx, &reachable);

let shared_ccx = SharedCrateContext::new(tcx,
check_overflow,
output_filenames);
// Translate the metadata.
let (metadata_llcx, metadata_llmod, metadata) =
let (metadata_llcx, metadata_llmod, metadata, metadata_incr_hashes) =
time(tcx.sess.time_passes(), "write metadata", || {
write_metadata(tcx, &link_meta, &exported_symbol_node_ids)
});
```

@@ -952,27 +962,44 @@ pub fn trans_crate<'a, 'tcx>(tcx: TyCtxt<'a, 'tcx, 'tcx>,
llcx: metadata_llcx,
llmod: metadata_llmod,
}),
kind: ModuleKind::Metadata,
};

let no_builtins = attr::contains_name(&krate.attrs, "no_builtins");
let time_graph = if tcx.sess.opts.debugging_opts.trans_time_graph {
Some(time_graph::TimeGraph::new())
} else {
None
};

// Skip crate items and just output metadata in -Z no-trans mode.
if tcx.sess.opts.debugging_opts.no_trans ||
!tcx.sess.opts.output_types.should_trans() {
let empty_exported_symbols = ExportedSymbols::empty();
let linker_info = LinkerInfo::new(&shared_ccx, &empty_exported_symbols);
return CrateTranslation {
crate_name: tcx.crate_name(LOCAL_CRATE),
modules: vec![],
metadata_module: metadata_module,
allocator_module: None,
link: link_meta,
metadata: metadata,
exported_symbols: empty_exported_symbols,
no_builtins: no_builtins,
linker_info: linker_info,
windows_subsystem: None,
};
let ongoing_translation = write::start_async_translation(
tcx.sess,
output_filenames,
time_graph.clone(),
tcx.crate_name(LOCAL_CRATE),
link_meta,
metadata,
Arc::new(empty_exported_symbols),
no_builtins,
None,
linker_info,
false);

ongoing_translation.submit_pre_translated_module_to_llvm(tcx.sess, metadata_module, true);

assert_and_save_dep_graph(tcx,
incremental_hashes_map,
metadata_incr_hashes,
link_meta);

ongoing_translation.check_for_errors(tcx.sess);

return ongoing_translation;
}

let exported_symbols = Arc::new(ExportedSymbols::compute(tcx,

@@ -983,12 +1010,110 @@ pub fn trans_crate<'a, 'tcx>(tcx: TyCtxt<'a, 'tcx, 'tcx>,
let (translation_items, codegen_units) =
collect_and_partition_translation_items(&shared_ccx, &exported_symbols);

assert!(codegen_units.len() <= 1 || !tcx.sess.lto());

let linker_info = LinkerInfo::new(&shared_ccx, &exported_symbols);
let subsystem = attr::first_attr_value_str_by_name(&krate.attrs,
"windows_subsystem");
let windows_subsystem = subsystem.map(|subsystem| {
if subsystem != "windows" && subsystem != "console" {
tcx.sess.fatal(&format!("invalid windows subsystem `{}`, only \
`windows` and `console` are allowed",
subsystem));
}
subsystem.to_string()
});

let no_integrated_as = tcx.sess.opts.cg.no_integrated_as ||
(tcx.sess.target.target.options.no_integrated_as &&
(output_filenames.outputs.contains_key(&OutputType::Object) ||
output_filenames.outputs.contains_key(&OutputType::Exe)));

let ongoing_translation = write::start_async_translation(
tcx.sess,
output_filenames,
time_graph.clone(),
tcx.crate_name(LOCAL_CRATE),
link_meta,
metadata,
exported_symbols.clone(),
no_builtins,
windows_subsystem,
linker_info,
no_integrated_as);

// Translate an allocator shim, if any
//
// If LTO is enabled and we've got some previous LLVM module we translated
// above, then we can just translate directly into that LLVM module. If not,
// however, we need to create a separate module and trans into that. Note
// that the separate translation is critical for the standard library where
// the rlib's object file doesn't have allocator functions but the dylib
// links in an object file that has allocator functions. When we're
// compiling a final LTO artifact, though, there's no need to worry about
// this as we're not working with this dual "rlib/dylib" functionality.
let allocator_module = if tcx.sess.lto() {
None
} else if let Some(kind) = tcx.sess.allocator_kind.get() {
unsafe {
let (llcx, llmod) =
context::create_context_and_module(tcx.sess, "allocator");
let modules = ModuleLlvm {
llmod: llmod,
llcx: llcx,
};
time(tcx.sess.time_passes(), "write allocator module", || {
allocator::trans(tcx, &modules, kind)
});

Some(ModuleTranslation {
name: link::ALLOCATOR_MODULE_NAME.to_string(),
symbol_name_hash: 0, // we always rebuild allocator shims
source: ModuleSource::Translated(modules),
kind: ModuleKind::Allocator,
})
}
} else {
None
};

if let Some(allocator_module) = allocator_module {
ongoing_translation.submit_pre_translated_module_to_llvm(tcx.sess, allocator_module, false);
}

let codegen_unit_count = codegen_units.len();
ongoing_translation.submit_pre_translated_module_to_llvm(tcx.sess,
metadata_module,
codegen_unit_count == 0);

let translation_items = Arc::new(translation_items);

let mut all_stats = Stats::default();
let modules: Vec<ModuleTranslation> = codegen_units
.into_iter()
.map(|cgu| {
let mut module_dispositions = tcx.sess.opts.incremental.as_ref().map(|_| Vec::new());

// We sort the codegen units by size. This way we can schedule work for LLVM
// a bit more efficiently. Note that "size" is defined rather crudely at the
// moment as it is just the number of TransItems in the CGU, not taking into
// account the size of each TransItem.
let codegen_units = {
let mut codegen_units = codegen_units;
codegen_units.sort_by_key(|cgu| -(cgu.items().len() as isize));
codegen_units
};

let mut total_trans_time = Duration::new(0, 0);

for (cgu_index, cgu) in codegen_units.into_iter().enumerate() {
ongoing_translation.wait_for_signal_to_translate_item();
ongoing_translation.check_for_errors(tcx.sess);

let start_time = Instant::now();

let module = {
let _timing_guard = time_graph
.as_ref()
.map(|time_graph| time_graph.start(write::TRANS_WORKER_TIMELINE,
write::TRANS_WORK_PACKAGE_KIND));
let dep_node = cgu.work_product_dep_node();
let ((stats, module), _) =
tcx.dep_graph.with_task(dep_node,

@@ -998,9 +1123,41 @@ pub fn trans_crate<'a, 'tcx>(tcx: TyCtxt<'a, 'tcx, 'tcx>,
exported_symbols.clone())),
module_translation);
all_stats.extend(stats);

if let Some(ref mut module_dispositions) = module_dispositions {
module_dispositions.push(module.disposition());
}

module
})
.collect();
};

let time_to_translate = Instant::now().duration_since(start_time);

// We assume that the cost to run LLVM on a CGU is proportional to
// the time we needed for translating it.
let cost = time_to_translate.as_secs() * 1_000_000_000 +
time_to_translate.subsec_nanos() as u64;

total_trans_time += time_to_translate;

let is_last_cgu = (cgu_index + 1) == codegen_unit_count;

ongoing_translation.submit_translated_module_to_llvm(tcx.sess,
module,
cost,
is_last_cgu);
ongoing_translation.check_for_errors(tcx.sess);
}

// Since the main thread is sometimes blocked during trans, we keep track
// -Ztime-passes output manually.
print_time_passes_entry(tcx.sess.time_passes(),
"translate to LLVM IR",
total_trans_time);

if let Some(module_dispositions) = module_dispositions {
assert_module_sources::assert_module_sources(tcx, &module_dispositions);
}

fn module_translation<'a, 'tcx>(
scx: AssertDepGraphSafe<&SharedCrateContext<'a, 'tcx>>,

@@ -1044,7 +1201,8 @@ pub fn trans_crate<'a, 'tcx>(tcx: TyCtxt<'a, 'tcx, 'tcx>,
let module = ModuleTranslation {
name: cgu_name,
symbol_name_hash,
source: ModuleSource::Preexisting(buf.clone())
source: ModuleSource::Preexisting(buf.clone()),
kind: ModuleKind::Regular,
};
return (Stats::default(), module);
}

@@ -1099,21 +1257,40 @@ pub fn trans_crate<'a, 'tcx>(tcx: TyCtxt<'a, 'tcx, 'tcx>,
debuginfo::finalize(&ccx);
}

let llvm_module = ModuleLlvm {
llcx: ccx.llcx(),
llmod: ccx.llmod(),
};

// In LTO mode we inject the allocator shim into the existing
// module.
if ccx.sess().lto() {
if let Some(kind) = ccx.sess().allocator_kind.get() {
time(ccx.sess().time_passes(), "write allocator module", || {
unsafe {
allocator::trans(ccx.tcx(), &llvm_module, kind);
}
});
}
}

// Adjust exported symbols for MSVC dllimport
if ccx.sess().target.target.options.is_like_msvc &&
ccx.sess().crate_types.borrow().iter().any(|ct| *ct == config::CrateTypeRlib) {
create_imps(ccx.sess(), &llvm_module);
}

ModuleTranslation {
name: cgu_name,
symbol_name_hash,
source: ModuleSource::Translated(ModuleLlvm {
llcx: ccx.llcx(),
llmod: ccx.llmod(),
})
source: ModuleSource::Translated(llvm_module),
kind: ModuleKind::Regular,
}
};

(lcx.into_stats(), module)
}

assert_module_sources::assert_module_sources(tcx, &modules);

symbol_names_test::report_symbol_names(tcx);

if shared_ccx.sess().trans_stats() {

@@ -1144,85 +1321,29 @@ pub fn trans_crate<'a, 'tcx>(tcx: TyCtxt<'a, 'tcx, 'tcx>,
}
}

let sess = shared_ccx.sess();
ongoing_translation.check_for_errors(tcx.sess);

// Get the list of llvm modules we created. We'll do a few wacky
// transforms on them now.
assert_and_save_dep_graph(tcx,
incremental_hashes_map,
metadata_incr_hashes,
link_meta);
ongoing_translation
}

let llvm_modules: Vec<_> =
modules.iter()
.filter_map(|module| match module.source {
ModuleSource::Translated(llvm) => Some(llvm),
_ => None,
})
.collect();
fn assert_and_save_dep_graph<'a, 'tcx>(tcx: TyCtxt<'a, 'tcx, 'tcx>,
incremental_hashes_map: IncrementalHashesMap,
metadata_incr_hashes: EncodedMetadataHashes,
link_meta: LinkMeta) {
time(tcx.sess.time_passes(),
"assert dep graph",
|| rustc_incremental::assert_dep_graph(tcx));

if sess.target.target.options.is_like_msvc &&
sess.crate_types.borrow().iter().any(|ct| *ct == config::CrateTypeRlib) {
create_imps(sess, &llvm_modules);
}

// Translate an allocator shim, if any
//
// If LTO is enabled and we've got some previous LLVM module we translated
// above, then we can just translate directly into that LLVM module. If not,
// however, we need to create a separate module and trans into that. Note
// that the separate translation is critical for the standard library where
// the rlib's object file doesn't have allocator functions but the dylib
// links in an object file that has allocator functions. When we're
// compiling a final LTO artifact, though, there's no need to worry about
// this as we're not working with this dual "rlib/dylib" functionality.
let allocator_module = tcx.sess.allocator_kind.get().and_then(|kind| unsafe {
if sess.lto() && llvm_modules.len() > 0 {
time(tcx.sess.time_passes(), "write allocator module", || {
allocator::trans(tcx, &llvm_modules[0], kind)
});
None
} else {
let (llcx, llmod) =
context::create_context_and_module(tcx.sess, "allocator");
let modules = ModuleLlvm {
llmod: llmod,
llcx: llcx,
};
time(tcx.sess.time_passes(), "write allocator module", || {
allocator::trans(tcx, &modules, kind)
});

Some(ModuleTranslation {
name: link::ALLOCATOR_MODULE_NAME.to_string(),
symbol_name_hash: 0, // we always rebuild allocator shims
source: ModuleSource::Translated(modules),
})
}
});

let linker_info = LinkerInfo::new(&shared_ccx, &exported_symbols);

let subsystem = attr::first_attr_value_str_by_name(&krate.attrs,
"windows_subsystem");
let windows_subsystem = subsystem.map(|subsystem| {
if subsystem != "windows" && subsystem != "console" {
tcx.sess.fatal(&format!("invalid windows subsystem `{}`, only \
`windows` and `console` are allowed",
subsystem));
}
subsystem.to_string()
});

CrateTranslation {
crate_name: tcx.crate_name(LOCAL_CRATE),
modules: modules,
metadata_module: metadata_module,
allocator_module: allocator_module,
link: link_meta,
metadata: metadata,
exported_symbols: Arc::try_unwrap(exported_symbols)
.expect("There's still a reference to exported_symbols?"),
no_builtins: no_builtins,
linker_info: linker_info,
windows_subsystem: windows_subsystem,
}
time(tcx.sess.time_passes(),
"serialize dep graph",
|| rustc_incremental::save_dep_graph(tcx,
incremental_hashes_map,
&metadata_incr_hashes,
link_meta.crate_hash));
}

#[inline(never)] // give this a place in the profiler
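Two heuristics in the `trans_crate` changes above drive the new scheduling: codegen units are sorted largest-first, with "size" crudely defined as the number of TransItems in a CGU, and the cost of running LLVM on a CGU is assumed to be proportional to the time spent translating it, expressed as an integer number of nanoseconds. The following is a minimal sketch that isolates those two steps outside rustc. `Cgu`, `sort_largest_first` and `cost_from_duration` are hypothetical names for illustration only, not rustc APIs, and this is not the actual work scheduler.

```rust
use std::cmp::Reverse;
use std::time::Duration;

/// Hypothetical stand-in for a codegen unit: only its item count matters here.
struct Cgu {
    name: &'static str,
    item_count: usize,
}

/// Sorts largest-first by item count, the same order the diff gets from
/// `sort_by_key(|cgu| -(cgu.items().len() as isize))`.
fn sort_largest_first(cgus: &mut [Cgu]) {
    cgus.sort_by_key(|cgu| Reverse(cgu.item_count));
}

/// Turns a measured translation time into an integer cost in nanoseconds,
/// matching the `as_secs() * 1_000_000_000 + subsec_nanos()` expression.
fn cost_from_duration(d: Duration) -> u64 {
    d.as_secs() * 1_000_000_000 + d.subsec_nanos() as u64
}

fn main() {
    let mut cgus = vec![
        Cgu { name: "small", item_count: 3 },
        Cgu { name: "large", item_count: 40 },
        Cgu { name: "medium", item_count: 12 },
    ];
    sort_largest_first(&mut cgus);
    let order: Vec<&str> = cgus.iter().map(|cgu| cgu.name).collect();
    println!("{:?}", order); // ["large", "medium", "small"]

    // 2s + 500ns of translation time becomes a cost of 2_000_000_500.
    println!("{}", cost_from_duration(Duration::new(2, 500))); // 2000000500
}
```

`std::cmp::Reverse` yields the same descending order as the negated `isize` key in the diff without needing a signed cast. Because `sort_by_key` is stable, CGUs of equal size keep their original relative order.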

@@ -36,9 +36,9 @@

use rustc::dep_graph::WorkProduct;
use syntax_pos::symbol::Symbol;
use std::sync::Arc;

extern crate flate2;
extern crate crossbeam;
extern crate libc;
extern crate owning_ref;
#[macro_use] extern crate rustc;

@@ -54,6 +54,7 @@ extern crate rustc_const_math;
extern crate rustc_bitflags;
extern crate rustc_demangle;
extern crate jobserver;
extern crate num_cpus;

#[macro_use] extern crate log;
#[macro_use] extern crate syntax;

@@ -124,13 +125,13 @@ mod mir;
mod monomorphize;
mod partitioning;
mod symbol_names_test;
mod time_graph;
mod trans_item;
mod tvec;
mod type_;
mod type_of;
mod value;

#[derive(Clone)]
pub struct ModuleTranslation {
/// The name of the module. When the crate may be saved between
/// compilations, incremental compilation requires that name be

@@ -140,6 +141,58 @@ pub struct ModuleTranslation {
pub name: String,
pub symbol_name_hash: u64,
pub source: ModuleSource,
pub kind: ModuleKind,
}

#[derive(Copy, Clone, Debug)]
pub enum ModuleKind {
Regular,
Metadata,
Allocator,
}

impl ModuleTranslation {
pub fn into_compiled_module(self, emit_obj: bool, emit_bc: bool) -> CompiledModule {
let pre_existing = match self.source {
ModuleSource::Preexisting(_) => true,
ModuleSource::Translated(_) => false,
};

CompiledModule {
name: self.name.clone(),
kind: self.kind,
symbol_name_hash: self.symbol_name_hash,
pre_existing,
emit_obj,
emit_bc,
}
}
}

impl Drop for ModuleTranslation {
fn drop(&mut self) {
match self.source {
ModuleSource::Preexisting(_) => {
// Nothing to dispose.
},
ModuleSource::Translated(llvm) => {
unsafe {
llvm::LLVMDisposeModule(llvm.llmod);
llvm::LLVMContextDispose(llvm.llcx);
}
},
}
}
}

#[derive(Debug)]
pub struct CompiledModule {
pub name: String,
pub kind: ModuleKind,
pub symbol_name_hash: u64,
pub pre_existing: bool,
pub emit_obj: bool,
pub emit_bc: bool,
}

#[derive(Clone)]

@@ -151,7 +204,7 @@ pub enum ModuleSource {
Translated(ModuleLlvm),
}

#[derive(Copy, Clone)]
#[derive(Copy, Clone, Debug)]
pub struct ModuleLlvm {
pub llcx: llvm::ContextRef,
pub llmod: llvm::ModuleRef,

@@ -162,12 +215,12 @@ unsafe impl Sync for ModuleTranslation { }

pub struct CrateTranslation {
pub crate_name: Symbol,
pub modules: Vec<ModuleTranslation>,
pub metadata_module: ModuleTranslation,
pub allocator_module: Option<ModuleTranslation>,
pub modules: Vec<CompiledModule>,
pub metadata_module: CompiledModule,
pub allocator_module: Option<CompiledModule>,
pub link: rustc::middle::cstore::LinkMeta,
pub metadata: rustc::middle::cstore::EncodedMetadata,
pub exported_symbols: back::symbol_export::ExportedSymbols,
pub exported_symbols: Arc<back::symbol_export::ExportedSymbols>,
pub no_builtins: bool,
pub windows_subsystem: Option<String>,
pub linker_info: back::linker::LinkerInfo
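The new `time_graph.rs` file records one span per work package through an RAII guard: `TimeGraph::start` opens a span on a timeline, and dropping the returned `RaiiToken` closes it. `dump` then converts each span to nanoseconds since the earliest start (`distance`) and scales it onto a 1000px-wide axis (`normalize`). Below is a minimal single-threaded sketch of the same pattern. `SpanGraph` and `SpanToken` are hypothetical names, and `Rc<RefCell<..>>` stands in for the original's `Arc<Mutex<..>>` because only one thread is involved here.

```rust
use std::cell::RefCell;
use std::rc::Rc;
use std::time::{Duration, Instant};

/// Hypothetical single-threaded analogue of `TimeGraph`: a shared list of closed spans.
#[derive(Clone, Default)]
struct SpanGraph {
    spans: Rc<RefCell<Vec<(Instant, Instant)>>>,
}

/// Guard that records its span when dropped, like `RaiiToken`.
struct SpanToken {
    graph: SpanGraph,
    start: Instant,
}

impl SpanGraph {
    /// Opens a span; it is closed when the returned token goes out of scope.
    fn start(&self) -> SpanToken {
        SpanToken { graph: self.clone(), start: Instant::now() }
    }
}

impl Drop for SpanToken {
    fn drop(&mut self) {
        let end = Instant::now();
        self.graph.spans.borrow_mut().push((self.start, end));
    }
}

/// Nanoseconds elapsed from `zero` to `x`, as in the diff's `distance`.
fn distance(zero: Instant, x: Instant) -> u64 {
    let d = x.duration_since(zero);
    d.as_secs() * 1_000_000_000 + d.subsec_nanos() as u64
}

/// Scales `distance` in `[0, max]` to `[0, max_pixels]`, as in the diff's `normalize`.
fn normalize(distance: u64, max: u64, max_pixels: u64) -> u64 {
    (max_pixels * distance) / max
}

fn main() {
    let graph = SpanGraph::default();
    {
        let _guard = graph.start();
        std::thread::sleep(Duration::from_millis(5));
    } // `_guard` is dropped here, which records the span

    let (start, end) = graph.spans.borrow()[0];
    println!("recorded {} span(s)", graph.spans.borrow().len()); // recorded 1 span(s)
    println!("span length: {}ns", distance(start, end));

    // 5ms into a 20ms timeline lands a quarter of the way across 1000px.
    println!("{}px", normalize(5_000_000, 20_000_000, 1000)); // 250px
}
```

As in the original `normalize`, the division assumes `max` is non-zero, that is, that the recorded timelines cover a non-zero amount of time.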

181

src/librustc_trans/time_graph.rs

Normal file

@@ -0,0 +1,181 @@
// Copyright 2017 The Rust Project Developers. See the COPYRIGHT
// file at the top-level directory of this distribution and at
// http://rust-lang.org/COPYRIGHT.
//
// Licensed under the Apache License, Version 2.0 <LICENSE-APACHE or
// http://www.apache.org/licenses/LICENSE-2.0> or the MIT license
// <LICENSE-MIT or http://opensource.org/licenses/MIT>, at your
// option. This file may not be copied, modified, or distributed
// except according to those terms.

use std::collections::HashMap;
use std::marker::PhantomData;
use std::sync::{Arc, Mutex};
use std::time::Instant;
use std::io::prelude::*;
use std::fs::File;

const OUTPUT_WIDTH_IN_PX: u64 = 1000;
const TIME_LINE_HEIGHT_IN_PX: u64 = 7;
const TIME_LINE_HEIGHT_STRIDE_IN_PX: usize = 10;

#[derive(Clone)]
struct Timing {
start: Instant,
end: Instant,
work_package_kind: WorkPackageKind,
}

#[derive(Clone, Copy, Hash, Eq, PartialEq, Debug)]
pub struct TimelineId(pub usize);

#[derive(Clone)]
struct PerThread {
timings: Vec<Timing>,
open_work_package: Option<(Instant, WorkPackageKind)>,
}

#[derive(Clone)]
pub struct TimeGraph {
data: Arc<Mutex<HashMap<TimelineId, PerThread>>>,
}

#[derive(Clone, Copy)]
pub struct WorkPackageKind(pub &'static [&'static str]);

pub struct RaiiToken {
graph: TimeGraph,
timeline: TimelineId,
// The token must not be Send:
_marker: PhantomData<*const ()>
}


impl Drop for RaiiToken {
fn drop(&mut self) {
self.graph.end(self.timeline);
}
}

impl TimeGraph {
pub fn new() -> TimeGraph {
TimeGraph {
data: Arc::new(Mutex::new(HashMap::new()))
}
}

pub fn start(&self,
timeline: TimelineId,
work_package_kind: WorkPackageKind) -> RaiiToken {
{
let mut table = self.data.lock().unwrap();

let mut data = table.entry(timeline).or_insert(PerThread {
timings: Vec::new(),
open_work_package: None,
});

assert!(data.open_work_package.is_none());
data.open_work_package = Some((Instant::now(), work_package_kind));
}

RaiiToken {
graph: self.clone(),
timeline,
_marker: PhantomData,
}
}

fn end(&self, timeline: TimelineId) {
let end = Instant::now();

let mut table = self.data.lock().unwrap();
let mut data = table.get_mut(&timeline).unwrap();

if let Some((start, work_package_kind)) = data.open_work_package {
data.timings.push(Timing {
start,
end,
work_package_kind,
});
} else {
bug!("end timing without start?")
}

data.open_work_package = None;
}

pub fn dump(&self, output_filename: &str) {
let table = self.data.lock().unwrap();

for data in table.values() {
assert!(data.open_work_package.is_none());
}

let mut timelines: Vec<PerThread> =
table.values().map(|data| data.clone()).collect();

timelines.sort_by_key(|timeline| timeline.timings[0].start);

let earliest_instant = timelines[0].timings[0].start;
let latest_instant = timelines.iter()
.map(|timeline| timeline.timings
.last()
.unwrap()
.end)
.max()
.unwrap();
let max_distance = distance(earliest_instant, latest_instant);

let mut file = File::create(format!("{}.html", output_filename)).unwrap();

writeln!(file, "<html>").unwrap();
writeln!(file, "<head></head>").unwrap();
writeln!(file, "<body>").unwrap();

let mut color = 0;

for (line_index, timeline) in timelines.iter().enumerate() {
let line_top = line_index * TIME_LINE_HEIGHT_STRIDE_IN_PX;

for span in &timeline.timings {
let start = distance(earliest_instant, span.start);
let end = distance(earliest_instant, span.end);

let start = normalize(start, max_distance, OUTPUT_WIDTH_IN_PX);
let end = normalize(end, max_distance, OUTPUT_WIDTH_IN_PX);

let colors = span.work_package_kind.0;

writeln!(file, "<div style='position:absolute; \
top:{}px; \
left:{}px; \
width:{}px; \
height:{}px; \
background:{};'></div>",
line_top,
start,
end - start,
TIME_LINE_HEIGHT_IN_PX,
colors[color % colors.len()]
).unwrap();

color += 1;
}
}

writeln!(file, "</body>").unwrap();
writeln!(file, "</html>").unwrap();
}
}

fn distance(zero: Instant, x: Instant) -> u64 {

let duration = x.duration_since(zero);
(duration.as_secs() * 1_000_000_000 + duration.subsec_nanos() as u64) // / div
}

fn normalize(distance: u64, max: u64, max_pixels: u64) -> u64 {
(max_pixels * distance) / max