Mirror of https://github.com/rust-lang/rust.git (synced 2025-01-22 12:43:36 +00:00)

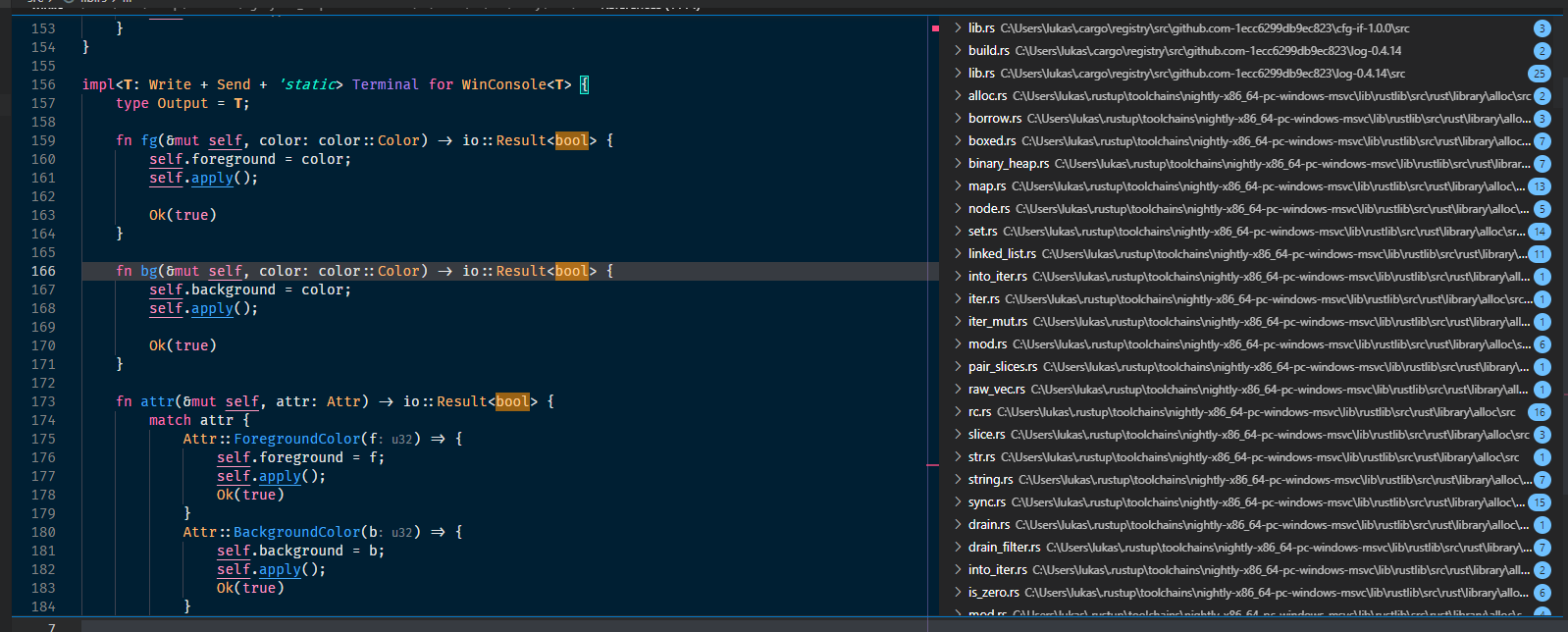

8021: Enable searching for builtin types r=matklad a=Veykril

Not too sure how useful this is for reference search overall, but for completeness' sake it should be there.

Also enables document highlighting for them.

8022: some clippy::performance fixes r=matklad a=matthiaskrgr

- use `vec![]` instead of `Vec::new()` + `push()`
- avoid redundant clones
- use chars instead of `&str` for single-char patterns in `ends_with()` and `starts_with()`
- allocate some `Vec`s with capacity to avoid unnecessary resizing

Co-authored-by: Lukas Wirth <lukastw97@gmail.com>
Co-authored-by: Matthias Krüger <matthias.krueger@famsik.de>


Commit 5138baf2ac

```diff
@@ -266,8 +266,7 @@ impl ModuleDef {
     }

     pub fn canonical_path(&self, db: &dyn HirDatabase) -> Option<String> {
-        let mut segments = Vec::new();
-        segments.push(self.name(db)?.to_string());
+        let mut segments = vec![self.name(db)?.to_string()];
         for m in self.module(db)?.path_to_root(db) {
             segments.extend(m.name(db).map(|it| it.to_string()))
         }
```
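The `canonical_path` change builds the vector with its first element in one step instead of calling `Vec::new()` and then `push`. A minimal sketch of both forms, using hypothetical helpers over plain `&str` module names rather than the `hir` types, shows that they produce the same vector:

```rust
/// Old style (hypothetical helper): create an empty vector, then push the first element.
fn segments_push(name: &str, parents: &[&str]) -> Vec<String> {
    let mut segments = Vec::new();
    segments.push(name.to_string());
    segments.extend(parents.iter().map(|it| it.to_string()));
    segments
}

/// New style (hypothetical helper): `vec![]` creates the vector with its first element.
fn segments_vec_macro(name: &str, parents: &[&str]) -> Vec<String> {
    let mut segments = vec![name.to_string()];
    segments.extend(parents.iter().map(|it| it.to_string()));
    segments
}
```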

```diff
@@ -108,7 +108,7 @@ fn parse_adt(tt: &tt::Subtree) -> Result<BasicAdtInfo, mbe::ExpandError> {
 }

 fn make_type_args(n: usize, bound: Vec<tt::TokenTree>) -> Vec<tt::TokenTree> {
-    let mut result = Vec::<tt::TokenTree>::new();
+    let mut result = Vec::<tt::TokenTree>::with_capacity(n * 2);
     result.push(
         tt::Leaf::Punct(tt::Punct {
             char: '<',
```
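`Vec::with_capacity` reserves space before the pushes, so a vector whose final length is known up front never has to grow. The sketch below is a hypothetical, simplified analogue of `make_type_args` that emits two `String` tokens per parameter instead of `tt::TokenTree`s, and checks that pushing within the reserved capacity leaves the buffer in place:

```rust
/// Hypothetical analogue of `make_type_args`: two tokens (name, comma) per parameter.
/// Returns the tokens and whether any push moved the buffer.
fn build_params(n: usize) -> (Vec<String>, bool) {
    // Two tokens per parameter, so reserve `n * 2` slots before pushing.
    let mut tokens: Vec<String> = Vec::with_capacity(n * 2);
    let start = tokens.as_ptr();
    for i in 0..n {
        tokens.push(format!("T{}", i));
        tokens.push(",".to_string());
    }
    // Pushing while the capacity suffices never reallocates, so the pointer is unchanged.
    let reallocated = tokens.as_ptr() != start;
    (tokens, reallocated)
}
```

The standard library documents that `push` does not reallocate while the reported capacity is sufficient, which is what the pointer comparison relies on.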

```diff
@@ -218,7 +218,7 @@ mod tests {
         let result = join_lines(&file, range);

         let actual = {
-            let mut actual = before.to_string();
+            let mut actual = before;
             result.apply(&mut actual);
             actual
         };
```

```diff
@@ -622,7 +622,7 @@ fn foo() {
         let parse = SourceFile::parse(&before);
         let result = join_lines(&parse.tree(), sel);
         let actual = {
-            let mut actual = before.to_string();
+            let mut actual = before;
             result.apply(&mut actual);
             actual
         };
```
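In these `join_lines` tests `before` appears to already be an owned `String` (`TextEdit::apply` takes a `&mut String`), so `before.to_string()` copied text that can simply be moved. A minimal sketch, with a hypothetical `apply_edit` standing in for `TextEdit::apply`:

```rust
/// Hypothetical stand-in for applying an edit to text the caller already owns.
/// Taking `String` by value lets the function mutate it in place without copying.
fn apply_edit(before: String, suffix: &str) -> String {
    // The old pattern, `let mut actual = before.to_string();`, would allocate
    // a second buffer and copy the text; moving reuses the existing buffer.
    let mut actual = before;
    actual.push_str(suffix);
    actual
}
```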

```diff
@@ -29,7 +29,7 @@ use crate::{display::TryToNav, FilePosition, NavigationTarget};

 #[derive(Debug, Clone)]
 pub struct ReferenceSearchResult {
-    pub declaration: Declaration,
+    pub declaration: Option<Declaration>,
     pub references: FxHashMap<FileId, Vec<(TextRange, Option<ReferenceAccess>)>>,
 }

```

```diff
@@ -91,10 +91,10 @@ pub(crate) fn find_all_refs(
             _ => {}
         }
     }
-    let nav = def.try_to_nav(sema.db)?;
-    let decl_range = nav.focus_or_full_range();
-
-    let declaration = Declaration { nav, access: decl_access(&def, &syntax, decl_range) };
+    let declaration = def.try_to_nav(sema.db).map(|nav| {
+        let decl_range = nav.focus_or_full_range();
+        Declaration { nav, access: decl_access(&def, &syntax, decl_range) }
+    });
     let references = usages
         .into_iter()
         .map(|(file_id, refs)| {
```

```diff
@@ -1004,8 +1004,7 @@ impl Foo {
         let refs = analysis.find_all_refs(pos, search_scope).unwrap().unwrap();

         let mut actual = String::new();
-        {
-            let decl = refs.declaration;
+        if let Some(decl) = refs.declaration {
             format_to!(actual, "{}", decl.nav.debug_render());
             if let Some(access) = decl.access {
                 format_to!(actual, " {:?}", access)
```

```diff
@@ -1258,4 +1257,17 @@ fn main() {
             "#]],
         );
     }
+
+    #[test]
+    fn test_primitives() {
+        check(
+            r#"
+fn foo(_: bool) -> bo$0ol { true }
+"#,
+            expect![[r#"
+                FileId(0) 10..14
+                FileId(0) 19..23
+            "#]],
+        );
+    }
 }
```
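Because `ReferenceSearchResult::declaration` is now an `Option`, callers must handle results that have usages but no declaration, as with the two `bool` occurrences in `test_primitives` (offsets 10..14 and 19..23). The sketch below uses hypothetical, simplified types, not rust-analyzer's, and renders a result in a format loosely modeled on that test's expected output:

```rust
/// Hypothetical, simplified declaration: file id and byte range.
struct Declaration {
    file: u32,
    range: (u32, u32),
}

/// Hypothetical, simplified search result.
struct ReferenceSearchResult {
    /// `None` when the definition has no source location, e.g. a builtin type such as `bool`.
    declaration: Option<Declaration>,
    references: Vec<(u32, (u32, u32))>,
}

/// Writes a declaration line only if one exists, then one line per reference.
fn render(refs: &ReferenceSearchResult) -> String {
    let mut out = String::new();
    if let Some(decl) = &refs.declaration {
        out.push_str(&format!("decl FileId({}) {}..{}\n", decl.file, decl.range.0, decl.range.1));
    }
    for (file, (start, end)) in &refs.references {
        out.push_str(&format!("FileId({}) {}..{}\n", file, start, end));
    }
    out
}
```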

```diff
@@ -510,7 +510,8 @@ fn source_edit_from_def(
     def: Definition,
     new_name: &str,
 ) -> RenameResult<(FileId, TextEdit)> {
-    let nav = def.try_to_nav(sema.db).unwrap();
+    let nav =
+        def.try_to_nav(sema.db).ok_or_else(|| format_err!("No references found at position"))?;

     let mut replacement_text = String::new();
     let mut repl_range = nav.focus_or_full_range();
```
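`source_edit_from_def` now turns a missing navigation target into an error instead of panicking in `.unwrap()`. A minimal sketch of the `ok_or_else(...)?` pattern, where the hypothetical `try_to_nav` returns `None` for `"bool"` and a plain `String` error stands in for the commit's `format_err!`:

```rust
/// Hypothetical lookup: no navigation target for a builtin such as `bool`.
fn try_to_nav(def: &str) -> Option<usize> {
    if def == "bool" {
        None
    } else {
        Some(def.len())
    }
}

/// Converts the `None` case into an error and propagates it with `?`.
fn source_edit(def: &str) -> Result<usize, String> {
    // `ok_or_else` only builds the error message on the `None` path.
    let nav = try_to_nav(def).ok_or_else(|| "No references found at position".to_string())?;
    Ok(nav)
}
```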

```diff
@@ -145,9 +145,8 @@ mod tests {
     use super::*;

     fn do_type_char(char_typed: char, before: &str) -> Option<String> {
-        let (offset, before) = extract_offset(before);
+        let (offset, mut before) = extract_offset(before);
         let edit = TextEdit::insert(offset, char_typed.to_string());
-        let mut before = before.to_string();
         edit.apply(&mut before);
         let parse = SourceFile::parse(&before);
         on_char_typed_inner(&parse.tree(), offset, char_typed).map(|it| {
```

```diff
@@ -59,7 +59,7 @@ pub(crate) fn add_format_like_completions(
 /// Checks whether provided item is a string literal.
 fn string_literal_contents(item: &ast::String) -> Option<String> {
     let item = item.text();
-    if item.len() >= 2 && item.starts_with("\"") && item.ends_with("\"") {
+    if item.len() >= 2 && item.starts_with('\"') && item.ends_with('\"') {
         return Some(item[1..item.len() - 1].to_owned());
     }

```
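Clippy's `single_char_pattern` lint suggests a `char` pattern when a string pattern is a single character; `'\"'` in the commit and `'"'` are the same character. A standalone version of `string_literal_contents` over `&str` (the original takes an `ast::String`):

```rust
/// Returns the text between surrounding double quotes, if both are present.
/// The single-character patterns are passed as `char` rather than `&str`.
fn string_literal_contents(item: &str) -> Option<String> {
    if item.len() >= 2 && item.starts_with('"') && item.ends_with('"') {
        return Some(item[1..item.len() - 1].to_owned());
    }
    None
}
```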

```diff
@@ -70,7 +70,7 @@ impl Definition {
                 hir::ModuleDef::Static(it) => it.name(db)?,
                 hir::ModuleDef::Trait(it) => it.name(db),
                 hir::ModuleDef::TypeAlias(it) => it.name(db),
-                hir::ModuleDef::BuiltinType(_) => return None,
+                hir::ModuleDef::BuiltinType(it) => it.name(),
             },
             Definition::SelfType(_) => return None,
             Definition::Local(it) => it.name(db)?,
```

```diff
@@ -6,7 +6,7 @@

 use std::{convert::TryInto, mem};

-use base_db::{FileId, FileRange, SourceDatabaseExt};
+use base_db::{FileId, FileRange, SourceDatabase, SourceDatabaseExt};
 use hir::{DefWithBody, HasSource, Module, ModuleSource, Semantics, Visibility};
 use once_cell::unsync::Lazy;
 use rustc_hash::FxHashMap;
```

```diff
@@ -138,6 +138,20 @@ impl IntoIterator for SearchScope {
 impl Definition {
     fn search_scope(&self, db: &RootDatabase) -> SearchScope {
         let _p = profile::span("search_scope");
+
+        if let Definition::ModuleDef(hir::ModuleDef::BuiltinType(_)) = self {
+            let mut res = FxHashMap::default();
+
+            let graph = db.crate_graph();
+            for krate in graph.iter() {
+                let root_file = graph[krate].root_file_id;
+                let source_root_id = db.file_source_root(root_file);
+                let source_root = db.source_root(source_root_id);
+                res.extend(source_root.iter().map(|id| (id, None)));
+            }
+            return SearchScope::new(res);
+        }
+
         let module = match self.module(db) {
             Some(it) => it,
             None => return SearchScope::empty(),
```
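For builtin types, the new branch of `search_scope` searches every file in every crate's source root. The sketch below models that walk with hypothetical, simplified lookup tables in place of the crate graph and the source-root queries. As in the commit, each file maps to `None`; here that is assumed to mean "search the whole file":

```rust
use std::collections::HashMap;

/// Hypothetical, simplified database tables.
struct Db {
    /// Root file of each crate.
    crate_roots: Vec<u32>,
    /// File id -> source root id.
    file_source_root: HashMap<u32, u32>,
    /// Source root id -> files it contains.
    source_roots: HashMap<u32, Vec<u32>>,
}

/// Collects every file of every crate's source root, with no range restriction.
fn builtin_search_scope(db: &Db) -> HashMap<u32, Option<(u32, u32)>> {
    let mut res = HashMap::new();
    for &root_file in &db.crate_roots {
        let source_root_id = db.file_source_root[&root_file];
        let files = &db.source_roots[&source_root_id];
        res.extend(files.iter().map(|&id| (id, None)));
    }
    res
}
```

Collecting into a map also deduplicates files when several crates share a source root.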

```diff
@@ -222,14 +222,10 @@ fn convert_doc_comment(token: &syntax::SyntaxToken) -> Option<Vec<tt::TokenTree>
     let doc = comment.kind().doc?;

     // Make `doc="\" Comments\""
-    let mut meta_tkns = Vec::new();
-    meta_tkns.push(mk_ident("doc"));
-    meta_tkns.push(mk_punct('='));
-    meta_tkns.push(mk_doc_literal(&comment));
+    let meta_tkns = vec![mk_ident("doc"), mk_punct('='), mk_doc_literal(&comment)];

     // Make `#![]`
-    let mut token_trees = Vec::new();
-    token_trees.push(mk_punct('#'));
+    let mut token_trees = vec![mk_punct('#')];
     if let ast::CommentPlacement::Inner = doc {
         token_trees.push(mk_punct('!'));
     }
```

```diff
@@ -33,7 +33,7 @@ pub(crate) fn read_info(dylib_path: &Path) -> io::Result<RustCInfo> {
     }

     let version_part = items.next().ok_or(err!("no version string"))?;
-    let mut version_parts = version_part.split("-");
+    let mut version_parts = version_part.split('-');
     let version = version_parts.next().ok_or(err!("no version"))?;
     let channel = version_parts.next().unwrap_or_default().to_string();

```

```diff
@@ -51,7 +51,7 @@ pub(crate) fn read_info(dylib_path: &Path) -> io::Result<RustCInfo> {
     let date = date[0..date.len() - 2].to_string();

     let version_numbers = version
-        .split(".")
+        .split('.')
         .map(|it| it.parse::<usize>())
         .collect::<Result<Vec<_>, _>>()
         .map_err(|_| err!("version number error"))?;
```
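`read_info` splits the version on `'-'` and then `'.'`. A simplified sketch of that parsing, assuming inputs shaped like `1.52.0-nightly` and returning `Option` instead of `io::Result` with the `err!` macro:

```rust
/// Splits e.g. "1.52.0-nightly" into numeric parts and a channel name.
fn parse_version(version_part: &str) -> Option<(Vec<usize>, String)> {
    let mut version_parts = version_part.split('-');
    let version = version_parts.next()?;
    // No '-' suffix (e.g. a stable release) yields an empty channel.
    let channel = version_parts.next().unwrap_or_default().to_string();
    // Any non-numeric component makes the whole parse fail.
    let numbers = version
        .split('.')
        .map(|it| it.parse::<usize>())
        .collect::<Result<Vec<_>, _>>()
        .ok()?;
    Some((numbers, channel))
}
```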

```diff
@@ -6,7 +6,7 @@ use crate::{cfg_flag::CfgFlag, utf8_stdout};

 pub(crate) fn get(target: Option<&str>) -> Vec<CfgFlag> {
     let _p = profile::span("rustc_cfg::get");
-    let mut res = Vec::new();
+    let mut res = Vec::with_capacity(6 * 2 + 1);

     // Some nightly-only cfgs, which are required for stdlib
     res.push(CfgFlag::Atom("target_thread_local".into()));
```

```diff
@@ -846,9 +846,9 @@ pub(crate) fn handle_references(
     };

     let decl = if params.context.include_declaration {
-        Some(FileRange {
-            file_id: refs.declaration.nav.file_id,
-            range: refs.declaration.nav.focus_or_full_range(),
+        refs.declaration.map(|decl| FileRange {
+            file_id: decl.nav.file_id,
+            range: decl.nav.focus_or_full_range(),
         })
     } else {
         None
```

```diff
@@ -1153,14 +1153,12 @@ pub(crate) fn handle_document_highlight(
         Some(refs) => refs,
     };

-    let decl = if refs.declaration.nav.file_id == position.file_id {
-        Some(DocumentHighlight {
-            range: to_proto::range(&line_index, refs.declaration.nav.focus_or_full_range()),
-            kind: refs.declaration.access.map(to_proto::document_highlight_kind),
-        })
-    } else {
-        None
-    };
+    let decl = refs.declaration.filter(|decl| decl.nav.file_id == position.file_id).map(|decl| {
+        DocumentHighlight {
+            range: to_proto::range(&line_index, decl.nav.focus_or_full_range()),
+            kind: decl.access.map(to_proto::document_highlight_kind),
+        }
+    });

     let file_refs = refs.references.get(&position.file_id).map_or(&[][..], Vec::as_slice);
     let mut res = Vec::with_capacity(file_refs.len() + 1);
```
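`Option::filter` followed by `map` replaces the `if`/`else` in `handle_document_highlight`: the declaration is kept only if it exists and lives in the current file. A sketch with a hypothetical `Decl` type standing in for rust-analyzer's `Declaration`, returning just the range instead of a `DocumentHighlight`:

```rust
/// Hypothetical, simplified declaration: owning file and byte range.
struct Decl {
    file_id: u32,
    range: (u32, u32),
}

/// `filter` drops a declaration that is absent or in another file;
/// `map` converts the remaining one into its range.
fn decl_highlight(declaration: Option<Decl>, current_file: u32) -> Option<(u32, u32)> {
    declaration.filter(|decl| decl.file_id == current_file).map(|decl| decl.range)
}
```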

```diff
@@ -360,11 +360,11 @@ mod tests {
             "Completion with disjoint edits is valid"
         );
         assert!(
-            !all_edits_are_disjoint(&completion_with_disjoint_edits, &[joint_edit.clone()]),
+            !all_edits_are_disjoint(&completion_with_disjoint_edits, &[joint_edit]),
             "Completion with disjoint edits and joint extra edit is invalid"
         );
         assert!(
-            all_edits_are_disjoint(&completion_with_disjoint_edits, &[disjoint_edit_2.clone()]),
+            all_edits_are_disjoint(&completion_with_disjoint_edits, &[disjoint_edit_2]),
             "Completion with disjoint edits and joint extra edit is valid"
         );
     }
```
